HBase Cluster Setup

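4.1 Overview

An HBase cluster depends on HDFS. Before installing HBase, make sure a Hadoop cluster is available, and make sure Hadoop is running before you start HBase.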

Startup order: zk -> hadoop (hdfs, then yarn) -> hbase

Shutdown order: hbase -> hadoop (yarn, then hdfs) -> zk
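The ordering above can be captured in a small wrapper. This is a minimal sketch, not the author's script: the function names and the DRY_RUN switch are hypothetical, and it assumes the stock scripts shipped with ZooKeeper, Hadoop and HBase are on the PATH. Note that zkServer.sh only controls the local ZooKeeper instance, so that step still has to be repeated on every ZooKeeper node, and the YARN scripts should be run on whichever node hosts the ResourceManager.

```shell
#!/usr/bin/env bash
# Sketch: run the cluster scripts in the documented order.
# DRY_RUN=1 prints each command instead of executing it.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Startup: ZooKeeper, then HDFS, then YARN, then HBase.
start_cluster() {
  run zkServer.sh start &&
  run start-dfs.sh &&
  run start-yarn.sh &&
  run start-hbase.sh
}

# Shutdown: the exact reverse of startup.
stop_cluster() {
  run stop-hbase.sh &&
  run stop-yarn.sh &&
  run stop-dfs.sh &&
  run zkServer.sh stop
}
```

Running `DRY_RUN=1 start_cluster` first is a cheap way to review the sequence before touching the cluster.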

Important: before installing HBase, make sure Hadoop and ZooKeeper are already installed.

4.2 HBase cluster plan

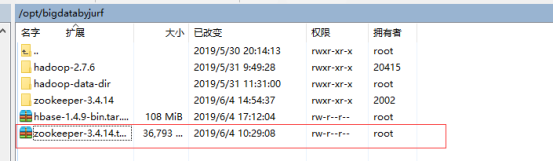

4.3 Upload the tar package

4.4 Extract the tar package

[root@meboth-master bigdatabyjurf]# tar -zxvf hbase-1.4.9-bin.tar.gz

[root@meboth-master bigdatabyjurf]# ls

hadoop-2.7.6 hadoop-data-dir hbase-1.4.9 hbase-1.4.9-bin.tar.gz zookeeper-3.4.14 zookeeper-3.4.14.tar.gz

4.5 Configure environment variables (ZooKeeper and HBase)

#120

export ZOOKEEPER_HOME=/opt/bigdatabyjurf/zookeeper-3.4.14

export PATH=$PATH:$ZOOKEEPER_HOME/bin

export HBASE_HOME=/opt/bigdatabyjurf/hbase-1.4.9

export PATH=$PATH:$HBASE_HOME/bin

#121

export ZOOKEEPER_HOME=/opt/bigdatabyjurf/zookeeper-3.4.14

export PATH=$PATH:$ZOOKEEPER_HOME/bin

#122

export ZOOKEEPER_HOME=/opt/bigdatabyjurf/zookeeper-3.4.14

export PATH=$PATH:$ZOOKEEPER_HOME/bin

Note: run source /etc/profile on every machine.
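After sourcing the profile, it can help to confirm that each variable really points at an installation and that its bin directory made it onto the PATH. The helper below is a hypothetical sketch (check_home is not part of HBase or ZooKeeper); it takes the directory as an argument and reports what it finds.

```shell
# Sketch: verify that an install directory exists and its bin/ is on PATH.
check_home() {
  dir="$1"
  if [ -z "$dir" ]; then
    echo "not set"
    return 1
  fi
  if [ ! -d "$dir/bin" ]; then
    echo "missing: $dir/bin"
    return 1
  fi
  # Wrap PATH in colons so the first and last entries match too.
  case ":$PATH:" in
    *":$dir/bin:"*) echo "ok: $dir" ;;
    *) echo "not on PATH: $dir/bin"; return 1 ;;
  esac
}

# Usage:
#   check_home "$HBASE_HOME"
#   check_home "$ZOOKEEPER_HOME"
```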

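4.6 Configure HBase on 120

4.6.1 Copy Hadoop's hdfs-site.xml and core-site.xml

Copy Hadoop's hdfs-site.xml and core-site.xml into hbase/conf, because HBase stores its data on HDFS and needs the same HDFS client settings.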

[root@meboth-master conf]# cp -r /opt/bigdatabyjurf/hadoop-2.7.6/etc/hadoop/hdfs-site.xml .

[root@meboth-master conf]# ls

hadoop-metrics2-hbase.properties hbase-env.cmd hbase-env.sh hbase-policy.xml hbase-site.xml hdfs-site.xml log4j.properties regionservers

[root@meboth-master conf]# cp -r /opt/bigdatabyjurf/hadoop-2.7.6/etc/hadoop/core-site.xml .

[root@meboth-master conf]# ls

core-site.xml hadoop-metrics2-hbase.properties hbase-env.cmd hbase-env.sh hbase-policy.xml hbase-site.xml hdfs-site.xml log4j.properties regionservers

[root@meboth-master conf]#

4.6.2 Configure hbase-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8.0_171

# Tell HBase to use the external ZooKeeper instead of managing its own

export HBASE_MANAGES_ZK=false

4.6.3 Configure hbase-site.xml

<configuration>

<!-- Path on HDFS where HBase stores its data -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://meboth-master:9000/hbase</value>

</property>

<!-- Run HBase in distributed mode -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- ZooKeeper quorum addresses; separate multiple entries with "," -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>meboth-master:2181,meboth-slaver01:2181,meboth-slaver02:2181</value>

</property>

</configuration>

Important: the value of hbase.rootdir must be based on the fs.defaultFS property in Hadoop's core-site.xml:

<property>

<name>fs.defaultFS</name>

<value>hdfs://meboth-master:9000</value>

</property>

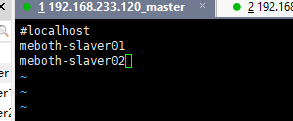
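The relationship between the two properties can be checked mechanically. The sketch below is an illustration under assumptions: xml_prop and check_rootdir are hypothetical helper names, and the awk extraction only handles the simple name/value layout used in the files above, not arbitrary XML.

```shell
# Sketch: print the <value> that follows <name>KEY</name> in a
# Hadoop-style configuration file. Usage: xml_prop FILE KEY
xml_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }' "$1"
}

# Succeed when hbase.rootdir lives under fs.defaultFS.
# Usage: check_rootdir CORE_SITE_XML HBASE_SITE_XML
check_rootdir() {
  fs="$(xml_prop "$1" fs.defaultFS)"
  fs="${fs%/}"   # tolerate a trailing slash
  root="$(xml_prop "$2" hbase.rootdir)"
  if [ -z "$fs" ]; then
    echo "fs.defaultFS not found in $1"
    return 1
  fi
  case "$root" in
    "$fs"/*) echo "ok: $root is under $fs" ;;
    *) echo "mismatch: hbase.rootdir=$root fs.defaultFS=$fs"; return 1 ;;
  esac
}
```

From the conf directory this would be called as `check_rootdir core-site.xml hbase-site.xml`. On a node with Hadoop installed, `hdfs getconf -confKey fs.defaultFS` reports the effective value without parsing the file by hand.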

4.6.4 Configure the regionservers file

[root@meboth-master conf]# vim regionservers
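The file contents are not shown here. Judging from the startup log in section 4.9, region servers run on meboth-slaver01 and meboth-slaver02, so a non-interactive equivalent of the edit might look like the sketch below (write_regionservers is a hypothetical helper name). The same log also contains an ssh error for "#localhost", which suggests a commented-out line was left in a host list and treated as a hostname; deleting unwanted lines rather than commenting them out avoids that.

```shell
# Sketch: write one region server hostname per line.
# Hostnames are inferred from the startup log in section 4.9.
write_regionservers() {
  conf_dir="$1"
  cat > "$conf_dir/regionservers" <<'EOF'
meboth-slaver01
meboth-slaver02
EOF
}

# Usage:
#   write_regionservers /opt/bigdatabyjurf/hbase-1.4.9/conf
```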

4.6.5 Configure the backup-masters file

Note: the backup-masters file does not exist by default and must be created manually.

[root@meboth-master conf]# echo meboth-slaver01 > backup-masters

[root@meboth-master conf]# more backup-masters

meboth-slaver01

[root@meboth-master conf]#

Reference: https://www.cnblogs.com/LHWorldBlog/p/8277509.html

4.7 Copy the configuration to 121

[root@meboth-master bigdatabyjurf]# scp -r hbase-1.4.9/ root@meboth-slaver01:/opt/bigdatabyjurf/

4.8 Copy the configuration to 122

[root@meboth-master bigdatabyjurf]# scp -r hbase-1.4.9/ root@meboth-slaver02:/opt/bigdatabyjurf/
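With more workers, the two copy steps are easier to keep consistent as a loop. This is a minimal sketch, assuming the same target directory on every host and passwordless SSH for root; copy_hbase and DRY_RUN are hypothetical names introduced for illustration.

```shell
# Sketch: copy the configured HBase directory to each listed host.
# DRY_RUN=1 prints the scp commands without running them.
copy_hbase() {
  src="$1"
  shift
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src root@$host:/opt/bigdatabyjurf/"
    else
      scp -r "$src" "root@$host:/opt/bigdatabyjurf/" || return 1
    fi
  done
}

# Usage (from /opt/bigdatabyjurf on the master):
#   copy_hbase hbase-1.4.9/ meboth-slaver01 meboth-slaver02
```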

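4.9 Start HBase from the master node (120)

1. The machine on which you run the start script becomes the active HMaster.

2. HRegionServer processes are started on the hosts listed in the regionservers file.

3. Backup HMasters are started on the hosts listed in the backup-masters file.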

[root@meboth-master hbase-1.4.9]# bin/start-hbase.sh

running master, logging to /opt/bigdatabyjurf/hbase-1.4.9/bin/../logs/hbase-root-master-meboth-master.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

meboth-slaver01: running regionserver, logging to /opt/bigdatabyjurf/hbase-1.4.9/bin/../logs/hbase-root-regionserver-meboth-slaver01.out

meboth-slaver02: running regionserver, logging to /opt/bigdatabyjurf/hbase-1.4.9/bin/../logs/hbase-root-regionserver-meboth-slaver02.out

meboth-slaver01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

meboth-slaver01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

meboth-slaver02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

meboth-slaver02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

#localhost: ssh: Could not resolve hostname #localhost: Name or service not known

meboth-slaver01: running master, logging to /opt/bigdatabyjurf/hbase-1.4.9/bin/../logs/hbase-root-master-meboth-slaver01.out

meboth-slaver01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

meboth-slaver01: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Starting HBase on 120 is enough; there is no need to run the start command again on the worker nodes 121 and 122.

# Enter the HBase shell
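To confirm that the expected daemons came up, the output of jps can be inspected on each node. The helper below is a hypothetical sketch (has_daemon is not an HBase tool); it reads jps output on stdin and succeeds when the given class name is listed. HMaster and HRegionServer are the process names jps reports for HBase.

```shell
# Sketch: succeed if the jps output on stdin lists the given daemon.
# jps prints one "PID ClassName" pair per line.
has_daemon() {
  awk -v want="$1" '$2 == want { found = 1 } END { exit !found }'
}

# Usage:
#   jps | has_daemon HMaster && echo "HMaster is running"
#   ssh root@meboth-slaver02 jps | has_daemon HRegionServer
```

The ssh form assumes jps is on the PATH of non-interactive shells on the remote host, which may not hold if the JDK is only added in /etc/profile.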

[root@meboth-master hbase-1.4.9]# bin/hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/bigdatabyjurf/hbase-1.4.9/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdatabyjurf/hadoop-2.7.6/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

Version 1.4.9, rd625b212e46d01cb17db9ac2e9e927fdb201afa1, Wed Dec 5 11:54:10 PST 2018

hbase(main):001:0>
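Once the prompt appears, a quick write/read round trip shows that the master, region servers and HDFS are working together. The sketch below only generates standard HBase shell commands; the function name, the table name smoke_test and the column family cf are made up for illustration.

```shell
# Sketch: emit HBase shell commands that create, write, read and drop
# a throwaway table. The table name defaults to smoke_test.
smoke_test_commands() {
  table="${1:-smoke_test}"
  cat <<EOF
status
create '$table', 'cf'
put '$table', 'row1', 'cf:a', 'value1'
get '$table', 'row1'
scan '$table'
disable '$table'
drop '$table'
EOF
}

# Usage (on a node where bin/hbase is available):
#   smoke_test_commands | bin/hbase shell -n
```

The -n flag runs the shell in non-interactive mode, so a failing command ends the run instead of leaving the prompt open.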

4.10 Access the web UI

# Master node

http://meboth-master:16010/master-status

# Secondary node (backup master)

http://meboth-slaver01:16010/master-status
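The same pages can be probed from a terminal, which is handy on servers without a browser. This is a sketch under assumptions: ui_url and check_ui are hypothetical helpers, curl must be installed, and a 200 response is taken to mean the UI is up.

```shell
# Sketch: build the master status URL for a host (default port 16010).
ui_url() {
  echo "http://$1:${2:-16010}/master-status"
}

# Succeed when the status page answers with HTTP 200 within 5 seconds.
check_ui() {
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$(ui_url "$@")")"
  [ "$code" = "200" ]
}

# Usage:
#   check_ui meboth-master && echo "master UI is up"
#   check_ui meboth-slaver01 && echo "backup master UI is up"
```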

4.11 Shutdown steps

4.11.1 Stop HBase

[root@meboth-master hbase-1.4.9]# stop-hbase.sh

stopping hbase................

[root@meboth-master hbase-1.4.9]#

4.11.2 Stop YARN

[root@meboth-slaver01 hbase-1.4.9]# stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

meboth-master: no nodemanager to stop

meboth-slaver01: no nodemanager to stop

localhost: cat: /tmp/yarn-root-nodemanager.pid: No such file or directory

localhost: no nodemanager to stop

meboth-slaver02: no nodemanager to stop

no proxyserver to stop

[root@meboth-slaver01 hbase-1.4.9]#

4.11.3 Stop HDFS

[root@meboth-master hbase-1.4.9]# stop-dfs.sh

Stopping namenodes on [meboth-master]

meboth-master: stopping namenode

localhost: stopping datanode

meboth-master: stopping datanode

meboth-slaver02: stopping datanode

meboth-slaver01: stopping datanode

Stopping secondary namenodes [meboth-slaver01]

meboth-slaver01: stopping secondarynamenode

[root@meboth-master hbase-1.4.9]# jps

4.11.4 Stop ZooKeeper

[root@meboth-master hbase-1.4.9]# zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /opt/bigdatabyjurf/zookeeper-3.4.14/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

[root@meboth-master hbase-1.4.9]# jps

4.12 Starting again after shutdown

Start ZooKeeper first, then HDFS, and then HBase.
