[ARM] Accelerate computing on Android

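A summary of a series of study notes, surveying current trends.

I. Optimization strategies

From: Accelerating code on Android

1. OpenCL optimization (GPU)

After a successful build, the speedup on Android was not very noticeable; we are still investigating why.

- Check whether the phone supports OpenCL.

- Google does not seem keen on OpenCL (it is not part of the official NDK), so you have to set it up yourself.

More details: Android OpenCL test program, which uses dlopen to load the libOpenCL.so library dynamically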
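The dlopen check mentioned above can be sketched as follows. This is a minimal sketch, not the referenced test program: the helper names `library_exports` and `find_opencl_library` are hypothetical, and the candidate paths are assumptions, since vendors place the driver in different directories. Only `clGetPlatformIDs` is a real OpenCL entry point.

```cpp
#include <dlfcn.h>   // dlopen, dlsym, dlclose
#include <cassert>
#include <string>
#include <vector>

// Returns true if the library at `path` can be loaded and exports `symbol`.
bool library_exports(const char* path, const char* symbol) {
    assert(path != nullptr && symbol != nullptr);
    void* handle = dlopen(path, RTLD_LAZY | RTLD_LOCAL);
    if (handle == nullptr) return false;          // not present or not loadable
    const bool found = dlsym(handle, symbol) != nullptr;
    dlclose(handle);
    return found;
}

// Tries a few candidate locations (assumed, device dependent) and returns
// the first one that exposes clGetPlatformIDs, or an empty string.
std::string find_opencl_library() {
    const std::vector<std::string> candidates = {
        "libOpenCL.so",
        "/system/vendor/lib64/libOpenCL.so",
        "/system/vendor/lib/libOpenCL.so",
        "/system/lib64/libOpenCL.so",
        "/system/lib/libOpenCL.so",
    };
    for (const std::string& path : candidates) {
        if (library_exports(path.c_str(), "clGetPlatformIDs")) return path;
    }
    return "";
}
```

An empty result from `find_opencl_library()` means the app should fall back to a CPU path such as NEON.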

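2. NEON instruction set optimization (SIMD parallelism)

NEON acceleration is currently the most frequently recommended approach on Android. Introductory articles are easy to find online.

There are two ways to do it:

(1) Use the NEON intrinsics, which wrap the instructions as C functions. They are widely documented and C-like in style, so they are easy to pick up.

(2) Write assembly that works on the registers directly.

The first approach is quick to learn and gives a clearly visible speedup.

The second takes more effort to write, but the speedup can be dramatic, provided you know the assembly instructions well enough. Neither approach, however, can speed up calls into OpenCV functions.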
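As an illustration of approach (1), here is a minimal sketch that adds two float arrays four lanes at a time. The function name `add_f32` is hypothetical. The intrinsics `vld1q_f32`, `vaddq_f32` and `vst1q_f32` come from `arm_neon.h`; the `__ARM_NEON` guard is there so the same file still builds, with the scalar loop only, on targets without NEON.

```cpp
#include <cassert>
#include <cstddef>
#if defined(__ARM_NEON)
#include <arm_neon.h>
#endif

// out[i] = a[i] + b[i] for i in [0, n).
void add_f32(const float* a, const float* b, float* out, std::size_t n) {
    assert(a != nullptr && b != nullptr && out != nullptr);
    std::size_t i = 0;
#if defined(__ARM_NEON)
    // Process four floats per iteration in 128-bit NEON registers.
    for (; i + 4 <= n; i += 4) {
        const float32x4_t va = vld1q_f32(a + i);
        const float32x4_t vb = vld1q_f32(b + i);
        vst1q_f32(out + i, vaddq_f32(va, vb));
    }
#endif
    // Scalar tail (and the whole loop when NEON is unavailable).
    for (; i < n; ++i) {
        out[i] = a[i] + b[i];
    }
}
```

The scalar tail handles lengths that are not a multiple of four, which is easy to forget when converting a loop to intrinsics.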

II. Using NEON with the NDK

Ref: ARM and NEON instructions

Ref: Optimizing C Code with Neon Intrinsics

Ref: A Software Library for Computer Vision and Machine Learning

- Build in NEON mode

Build for Android: https://arm-software.github.io/ComputeLibrary/v19.11.1/index.xhtml#S3_3_android

- Integrate into the project

Related issue: how to build for android #300

Did you manage to use ACL in your project?

There shouldn't be anything different from other libraries (Except that you might need to link against OpenCL if you're using it, in which case it will not work on Android O and after).

It means that because of some added security now applications can only link against system libraries that have been explicitly whitelisted by the manufacturer.

Therefore, even if OpenCL is available on your platform, you're unlikely to be able to use it in an App.
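One way to see whether a device's manufacturer has whitelisted OpenCL is to look for `libOpenCL.so` in the vendor's public library list. The sketch below assumes that list is `/vendor/etc/public.libraries.txt`, one library name per line with `#` comments; the quoted reply does not name the file, so treat the path and format as assumptions. The function names are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <istream>
#include <sstream>
#include <string>

// Returns true if `lib_name` is the first token of any non-comment line.
bool is_listed(std::istream& in, const std::string& lib_name) {
    assert(!lib_name.empty());
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream tokens(line);
        std::string first;
        if (!(tokens >> first)) continue;   // blank line
        if (first[0] == '#') continue;      // comment line
        if (first == lib_name) return true; // trailing tokens are ignored
    }
    return false;
}

// Checks the assumed vendor list; a missing file counts as "not listed".
bool opencl_is_whitelisted(
        const std::string& list_path = "/vendor/etc/public.libraries.txt") {
    std::ifstream file(list_path);
    return file.is_open() && is_listed(file, "libOpenCL.so");
}
```

If `opencl_is_whitelisted()` returns false, linking the app against OpenCL is likely to fail at load time even when the driver exists on the device.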

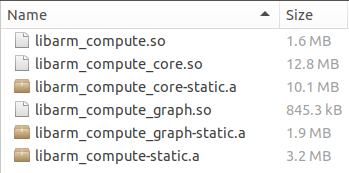

Related issue: How to use the Binaries release (android version) ? #434

Download the binary release directly.

    .
    ├── android-arm64-v8a-cl
    ├── android-arm64-v8a-cl-asserts
    ├── android-arm64-v8a-cl-debug
    ├── android-arm64-v8a-neon
    │   ├── libarm_compute_core.so
    │   ├── libarm_compute_core-static.a
    │   ├── libarm_compute_graph.so
    │   ├── libarm_compute_graph-static.a
    │   ├── libarm_compute.so
    │   └── libarm_compute-static.a
    ├── android-arm64-v8a-neon-asserts
    ├── android-arm64-v8a-neon-cl
    ├── android-arm64-v8a-neon-cl-asserts
    ├── android-arm64-v8a-neon-cl-debug
    ├── android-arm64-v8a-neon-debug
    ├── android-armv7a-cl
    ├── android-armv7a-cl-asserts
    ├── android-armv7a-cl-debug
    ├── android-armv7a-neon
    ├── android-armv7a-neon-asserts
    ├── android-armv7a-neon-cl
    ├── android-armv7a-neon-cl-asserts
    ├── android-armv7a-neon-cl-debug
    └── android-armv7a-neon-debug

Ref: Arm NN SDK

Integrate libarm_compute.so, libarm_compute_core.so and libarm_compute_graph.so into the Android project.

To use the Compute Library on Android, there are a few steps to follow. Before presenting them, I would suggest using the static libraries (i.e. libarm_compute-static.a) rather than the dynamic ones, and using the library with NEON acceleration only, as OpenCL requires extra steps. The necessary steps are listed below. Hope this helps.

Step1: Add the pre-built arm compute library to CMakeLists.txt, along with its include directory. You could have something like this:

    # Add includes for arm_compute
    include_directories(${PATH_ARM_COMPUTE}/include)

    # Add prebuilt library for arm_compute
    add_library( lib_arm_compute STATIC IMPORTED )
    set_target_properties(lib_arm_compute PROPERTIES IMPORTED_LOCATION
        ${PATH_ARM_COMPUTE}/libs/${ANDROID_ABI}/libarm_compute-static.a)
    ...
    target_link_libraries( native-lib lib_arm_compute ${log-lib} )

where PATH_ARM_COMPUTE is the path to your arm_compute folder.

Step2: Add the necessary includes to your .cpp file located in the JNI folder:

    #include "arm_compute/runtime/NEON/NEFunctions.h"
    #include "arm_compute/runtime/CPP/CPPScheduler.h"

Step3: Write your example in the Java_com_chenleiblogs_mlopencl_ml_1opencl_MainActivity_stringFromJNI() function. The arm compute library ships a few examples that could be used (examples/ folder). Have a look, for instance, at the neon_convolution example. This should be a good example to integrate into your app.
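The long function name in Step3 follows the JNI naming convention: `Java_`, then the fully qualified class name with package separators turned into `_`, then `_` and the method name, with any literal underscore escaped as `_1`. The sketch below rebuilds that name. The helpers `jni_mangle` and `jni_symbol` are hypothetical, the class name `com.chenleiblogs.mlopencl.ml_opencl.MainActivity` is inferred from the symbol above, and only plain ASCII letters, digits and underscores are handled.

```cpp
#include <cassert>
#include <string>

// Escapes a Java name for use in a JNI symbol (ASCII subset only):
// '_' becomes "_1", and the separators '.' and '/' become '_'.
std::string jni_mangle(const std::string& name) {
    std::string out;
    for (char c : name) {
        if (c == '_') {
            out += "_1";
        } else if (c == '.' || c == '/') {
            out += '_';
        } else {
            out += c;
        }
    }
    return out;
}

// Builds the native symbol for a non-overloaded native method.
std::string jni_symbol(const std::string& class_name,
                       const std::string& method) {
    assert(!class_name.empty() && !method.empty());
    return "Java_" + jni_mangle(class_name) + "_" + jni_mangle(method);
}
```

This explains the `ml_1opencl` segment: the package component is `ml_opencl`, and its underscore is escaped so it cannot be confused with a separator.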

/* implement */
