C#,图像二值化(09)——全局阈值的最大熵算法(Maximum Entropy Algorithm)与源程序

The Max Entropy classifier is a probabilistic classifier which belongs to the class of exponential models. Unlike the Naive Bayes classifier that we discussed in the previous article, the Max Entropy does not assume that the features are conditionally independent of each other.

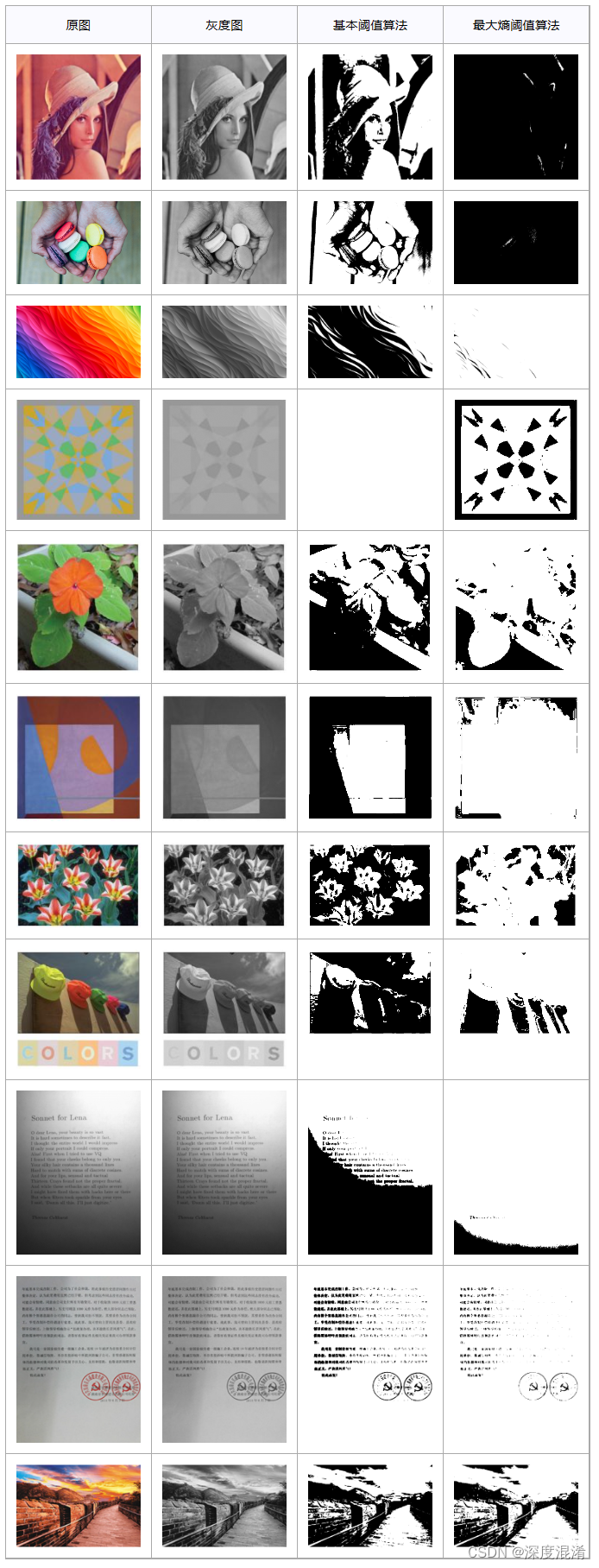

最大熵阈值分割法和OTSU算法类似,假设将图像分为背景和前景两个部分。 熵代表信息量,图像信息量越大,熵就越大,最大熵算法就是找出一个最佳阈值使得背景与前景两个部分熵之和最大。 直方图每个矩形框的数值描述的是图像中相应灰度值的频率。

改编自:

二值算法综述请阅读:

支持函数请阅读:

using System;

using System.Linq;

using System.Text;

using System.Drawing;

using System.Collections;

using System.Collections.Generic;

using System.Drawing.Imaging;

namespace Legalsoft.Truffer.ImageTools

{

public static partial class BinarizationHelper

{

#region 灰度图像二值化 全局算法 最大熵阈值

/// <summary>

/// 最大熵阈值分割

/// 计算当前位置的能量熵

/// https://blog.csdn.net/xw20084898/article/details/17564957

/// </summary>

/// <param name="histogram"></param>

/// <param name="cur_threshold"></param>

/// <param name="state"></param>

/// <returns></returns>

private static double Current_Entropy(int[] histogram, int cur_threshold, bool state)

{

int start;

int end;

double cur_entropy = 0.0;

if (state == false)

{

start = 0;

end = cur_threshold;

}

else

{

start = cur_threshold;

end = 256;

}

int sum = Histogram_Sum(histogram);

for (int j = start; j < end; j++)

{

if (histogram[j] == 0)

{

continue;

}

double percentage = (double)histogram[j] / (double)sum;

cur_entropy += -percentage * Math.Log(percentage);

}

return cur_entropy;

}

/// <summary>

/// 最大熵阈值分割算法

/// 寻找最大熵阈值并分割

/// </summary>

/// <param name="data"></param>

/// <returns></returns>

private static int Found_Maxium_Entropy(byte[,] data)

{

int[] histogram = Gray_Histogram(data);

double maxentropy = -1.0;

int max_index = -1;

for (int i = 0; i < histogram.Length; i++)

{

double cur_entropy = Current_Entropy(histogram, i, true) + Current_Entropy(histogram, i, false);

if (cur_entropy > maxentropy)

{

maxentropy = cur_entropy;

max_index = i;

}

}

return max_index;

}

public static void Maxium_Entropy_Algorithm(byte[,] data)

{

int threshold = Found_Maxium_Entropy(data);

Threshold_Algorithm(data, threshold);

}

#endregion

}

}

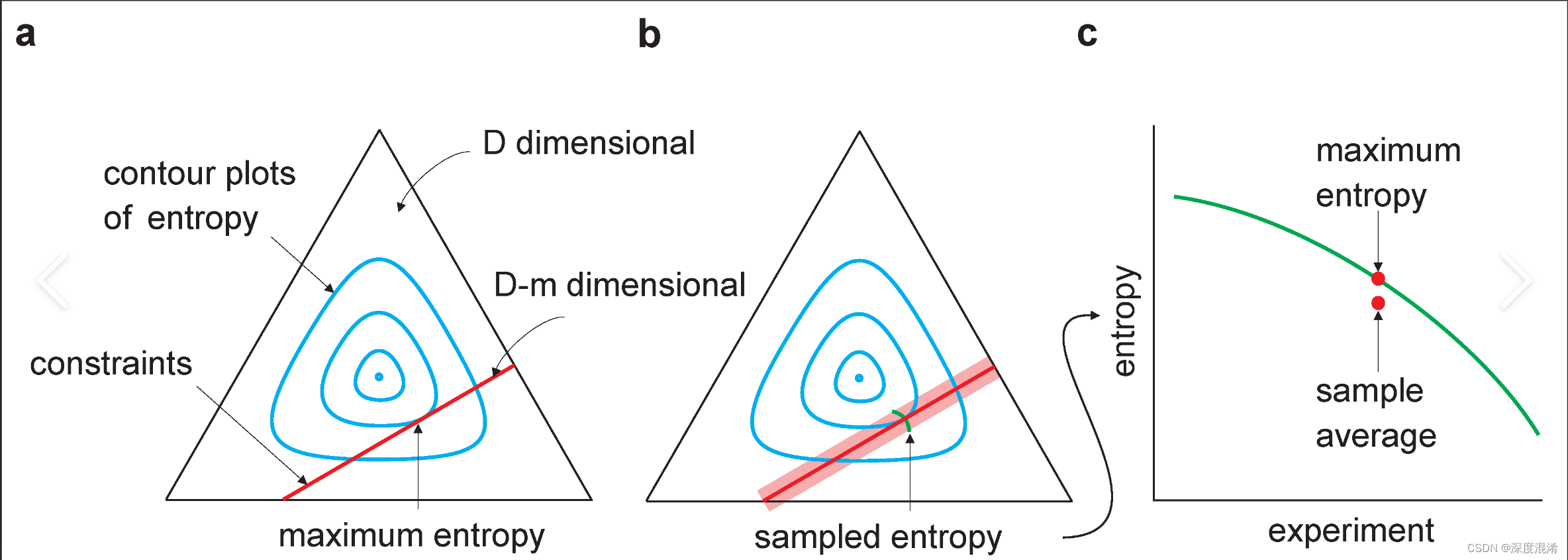

What is the Principle of Maximum Entropy?

The principle of maximum entropy is a model creation rule that requires selecting the most unpredictable (maximum entropy) prior assumption if only a single parameter is known about a probability distribution. The goal is to maximize “uniformitiveness,” or uncertainty when making a prior probability assumption so that subjective bias is minimized in the model’s results.

For example, if only the mean of a certain parameter is known (the average outcome over long-term trials), then a researcher could use almost any probability distribution to build the model. They might be tempted to choose a probability function like Normal distribution, since knowing the mean first lets them fill in more variables in the prior assumption. However, under the maximum entropy principle, the researcher should go with whatever probability distribution they know the least about already.

Common Probability Distribution Parameterizations in Machine Learning:

While all probability models follow either Bayesian or Frequentist inference, they can yield vastly different results depending upon what specific parameter distribution algorithm is employed.

Bernoulli distribution – one parameter

Beta distribution – multiple parameters

Binomial distribution – two parameters

Exponential distribution – multiple parameters

Gamma distribution – multiple parameters

Geometric distribution – one parameter

Gaussian (normal) distribution – multiple parameters

Lognormal distribution – one parameter

Negative binomial distribution – two parameters

Poisson distribution – one parameter

相关文章

- C#-CHTTPDownload

- C# winform 窗体设置固定大小不可被改变

- C# Math.Round()的银行家算法

- C#7.2——编写安全高效的C#代码 c# 中模拟一个模式匹配及匹配值抽取 走进 LINQ 的世界 移除Excel工作表密码保护小工具含C#源代码 腾讯QQ会员中心g_tk32算法【C#版】

- 利用反射快速给Model实体赋值 使用 Task 简化异步编程 Guid ToString 格式知多少?(GUID 格式) Parallel Programming-实现并行操作的流水线(生产者、消费者) c# 无损高质量压缩图片代码 8种主要排序算法的C#实现 (一) 8种主要排序算法的C#实现 (二)

- C# 插件热插拔 .NET:何时应该 “包装异常”? log4.net 自定义日志文件名称

- C#学习记录——如何使VisualStudio开发环境全屏显示及相关快捷方式汇总

- C#,图像二值化(24)——局部阈值算法的NiBlack算法及源程序

- C#,图像二值化(16)——全局阈值的力矩保持算法(Moment-proserving Thresholding)及其源代码

- C#,图像二值化(12)——基于谷底最小值的全局阈值算法(Valley-Minium Thresholding)与源代码

- C#,图像二值化(08)——全局阈值的优化算法(Optimization Thresholding)及其源代码

- C#,图像二值化(06)——全局阈值的大津算法(OTSU Thresholding)及其源代码

- C#,图像二值化(04)——全局阈值的凯勒算法(Kittler Thresholding)及源程序

- C#,图论与图算法,哈密顿环问题(Hamiltonian Cycle problem)的算法与源程序

- C#,图论与图算法,任意一对节点之间最短距离的弗洛伊德·沃肖尔(Floyd Warshall)算法与源程序

- C#,图论与图算法,有向图(Graph)之环(Cycle)判断的颜色算法与源代码

- C#,深度好文,精致好码,文本对比(Text Compare)算法与源代码

- C#,数值计算,矩阵的行列式(Determinant)、伴随矩阵(Adjoint)与逆矩阵(Inverse)的算法与源代码

- C#,超级阿格里数字(超级丑数,Super Ugly Number)的算法与源代码

- C#,煎饼排序问题(Pancake Sorting Problem)算法与源代码

- C#,字符串匹配(模式搜索)BF(Brute Force)暴力算法的源代码

- C#,数值计算(Numerical Recipes in C#),线性代数方程的求解,对角和带对角方程组(Tridiagonal and Band-Diagonal)求解算法源程序

- C#,图片分层(Layer Bitmap)绘制,反色、高斯模糊及凹凸贴图等处理的高速算法与源程序

- C# Remoting 简单实现

- C#/Asp.Net 获取各种Url的方法

- C#的StackExchange.Redis实现订阅分发模式