C#, Image Binarization (08): Global Threshold Optimization Algorithm (Optimization Thresholding) and Its Source Code

1. Global Threshold Algorithm

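This is one of the iterative optimization algorithms based on the gray-level histogram. Starting from the mean gray level of the whole image, it splits the pixels into two classes at the current threshold, computes the mean gray level of each class, and moves the threshold to the midpoint of the two class means, repeating until the threshold no longer changes.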
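Written out as formulas, the iteration reads as follows. This is a transcription of what `Basic_Global_Threshold` in the source code computes, with h(i) the number of pixels of gray level i (0 to 255) and integer truncation matching the `(int)` casts; an empty class is given a mean of 0, as in the code.

$$
\mu_1(T) = \frac{\sum_{i=0}^{T} i\,h(i)}{\sum_{i=0}^{T} h(i)}, \qquad
\mu_2(T) = \frac{\sum_{i=T+1}^{255} i\,h(i)}{\sum_{i=T+1}^{255} h(i)}
$$

$$
T_0 = \left\lfloor \frac{\sum_{i=0}^{255} i\,h(i)}{\sum_{i=0}^{255} h(i)} \right\rfloor, \qquad
T_{k+1} = \left\lfloor \frac{\mu_1(T_k) + \mu_2(T_k)}{2} \right\rfloor
$$

The iteration stops as soon as T_{k+1} = T_k, and that value is returned as the threshold.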


For an overview of binarization algorithms, please read:

For the support functions, please read:
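The linked article is not reproduced in this post. Judging only from how `Basic_Global_Threshold` and `Global_Threshold_Algorithm` call them, the three support functions plausibly behave like the hypothetical sketch below. The class name `SupportFunctionsSketch`, the meaning of the second argument of `Histogram_Sum` (a power of the gray level), and the choice that pixels above the threshold become white are all assumptions, not the author's definitions.

```csharp
using System;

// Hypothetical stand-ins for the author's support functions.
// The real implementations are in a separate article and may differ.
public static class SupportFunctionsSketch
{
    // Assumed: 256-bin histogram of an 8-bit grayscale image.
    public static int[] Gray_Histogram(byte[,] data)
    {
        int[] histogram = new int[256];
        // foreach over a 2-D array visits every element
        foreach (byte v in data)
        {
            histogram[v]++;
        }
        return histogram;
    }

    // Assumed: sum over i of i^power * histogram[i].
    // power = 0 gives the pixel count, power = 1 the total gray-level sum.
    public static double Histogram_Sum(int[] histogram, int power = 0)
    {
        double sum = 0;
        for (int i = 0; i < histogram.Length; i++)
        {
            sum += Math.Pow(i, power) * histogram[i];
        }
        return sum;
    }

    // Assumed: pixels above the threshold become white (255), the rest black (0),
    // consistent with the [0, T] / (T, 255] split used by the threshold search.
    public static void Threshold_Algorithm(byte[,] data, int threshold)
    {
        int height = data.GetLength(0);
        int width = data.GetLength(1);
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                data[y, x] = (byte)(data[y, x] > threshold ? 255 : 0);
            }
        }
    }
}
```

With these stand-ins, `Histogram_Sum(histogram)` and `Histogram_Sum(histogram, 1)` yield the pixel count t and the gray-level sum u that the threshold search starts from.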

2. Source Code of the Global Threshold Algorithm

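```csharp
using System;
using System.Linq;
using System.Text;
using System.Drawing;
using System.Collections;
using System.Collections.Generic;
using System.Runtime.InteropServices;
using System.Drawing.Imaging;

namespace Legalsoft.Truffer.ImageTools
{
    public static partial class BinarizationHelper
    {
        #region Grayscale image binarization: global algorithms: global threshold algorithm

        /// <summary>
        /// Basic global threshold method
        /// https://blog.csdn.net/xw20084898/article/details/17564957
        /// </summary>
        /// <param name="histogram">Gray-level histogram (one bin per gray level)</param>
        /// <returns>The threshold at which the iteration converges</returns>
        private static int Basic_Global_Threshold(int[] histogram)
        {
            // Total pixel count and total gray-level sum
            double t = Histogram_Sum(histogram);
            double u = Histogram_Sum(histogram, 1);

            // Initial threshold: the mean gray level of the whole image
            int k2 = (int)(u / t);
            int k1;
            do
            {
                k1 = k2;

                // Pixel count and gray-level sum of the lower class [0, k1]
                double t1 = 0;
                double u1 = 0;
                for (int i = 0; i <= k1; i++)
                {
                    t1 += histogram[i];
                    u1 += i * histogram[i];
                }

                // The upper class (k1, 255] is the remainder
                // (kept as double; the original int cast was unnecessary)
                double t2 = t - t1;
                double u2 = u - u1;

                // Class means; an empty class gets a mean of 0
                u1 = (t1 != 0) ? u1 / t1 : 0;
                u2 = (t2 != 0) ? u2 / t2 : 0;

                // New threshold: midpoint of the two class means
                k2 = (int)((u1 + u2) / 2);
            } while (k1 != k2);

            return k1;
        }

        /// <summary>
        /// Binarizes a grayscale image with the basic global threshold
        /// </summary>
        /// <param name="data">Grayscale pixel data; Threshold_Algorithm writes the result back into it</param>
        public static void Global_Threshold_Algorithm(byte[,] data)
        {
            int[] histogram = Gray_Histogram(data);
            int threshold = Basic_Global_Threshold(histogram);
            Threshold_Algorithm(data, threshold);
        }

        #endregion
    }
}
```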
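For readers who want to try the method without the support functions, here is a self-contained sketch of the same iteration. The class `IterativeThresholdSketch`, its method names, and the `maxIterations` cap are illustrative assumptions; the cap is a defensive addition that the original `do ... while` loop does not have, meant to stop the loop if the integer threshold ever alternated between two values.

```csharp
using System;

// Self-contained sketch of the iterative global threshold.
// Names and the iteration cap are illustrative, not from the original post.
public static class IterativeThresholdSketch
{
    // Iterates T <- (int)((mean of [0, T] + mean of (T, 255]) / 2), starting
    // from the global mean, until T stops changing or maxIterations is reached.
    public static int FindThreshold(int[] histogram, int maxIterations = 256)
    {
        double count = 0, sum = 0;
        for (int i = 0; i < histogram.Length; i++)
        {
            count += histogram[i];
            sum += (double)i * histogram[i];
        }
        if (count == 0) return 0; // empty image: nothing to split

        int threshold = (int)(sum / count); // start from the global mean
        for (int iter = 0; iter < maxIterations; iter++)
        {
            double c1 = 0, s1 = 0;
            for (int i = 0; i <= threshold; i++)
            {
                c1 += histogram[i];
                s1 += (double)i * histogram[i];
            }
            double c2 = count - c1, s2 = sum - s1;
            double m1 = c1 > 0 ? s1 / c1 : 0; // empty class -> mean 0, as in the original
            double m2 = c2 > 0 ? s2 / c2 : 0;
            int next = (int)((m1 + m2) / 2);
            if (next == threshold) break; // converged
            threshold = next;
        }
        return threshold;
    }

    // Builds the histogram, finds the threshold, and binarizes in place
    // (above the threshold -> 255, otherwise 0; an assumed convention).
    public static void Binarize(byte[,] data)
    {
        int[] histogram = new int[256];
        foreach (byte v in data) histogram[v]++;
        int threshold = FindThreshold(histogram);
        for (int y = 0; y < data.GetLength(0); y++)
            for (int x = 0; x < data.GetLength(1); x++)
                data[y, x] = (byte)(data[y, x] > threshold ? 255 : 0);
    }
}
```

As a quick check, a histogram with 100 pixels at gray level 50 and 100 pixels at gray level 200 starts at the global mean 125; the two class means are 50 and 200, their midpoint is again 125, so `FindThreshold` returns 125 after one pass.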

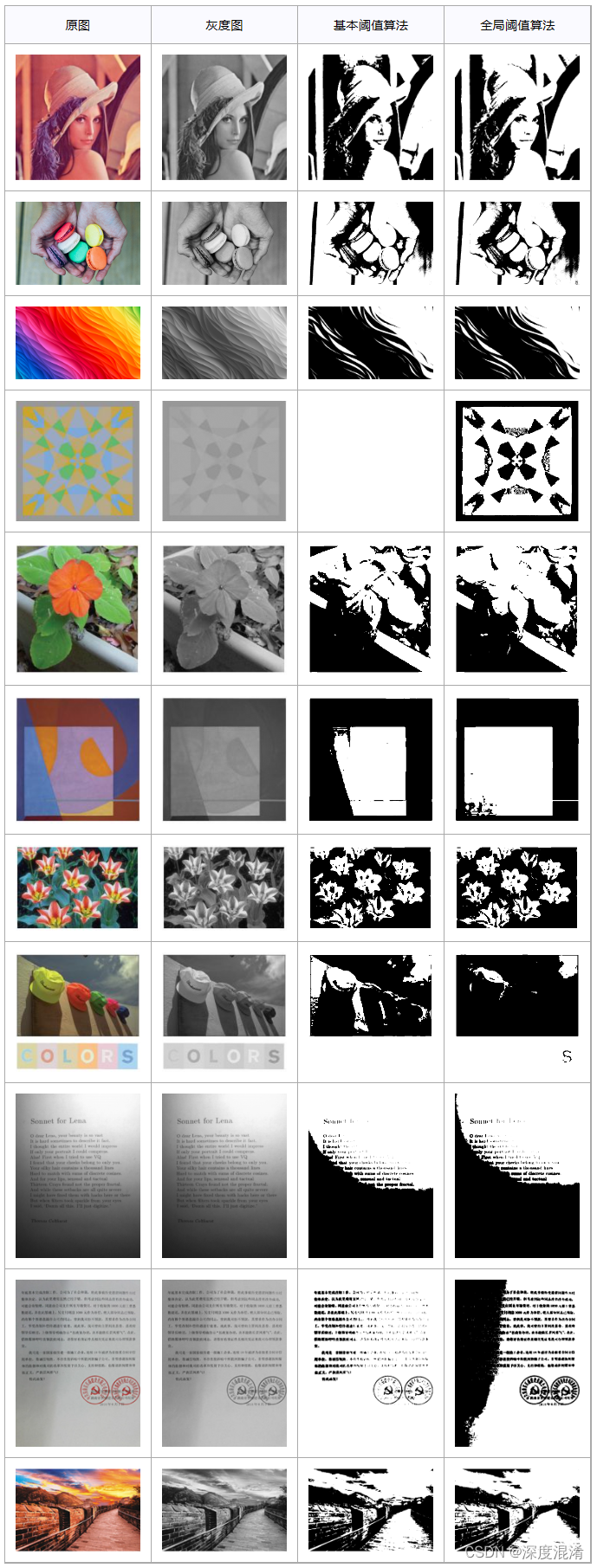

3. Results of the Global Threshold Algorithm

