Machine Learning Notes - Fully Convolutional Network (FCN) Semantic Segmentation with TensorFlow 2.0

Posted: 2023-09-14 09:01:36

Main reference

https://blog.csdn.net/yx123919804/article/details/104811087

Reference for the training and data-handling code

https://blog.csdn.net/bashendixie5/article/details/115795171

Code and dataset download

Link: https://pan.baidu.com/s/17s2uCQJ7OOB0ekmOjL3xEA

Extraction code: d9eq

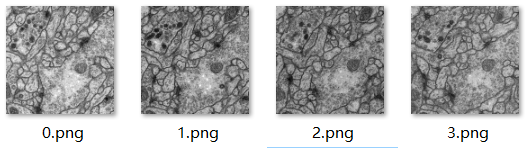

The figure shows the original image and the predicted result. A later test on a custom dataset also gave good results.

model.py - model code

from keras.layers import *

from keras.optimizers import *

from keras.models import *

project_name = "fcn_segment"

channels = 1

std_shape = (256, 256, channels)  # input size; std_shape[0]: img_rows, std_shape[1]: img_cols

# Set this size to match your images. If your images vary in size, set both
# img_rows and img_cols to None. If your Generator pads the image borders
# when loading data, std_shape is the padded size.

img_input = Input(shape = std_shape, name = "input")

conv_1 = Conv2D(32, kernel_size = (3, 3), activation = "relu", padding = "same", name = "conv_1")(img_input)

max_pool_1 = MaxPool2D(pool_size = (2, 2), strides = (2, 2), name = "max_pool_1")(conv_1)

conv_2 = Conv2D(64, kernel_size = (3, 3), activation = "relu", padding = "same", name = "conv_2")(max_pool_1)

max_pool_2 = MaxPool2D(pool_size = (2, 2), strides = (2, 2), name = "max_pool_2")(conv_2)

conv_3 = Conv2D(128, kernel_size = (3, 3), activation = "relu", padding = "same", name = "conv_3")(max_pool_2)

max_pool_3 = MaxPool2D(pool_size = (2, 2), strides = (2, 2), name = "max_pool_3")(conv_3)

conv_4 = Conv2D(256, kernel_size = (3, 3), activation = "relu", padding = "same", name = "conv_4")(max_pool_3)

max_pool_4 = MaxPool2D(pool_size = (2, 2), strides = (2, 2), name = "max_pool_4")(conv_4)

conv_5 = Conv2D(512, kernel_size = (3, 3), activation = "relu", padding = "same", name = "conv_5")(max_pool_4)

max_pool_5 = MaxPool2D(pool_size = (2, 2), strides = (2, 2), name = "max_pool_5")(conv_5)

# Upsample max_pool_5 by 2x with a transposed convolution so it matches the size of max_pool_4

up6 = Conv2DTranspose(256, kernel_size = (3, 3), strides = (2, 2), padding = "same", kernel_initializer = "he_normal",
                      name = "upsamping_6")(max_pool_5)

_16s = add([max_pool_4, up6])

# Upsample _16s by 2x with a transposed convolution so it matches the size of max_pool_3

up_16s = Conv2DTranspose(128, kernel_size = (3, 3), strides = (2, 2), padding = "same", kernel_initializer = "he_normal",
                         name = "Conv2DTranspose_16s")(_16s)

_8s = add([max_pool_3, up_16s])

# Upsampling _8s by 8x restores the input size

up7 = UpSampling2D(size = (8, 8), interpolation = "bilinear", name = "upsamping_7")(_8s)

# The kernel here is also 3 x 3; it can be changed in the same way as for FCN-32s

conv_7 = Conv2D(1, kernel_size = (3, 3), activation = "sigmoid", padding = "same", name = "conv_7")(up7)

model = Model(img_input, conv_7, name = project_name)
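
The comments in the listing say that each transposed convolution brings a feature map back to the size of an earlier pooling output, and that the final 8x upsampling restores the input size. The sketch below checks that arithmetic in plain Python, without TensorFlow. It assumes square inputs, that `MaxPool2D` with its default "valid" padding floors the size when halving, and that `Conv2DTranspose` with stride 2 and "same" padding doubles it. The function names `trace_fcn_shapes` and `round_up_to_multiple` are made up for this illustration.

```python
def trace_fcn_shapes(size):
    """Trace the spatial size through the layers of model.py for a square input."""
    pools = []
    s = size
    for _ in range(5):              # max_pool_1 ... max_pool_5
        s = s // 2                  # 2x2 pooling, stride 2, "valid" padding
        pools.append(s)
    up6 = pools[4] * 2              # transposed conv on max_pool_5
    up_16s = pools[3] * 2           # transposed conv on _16s (same size as max_pool_4)
    output = pools[2] * 8           # bilinear upsampling of _8s (same size as max_pool_3)
    return {
        "pools": pools,
        "add_16s_ok": up6 == pools[3],      # add([max_pool_4, up6]) needs equal sizes
        "add_8s_ok": up_16s == pools[2],    # add([max_pool_3, up_16s]) needs equal sizes
        "output": output,
    }


def round_up_to_multiple(size, multiple=32):
    """Smallest value >= size that is divisible by `multiple`."""
    return ((size + multiple - 1) // multiple) * multiple


print(trace_fcn_shapes(256))
print(trace_fcn_shapes(72))
print(trace_fcn_shapes(100))
print(round_up_to_multiple(100))
```

Under these assumptions a 256-pixel side passes every check. A side of 72 breaks the second `add`, and a side of 100 passes both additions but comes out as 96 instead of 100. Sides that are multiples of 32 pass all three checks, which is one reason to pad images in the generator before feeding them to the network.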

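# `lr` is a deprecated alias in recent Keras releases; `learning_rate` is the current argument name
model.compile(optimizer=Adam(learning_rate=1e-4), loss = "binary_crossentropy", metrics = ["accuracy"])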

model.summary()

data.py - data processing

from __future__ import print_function

from keras.preprocessing.image import ImageDataGenerator

import numpy as np

import os

import glob

import skimage.io as io

import skimage.transform as trans

Sky = [128,128,128]

Building = [128,0,0]

Pole = [192,192,128]

Road = [128,64,128]

Pavement = [60,40,222]

Tree = [128,128,0]

SignSymbol = [192,128,128]

Fence = [64,64,128]

Car = [64,0,128]

Pedestrian = [64,64,0]

Bicyclist = [0,128,192]

Unlabelled = [0,0,0]

COLOR_DICT = np.array([Sky, Building, Pole, Road, Pavement,
                       Tree, SignSymbol, Fence, Car, Pedestrian, Bicyclist, Unlabelled])

def adjustData(img,mask,flag_multi_class,num_class):
    if(flag_multi_class):
        img = img / 255
        mask = mask[:, :, :, 0] if(len(mask.shape) == 4) else mask[:, :, 0]
        new_mask = np.zeros(mask.shape + (num_class,))
        for i in range(num_class):
            #for one pixel in the image, find the class in mask and convert it into one-hot vector
            #index = np.where(mask == i)
            #index_mask = (index[0],index[1],index[2],np.zeros(len(index[0]),dtype = np.int64) + i) if (len(mask.shape) == 4) else (index[0],index[1],np.zeros(len(index[0]),dtype = np.int64) + i)
            #new_mask[index_mask] = 1
            new_mask[mask == i, i] = 1
        new_mask = np.reshape(new_mask, (new_mask.shape[0], new_mask.shape[1]*new_mask.shape[2], new_mask.shape[3])) if flag_multi_class else np.reshape(new_mask, (new_mask.shape[0]*new_mask.shape[1], new_mask.shape[2]))
        mask = new_mask
    elif(np.max(img) > 1):
        img = img / 255
        mask = mask /255
        mask[mask > 0.5] = 1
        mask[mask <= 0.5] = 0
    return (img, mask)
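
To make the two branches of `adjustData` easier to follow, here is a small NumPy sketch on toy arrays. The multi-class part repeats the `new_mask[mask == i, i] = 1` indexing from the function and compares it with an equivalent identity-matrix lookup; the binary part shows the scale-then-threshold step. The array values are invented for illustration and are not taken from the dataset.

```python
import numpy as np

# Hypothetical 2x3 label mask holding class indices 0..2
mask = np.array([[0, 1, 2],
                 [2, 1, 0]])
num_class = 3

# Multi-class branch: one-hot encode along a new last axis
one_hot = np.zeros(mask.shape + (num_class,))
for i in range(num_class):
    one_hot[mask == i, i] = 1       # same indexing as in adjustData

# Equivalent vectorised form: pick rows of an identity matrix
one_hot_alt = np.eye(num_class)[mask]

print(one_hot.shape)
print(np.array_equal(one_hot, one_hot_alt))

# Binary branch: scale 0..255 to 0..1, then threshold at 0.5
gray_mask = np.array([[0, 100, 200, 255]], dtype=np.float64)
binary = (gray_mask / 255 > 0.5).astype(np.float64)
print(binary)
```

The identity-matrix lookup is only an alternative way to write the loop; it requires every mask value to be a valid class index below `num_class`.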

def trainGenerator(batch_size,train_path,image_folder,mask_folder,aug_dict,image_color_mode = "grayscale",
                   mask_color_mode = "grayscale",image_save_prefix="image", mask_save_prefix="mask",
                   flag_multi_class = False,num_class = 2,save_to_dir=None, target_size=(256,256), seed=1):
    '''
    can generate image and mask at the same time
    use the same seed for image_datagen and mask_datagen to ensure the transformation for image and mask is the same
    if you want to visualize the results of generator, set save_to_dir = "your path"
    '''
    image_datagen = ImageDataGenerator(**aug_dict)
    mask_datagen = ImageDataGenerator(**aug_dict)
    image_generator = image_datagen.flow_from_directory(
        train_path,
        classes=[image_folder],
        class_mode=None,
        color_mode=image_color_mode,
        target_size=target_size,
        batch_size=batch_size,
        save_to_dir=save_to_dir,
        save_prefix=image_save_prefix,
        seed=seed)
    mask_generator = mask_datagen.flow_from_directory(
        train_path,
        classes=[mask_folder],
        class_mode=None,
        color_mode=mask_color_mode,
        target_size=target_size,
        batch_size=batch_size,
        save_to_dir=save_to_dir,
        save_prefix=mask_save_prefix,
        seed=seed)
    train_generator = zip(image_generator, mask_generator)
    for (img, mask) in train_generator:
        img, mask = adjustData(img, mask, flag_multi_class, num_class)
        yield (img, mask)
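
The docstring of `trainGenerator` relies on one idea: two generators that share a seed make the same random decisions, so each image and its mask receive the same transformation. The sketch below illustrates that principle with NumPy only. It does not reproduce the internals of `ImageDataGenerator`; the helper `random_flips` and the toy arrays are assumptions made for this example.

```python
import numpy as np


def random_flips(images, seed):
    """Horizontally flip each image with probability 0.5, driven by `seed`."""
    rng = np.random.RandomState(seed)
    out = []
    for img in images:
        if rng.rand() < 0.5:
            img = img[:, ::-1]      # flip along the width axis
        out.append(img)
    return np.stack(out)


# Hypothetical toy data: each mask is derived from its image so alignment can be checked
images = np.arange(4 * 2 * 3).reshape(4, 2, 3)
masks = images % 2

aug_images = random_flips(images, seed=1)
aug_masks = random_flips(masks, seed=1)     # same seed, same flip decisions

# If the flips are aligned, deriving masks from the augmented images gives aug_masks
print(np.array_equal(aug_images % 2, aug_masks))
```

With different seeds the two streams can disagree on which samples to flip, and the image/mask pairs would no longer line up pixel for pixel.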

def testGenerator(test_path, num_image = 30, target_size = (256,256),flag_multi_class = False,as_gray = True):
    for i in range(num_image):
        img = io.imread(os.path.join(test_path, "%d.png"%i), as_gray=as_gray)
        img = img / 255
        img = trans.resize(img, target_size)
        img = np.reshape(img, img.shape+(1,)) if (not flag_multi_class) else img
        img = np.reshape(img, (1,)+img.shape)
        yield img

def geneTrainNpy(image_path, mask_path, flag_multi_class=False, num_class=2, image_prefix="image",mask_prefix = "mask",image_as_gray = True,mask_as_gray = True):
    image_name_arr = glob.glob(os.path.join(image_path, "%s*.png"%image_prefix))
    image_arr = []
    mask_arr = []
    for index, item in enumerate(image_name_arr):
        img = io.imread(item,as_gray=image_as_gray)
        img = np.reshape(img, img.shape + (1,)) if image_as_gray else img
        mask = io.imread(item.replace(image_path,mask_path).replace(image_prefix, mask_prefix),as_gray = mask_as_gray)
        mask = np.reshape(mask, mask.shape + (1,)) if mask_as_gray else mask
        img, mask = adjustData(img, mask, flag_multi_class,num_class)
        image_arr.append(img)
        mask_arr.append(mask)
    image_arr = np.array(image_arr)
    mask_arr = np.array(mask_arr)
    return image_arr, mask_arr

def labelVisualize(num_class, color_dict, img):
    img = img[:, :, 0] if len(img.shape) == 3 else img
    img_out = np.zeros(img.shape + (3,))
    for i in range(num_class):
        img_out[img == i, :] = color_dict[i]
    return img_out / 255
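
`labelVisualize` paints every pixel of class `i` with the colour `color_dict[i]`. The sketch below shows the same mapping on a tiny label map, once with the loop used in the function and once with NumPy fancy indexing. The three-row palette is a made-up subset for illustration; its first two rows reuse the `Sky` and `Building` colours from data.py.

```python
import numpy as np

# Hypothetical 3-class palette (RGB)
palette = np.array([[128, 128, 128],    # Sky
                    [128, 0, 0],        # Building
                    [0, 0, 0]])         # Unlabelled

# Toy label map holding one class index per pixel
labels = np.array([[0, 1],
                   [2, 1]])

# Loop form, as in labelVisualize
img_loop = np.zeros(labels.shape + (3,))
for i in range(len(palette)):
    img_loop[labels == i, :] = palette[i]

# Fancy-indexing form: look up a palette row for every pixel at once
img_fast = palette[labels]

print(np.array_equal(img_loop, img_fast))
print(img_fast[0, 1])
```

Both forms expect a map of class indices. If the network outputs per-class probabilities instead, an `np.argmax(probs, axis=-1)` step would be needed first to obtain such a map.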

def saveResult(save_path,npyfile,flag_multi_class = False,num_class = 2):
    for i,item in enumerate(npyfile):
        # Multi-class: colour the label map; binary: save the single output channel
        img = labelVisualize(num_class, COLOR_DICT, item) if flag_multi_class else item[:, :, 0]
        io.imsave(os.path.join(save_path, "%d_predict.png"%i), img)

main.py - training

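import os

# Set before model.py is imported: importing it builds the network, which may initialize the GPU
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = "true"
#os.environ["CUDA_VISIBLE_DEVICES"] = "0"

from keras.callbacks import ModelCheckpoint  # not provided by the star imports in model.py
from model import *  # builds and compiles the FCN and exposes it as `model`
from data import *

# Augmentation settings shared by the image and mask generators
data_gen_args = dict(rotation_range=0.2,
                     width_shift_range=0.05,
                     height_shift_range=0.05,
                     shear_range=0.05,
                     zoom_range=0.05,
                     horizontal_flip=True,
                     fill_mode='nearest')

myGene = trainGenerator(2,'data/membrane/train','image','label',data_gen_args,save_to_dir = None)

# model.py defines no unet() function, so train the `model` object it exports.
# The checkpoint keeps the weights with the lowest training loss; test.py loads this file.
model_checkpoint = ModelCheckpoint('unet_membrane.hdf5', monitor='loss', verbose=1, save_best_only=True)
model.fit_generator(myGene, steps_per_epoch=2000, epochs=5, callbacks=[model_checkpoint])

test.py - testing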

from data import *
import os
from keras.models import load_model

def test():
    # Load the checkpoint written by main.py
    model = load_model("unet_membrane.hdf5")
    # Yields 0.png ... 4.png from the test folder, one image per batch
    testGene = testGenerator("data/membrane/test", 5)
    results = model.predict_generator(testGene, 5, verbose=1)
    # saveResult does not create the output folder, so make sure it exists
    os.makedirs("data/membrane/result", exist_ok=True)
    saveResult("data/membrane/result", results)

test()
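
In the binary case `saveResult` writes the raw sigmoid output, which is a float map with values between 0 and 1. If a hard black-and-white mask is preferred, one possible post-processing step is to threshold the prediction and convert it to `uint8` before saving. The helper `to_uint8_mask` below is a hypothetical addition and not part of the original code, and the 0.5 threshold is an assumption chosen to mirror the threshold used in `adjustData`.

```python
import numpy as np


def to_uint8_mask(prob_map, threshold=0.5):
    """Convert an (H, W, 1) sigmoid probability map to a 0/255 uint8 mask."""
    binary = prob_map[:, :, 0] > threshold
    return binary.astype(np.uint8) * 255


# Hypothetical 2x2 prediction standing in for one element of `results`
pred = np.array([[[0.1], [0.7]],
                 [[0.5], [0.93]]])
mask = to_uint8_mask(pred)
print(mask)
print(mask.dtype)
```

A mask in this form can be passed to `io.imsave` in place of `item[:, :, 0]`.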
