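Hadoop 2.x Source Code: Build Walkthrough

Recently, several readers have been working on compiling the Hadoop source code. They ran into all kinds of pitfalls along the way, and neither search engines nor technical blogs resolved their particular problems. Having been asked about this many times, I decided to write an article that walks through the details of the build and shows how to approach the problems that come up. Building and packaging from source is a basic task you will face in later business expansion and secondary development.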

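2. Build Preparation

Before compiling the source, we need to prepare the basic environment the build requires. The base environment used for this build is listed below:

Once these components are ready, install them on the operating system. The steps are as follows: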

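2.1 Basic Environment Installation

Installing the JDK, Maven, and Ant is straightforward and is not covered in detail here: unpack each archive, then add the corresponding paths to PATH in /etc/profile. Protobuf, however, has to be compiled before it can be installed, so we first need the build dependencies gcc, gcc-c++, cmake, openssl-devel, and ncurses-devel. Install them with the following commands: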

    yum -y install gcc
    yum -y install gcc-c++
    yum -y install cmake
    yum -y install openssl-devel
    yum -y install ncurses-devel

Verify that GCC installed successfully with the following command:

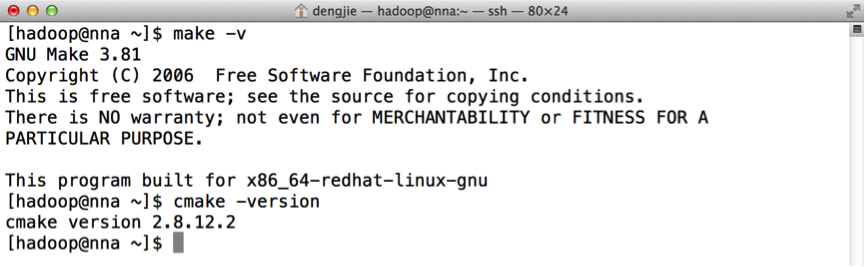

Verify that Make and CMake installed successfully with the following command:
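A minimal sketch of such a check, assuming the tools are expected on PATH. The `check_tool` helper is hypothetical and not from the original article; it only wraps `command -v` and each tool's `--version` flag.

```shell
#!/bin/sh
# check_tool NAME: report whether NAME is on PATH and, if so,
# print the first line of its --version output.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        printf '%s: found (%s)\n' "$1" "$("$1" --version 2>&1 | head -n 1)"
    else
        printf '%s: MISSING\n' "$1"
        return 1
    fi
}

# Check the compiler and build tools installed above.
missing=0
for tool in gcc g++ make cmake; do
    check_tool "$tool" || missing=1
done
echo "missing=$missing"
```

Any tool reported as MISSING should be installed before moving on to the Protobuf build.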

With the environment in place, compile Protobuf with the following commands:

    [hadoop@nna ~]$ cd protobuf-2.5.0/
    [hadoop@nna protobuf-2.5.0]$ ./configure --prefix=/usr/local/protoc
    [hadoop@nna protobuf-2.5.0]$ make
    [hadoop@nna protobuf-2.5.0]$ make install

PS: The install step may report insufficient permissions. If it does, run it with sudo.

Verify that Protobuf installed successfully with the following command:
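A minimal sketch of this verification, assuming Protobuf was installed under the `--prefix` used above, so that the binary lands in /usr/local/protoc/bin. The `PROTOC_HOME` variable and the `protoc_version_ok` helper are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# Assumption: protoc was installed with --prefix=/usr/local/protoc,
# so its binary directory must be added to PATH.
PROTOC_HOME=/usr/local/protoc
export PATH="$PROTOC_HOME/bin:$PATH"

# protoc_version_ok STRING: succeed only if STRING is the version
# line printed by Protobuf 2.5.0.
protoc_version_ok() {
    [ "$1" = "libprotoc 2.5.0" ]
}

if command -v protoc >/dev/null 2>&1; then
    if protoc_version_ok "$(protoc --version 2>&1)"; then
        echo "protoc 2.5.0 is available"
    else
        echo "protoc is on PATH but is not version 2.5.0"
    fi
else
    echo "protoc not found on PATH"
fi
```

To keep the setting across sessions, the same `export PATH` line can go in /etc/profile alongside the JDK, Maven, and Ant entries.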

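Now we move on to the build itself. Many problems can come up during compilation; when they do, analyze carefully what caused them. Some of them can be avoided up front. When building with Maven under the default JVM parameters, the build runs out of heap memory once it reaches the hadoop-hdfs module. The exception is as follows: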

java.lang.OutOfMemoryError: Java heap space

To avoid this, set the following parameter in the environment variables before compiling the Hadoop source:

export MAVEN_OPTS="-Xms256m -Xmx512m"
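A minimal sketch of applying this setting without overwriting a heap limit that is already configured. The `has_max_heap` helper is hypothetical; the heap values are the ones given above.

```shell
#!/bin/sh
# has_max_heap OPTS: succeed if OPTS already contains an explicit -Xmx setting.
has_max_heap() {
    case " $1 " in
        *" -Xmx"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Append the heap settings from the text only when no -Xmx is present,
# keeping any other options the user has already exported.
if ! has_max_heap "${MAVEN_OPTS:-}"; then
    MAVEN_OPTS="${MAVEN_OPTS:+$MAVEN_OPTS }-Xms256m -Xmx512m"
    export MAVEN_OPTS
fi
echo "MAVEN_OPTS=$MAVEN_OPTS"
```

If the OutOfMemoryError still appears with these values, raising `-Xmx` further is the natural next thing to try.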

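Next, go to the Hadoop source tree. I used the Hadoop 2.6 source for this build; building a later version will probably differ slightly. The build commands are as follows:

    [hadoop@nna tar]$ cd hadoop-2.6.0-src
    [hadoop@nna hadoop-2.6.0-src]$ mvn package -DskipTests -Pdist,native

PS: Here I compile directly into a directory. To produce a tar package as well, append the tar parameter: mvn package -DskipTests -Pdist,native -Dtar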
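Because the build runs for a long time, it helps to keep the full output for later analysis. A minimal sketch, assuming it is run from inside hadoop-2.6.0-src; `run_build`, `failed_modules`, and the log file name are hypothetical helpers introduced here, not part of Hadoop or Maven.

```shell
#!/bin/sh
# run_build LOG: run the build command from the text and keep a copy
# of everything Maven prints in LOG.
run_build() {
    mvn package -DskipTests -Pdist,native 2>&1 | tee "$1"
}

# failed_modules LOG: print the reactor-summary lines marked FAILURE.
# The trailing " [" keeps the "[INFO] BUILD FAILURE" banner out of the result.
failed_modules() {
    grep ' FAILURE \[' "$1"
}
```

Calling `run_build build.log` and then `failed_modules build.log` would list any module the reactor summary marks as FAILURE. Maven's `-rf` (`--resume-from`) option can then restart the build from that module instead of from the beginning.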

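During my build, the Tomcat download was incomplete while compiling the KMS module. Hadoop uses apache-tomcat-6.0.41.tar.gz. If an exception occurs in this module, use the following command to check the size of the Tomcat file, which is normally about 6.9M: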

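    [hadoop@nna downloads]$ du -sh *

If the Tomcat package is only a few KB, the download failed. In that case, download it manually into the /home/hadoop/tar/hadoop-2.6.0-src/hadoop-common-project/hadoop-kms/downloads directory. After a successful build, the following output appears: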

    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Summary:
    [INFO]
    [INFO] Apache Hadoop Main ................................. SUCCESS [ 1.162 s]
    [INFO] Apache Hadoop Project POM .......................... SUCCESS [ 0.690 s]
    [INFO] Apache Hadoop Annotations .......................... SUCCESS [ 1.589 s]
    [INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.164 s]
    [INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 1.064 s]
    [INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 2.260 s]
    [INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 1.492 s]
    [INFO] Apache Hadoop Auth ................................. SUCCESS [ 2.233 s]
    [INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 2.102 s]
    [INFO] Apache Hadoop Common ............................... SUCCESS [01:00 min]
    [INFO] Apache Hadoop NFS .................................. SUCCESS [ 3.891 s]
    [INFO] Apache Hadoop KMS .................................. SUCCESS [ 5.872 s]
    [INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.019 s]
    [INFO] Apache Hadoop HDFS ................................. SUCCESS [04:04 min]
    [INFO] Apache Hadoop HttpFS ............................... SUCCESS [01:47 min]
    [INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [04:58 min]
    [INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 2.492 s]
    [INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.020 s]
    [INFO] hadoop-yarn ........................................ SUCCESS [ 0.018 s]
    [INFO] hadoop-yarn-api .................................... SUCCESS [01:05 min]
    [INFO] hadoop-yarn-common ................................. SUCCESS [01:00 min]
    [INFO] hadoop-yarn-server ................................. SUCCESS [ 0.029 s]
    [INFO] hadoop-yarn-server-common .......................... SUCCESS [01:03 min]
    [INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [01:10 min]
    [INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 1.810 s]
    [INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 4.041 s]
    [INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 11.739 s]
    [INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 3.332 s]
    [INFO] hadoop-yarn-client ................................. SUCCESS [ 4.762 s]
    [INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.017 s]
    [INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 1.586 s]
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 1.233 s]
    [INFO] hadoop-yarn-site ................................... SUCCESS [ 0.018 s]
    [INFO] hadoop-yarn-registry ............................... SUCCESS [ 3.270 s]
    [INFO] hadoop-yarn-project ................................ SUCCESS [ 2.164 s]
    [INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.032 s]
    [INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 13.047 s]
    [INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 10.890 s]
    [INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 2.534 s]
    [INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 6.429 s]
    [INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 4.866 s]
    [INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [02:04 min]
    [INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 1.183 s]
    [INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 3.655 s]
    [INFO] hadoop-mapreduce ................................... SUCCESS [ 1.775 s]
    [INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 11.478 s]
    [INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 15.399 s]
    [INFO] Apache Hadoop Archives ............................. SUCCESS [ 1.359 s]
    [INFO] Apache Hadoop Rumen ................................ SUCCESS [ 3.736 s]
    [INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 2.822 s]
    [INFO] Apache Hadoop Data Join ............................ SUCCESS [ 1.791 s]
    [INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 1.350 s]
    [INFO] Apache Hadoop Extras ............................... SUCCESS [ 1.858 s]
    [INFO] Apache Hadoop Pipes ................................ SUCCESS [ 5.805 s]
    [INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 3.061 s]
    [INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [07:14 min]
    [INFO] Apache Hadoop Client ............................... SUCCESS [ 2.986 s]
    [INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 0.053 s]
    [INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 2.917 s]
    [INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 5.702 s]
    [INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.015 s]
    [INFO] Apache Hadoop Distribution ......................... SUCCESS [ 8.587 s]
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 28:25 min
    [INFO] Finished at: 2015-10-22T15:12:10+08:00
    [INFO] Final Memory: 89M/451M
    [INFO] ------------------------------------------------------------------------

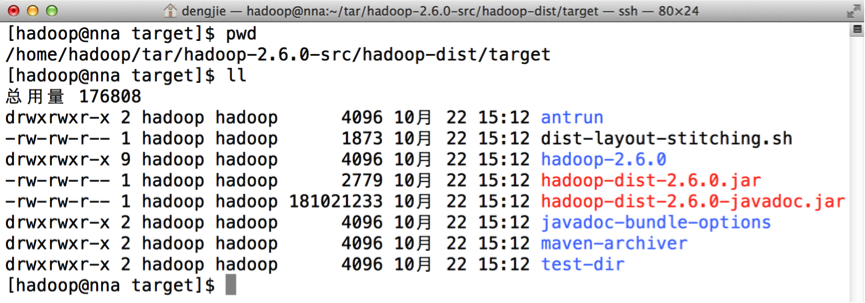

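When the build finishes, the compiled files are generated under the dist directory (hadoop-dist/target) of the Hadoop source tree, as shown in the figure:

The hadoop-2.6.0 entry in the figure is the compiled output.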

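All sorts of problems come up during a build. Search engines can help with some of them, but for others they offer no direct solution. In those cases, analyze the build error messages calmly, form a hypothesis, and then verify it. In short, there are many ways to solve a problem. You are also welcome to post the build problems you run into in the comments below, for later readers to consult.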
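As one concrete instance of checking a hypothesis, the truncated Tomcat download in the KMS module can be detected before starting a build. A minimal sketch: the `tarball_looks_complete` helper and the 1,000,000-byte threshold are assumptions made for illustration (the file is normally about 6.9M, while a failed download is only a few KB), and the path combines the downloads directory and file name given earlier.

```shell
#!/bin/sh
# tarball_looks_complete FILE MIN_BYTES: succeed if FILE exists and
# is at least MIN_BYTES long.
tarball_looks_complete() {
    [ -f "$1" ] || return 1
    # The arithmetic expansion strips any padding some wc builds add.
    size=$(( $(wc -c < "$1") ))
    [ "$size" -ge "$2" ]
}

# Assumed location, built from the directory and file name in the text.
TOMCAT=/home/hadoop/tar/hadoop-2.6.0-src/hadoop-common-project/hadoop-kms/downloads/apache-tomcat-6.0.41.tar.gz

if tarball_looks_complete "$TOMCAT" 1000000; then
    echo "Tomcat archive looks complete"
else
    echo "Tomcat archive is missing or truncated; download it manually"
fi
```

A size check only catches obviously truncated files; running `gzip -t` on the archive would be a stricter test.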

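4. Closing Remarks

That is all for this article. If you have questions while studying this material, you can join the group to discuss them or send me an email, and I will do my best to answer. Let us keep encouraging each other!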
