Deep Learning Study Notes: MemN2N

Time: 2023-09-27 14:27:54

1. Summary

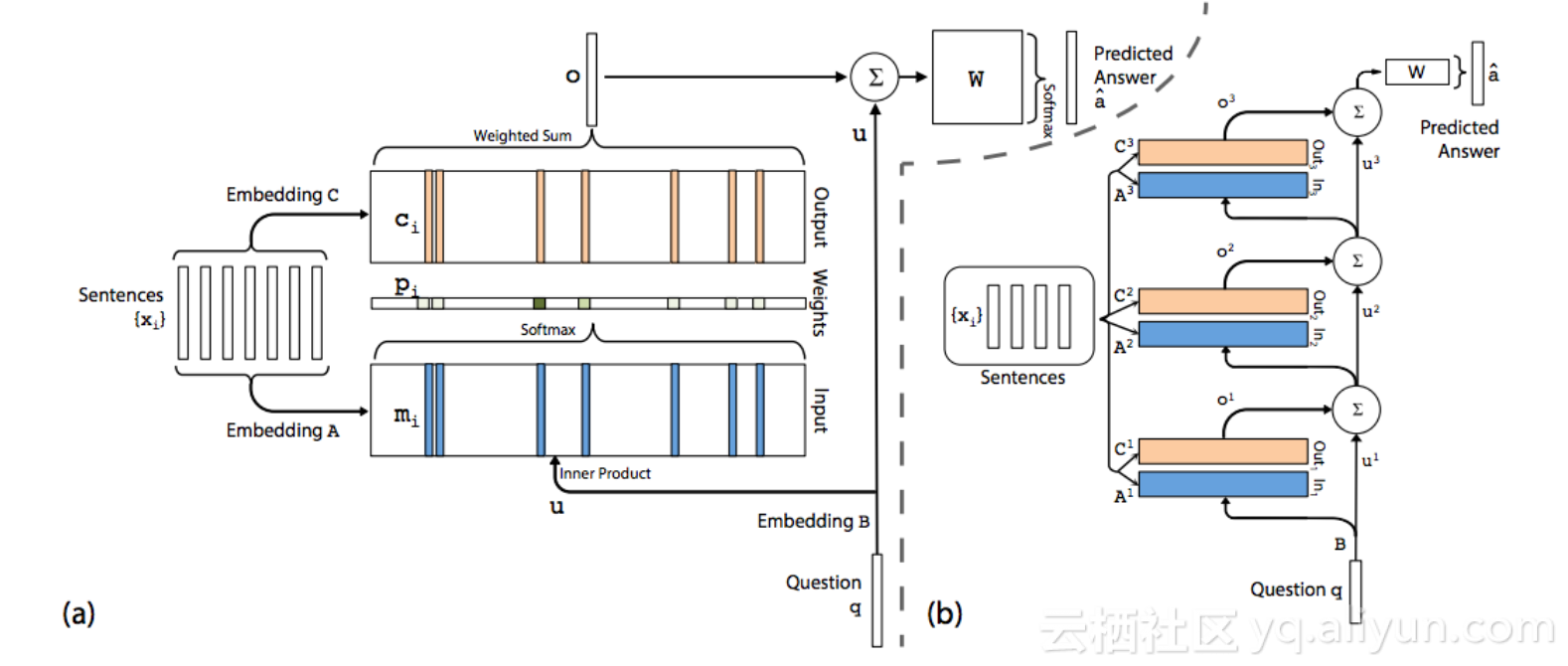

MemN2N can be viewed as a generalization of an RNN:

1) A sentence in MemN2N plays the role that a word plays in an RNN.
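One way to make the RNN analogy concrete is the per-hop recurrence from the End-To-End Memory Networks paper listed in the Reference section. The notation below follows the paper rather than the variable names in the code: $u^{k}$ is the controller state at hop $k$, $m_i$ and $c_i$ are the input and output memory vectors for slot $i$, and $H$ is the linear map used when weights are tied across hops. This is a sketch of the general form only; nonlinearities and temporal encoding are left out.

$$
\begin{aligned}
p_i &= \mathrm{softmax}_i\left( (u^{k})^{\top} m_i \right), \\
o^{k} &= \sum_i p_i \, c_i, \\
u^{k+1} &= H u^{k} + o^{k}.
\end{aligned}
$$

With the same parameters reused at every hop, the last line has the shape of an RNN state update, where the hop index takes the place of the time step and the memory read-out $o^{k}$ takes the place of the per-step input.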

2. Kernel Code

Build Model

    import tensorflow as tf  # TensorFlow 1.x API

    # Both functions are methods of the model class. self.hid, self.context,
    # self.time, self.target and self.share_list are assumed to be created
    # in __init__, which is not shown here.
    def build_model(self):
        self.build_memory()

        # Output projection from the last hidden state to vocabulary logits
        self.W = tf.Variable(tf.random_normal([self.edim, self.nwords], stddev=self.init_std))
        z = tf.matmul(self.hid[-1], self.W)

        self.loss = tf.nn.softmax_cross_entropy_with_logits(logits=z, labels=self.target)

        self.lr = tf.Variable(self.current_lr)
        self.opt = tf.train.GradientDescentOptimizer(self.lr)

        # Clip each gradient by its norm before applying it
        params = [self.A, self.B, self.C, self.T_A, self.T_B, self.W]
        grads_and_vars = self.opt.compute_gradients(self.loss, params)
        clipped_grads_and_vars = [(tf.clip_by_norm(gv[0], self.max_grad_norm), gv[1])
                                  for gv in grads_and_vars]

        # Increment global_step, then apply the clipped gradients
        inc = self.global_step.assign_add(1)
        with tf.control_dependencies([inc]):
            self.optim = self.opt.apply_gradients(clipped_grads_and_vars)

        tf.global_variables_initializer().run()
        self.saver = tf.train.Saver()

Build Memory

    def build_memory(self):
        self.global_step = tf.Variable(0, name="global_step")

        # A: input memory embedding, B: output memory embedding,
        # C: linear map applied to the hidden state between hops
        self.A = tf.Variable(tf.random_normal([self.nwords, self.edim], stddev=self.init_std))
        self.B = tf.Variable(tf.random_normal([self.nwords, self.edim], stddev=self.init_std))
        self.C = tf.Variable(tf.random_normal([self.edim, self.edim], stddev=self.init_std))

        # Temporal Encoding
        self.T_A = tf.Variable(tf.random_normal([self.mem_size, self.edim], stddev=self.init_std))
        self.T_B = tf.Variable(tf.random_normal([self.mem_size, self.edim], stddev=self.init_std))

        # m_i = sum A_ij * x_ij + T_A_i
        Ain_c = tf.nn.embedding_lookup(self.A, self.context)
        Ain_t = tf.nn.embedding_lookup(self.T_A, self.time)
        Ain = tf.add(Ain_c, Ain_t)

        # c_i = sum B_ij * x_ij + T_B_i
        Bin_c = tf.nn.embedding_lookup(self.B, self.context)
        Bin_t = tf.nn.embedding_lookup(self.T_B, self.time)
        Bin = tf.add(Bin_c, Bin_t)

        for h in range(self.nhop):  # the original used Python 2's xrange
            # Attention over memory: P = softmax(hid . Ain^T)
            self.hid3dim = tf.reshape(self.hid[-1], [-1, 1, self.edim])
            Aout = tf.matmul(self.hid3dim, Ain, adjoint_b=True)
            Aout2dim = tf.reshape(Aout, [-1, self.mem_size])
            P = tf.nn.softmax(Aout2dim)

            # Memory read-out: attention-weighted sum of the output memory
            probs3dim = tf.reshape(P, [-1, 1, self.mem_size])
            Bout = tf.matmul(probs3dim, Bin)
            Bout2dim = tf.reshape(Bout, [-1, self.edim])

            # Next hidden state: linear map of the current state plus the read-out
            Cout = tf.matmul(self.hid[-1], self.C)
            Dout = tf.add(Cout, Bout2dim)

            self.share_list[0].append(Cout)

            # The first lindim units stay linear; the remaining units go through ReLU
            if self.lindim == self.edim:
                self.hid.append(Dout)
            elif self.lindim == 0:
                self.hid.append(tf.nn.relu(Dout))
            else:
                F = tf.slice(Dout, [0, 0], [self.batch_size, self.lindim])
                G = tf.slice(Dout, [0, self.lindim], [self.batch_size, self.edim-self.lindim])
                K = tf.nn.relu(G)
                self.hid.append(tf.concat(axis=1, values=[F, K]))
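The loop body in build_memory can be hard to follow through the reshapes, so here is a minimal pure-Python sketch of a single hop for one example (no batch dimension, no TensorFlow). The function names `softmax`, `dot` and `memory_hop` and the toy values are hypothetical and chosen only for illustration; the arguments `m`, `c` and `H` are assumed to correspond to `Ain`, `Bin` and `self.C` in the listing. The partial ReLU controlled by `lindim` is left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def memory_hop(u, m, c, H):
    """One memory hop for a single example.

    u: current hidden state, length edim      (self.hid[-1] in the listing)
    m: input memory, mem_size x edim          (assumed to match Ain)
    c: output memory, mem_size x edim         (assumed to match Bin)
    H: linear map, edim x edim                (assumed to match self.C)
    Returns the next hidden state and the attention weights.
    """
    edim = len(u)
    # Attention weights over memory slots: p = softmax(m . u)
    p = softmax([dot(m_i, u) for m_i in m])
    # Read-out: attention-weighted sum of the output memory rows
    o = [sum(p_i * c_i[d] for p_i, c_i in zip(p, c)) for d in range(edim)]
    # Row vector times matrix, as in tf.matmul(hid, C)
    uH = [sum(u[k] * H[k][d] for k in range(edim)) for d in range(edim)]
    return [a + b for a, b in zip(uH, o)], p


# Toy values: two memory slots, embedding size 2, identity linear map
u = [1.0, 0.0]
m = [[1.0, 0.0], [0.0, 1.0]]
c = [[0.5, 0.5], [2.0, -1.0]]
H = [[1.0, 0.0], [0.0, 1.0]]
new_u, p = memory_hop(u, m, c, H)
```

In this toy setup the state `u` is aligned with the first memory slot, so that slot receives the larger attention weight and its output vector contributes more to the next state. Stacking `nhop` calls of `memory_hop`, feeding each result back in as `u`, gives the multi-hop structure of the loop above.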

3. Reference

Blog: Memory-network

Paper: End-To-End Memory Networks
