chainer - Backbone Network (backbone) - DenseNet Code Refactoring [Source Code Included]

Time: 2023-09-27 14:21:00

Preface

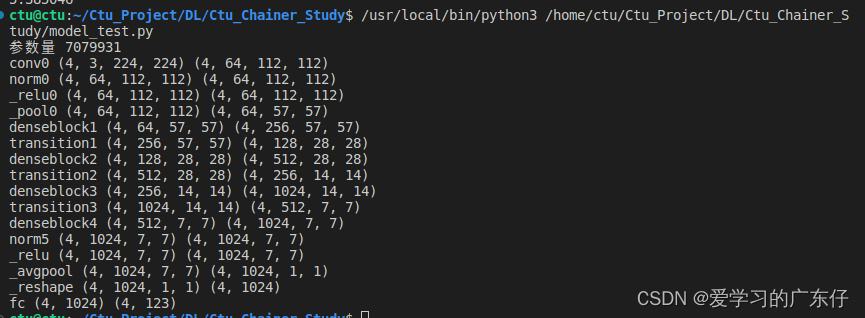

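This article implements the DenseNet architecture in Chainer. The Chainer version is built by following the structure of the PyTorch (torchvision) implementation, and the code also computes DenseNet's parameter count.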
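To sanity-check the parameter count reported by the code, it can also be derived by hand from the configuration. The sketch below is a hypothetical helper (`count_densenet_params` is not part of Chainer or of the original code). It assumes the same layout as the implementation: bias-free convolutions, BatchNormalization layers contributing only gamma and beta (running mean and variance are not trainable parameters), and a final Linear layer with a bias.

```python
def count_densenet_params(growth_rate, block_config, num_init_features,
                          bn_size=4, in_channels=3, num_classes=1000):
    """Analytically count the trainable parameters of a DenseNet-BC model."""
    def bn(c):
        return 2 * c  # gamma + beta

    # Stem: 7x7 bias-free conv followed by BatchNorm
    total = in_channels * num_init_features * 7 * 7 + bn(num_init_features)
    c = num_init_features
    mid = bn_size * growth_rate  # bottleneck width inside each dense layer
    for i, num_layers in enumerate(block_config):
        for _ in range(num_layers):
            total += bn(c) + c * mid                        # BN + 1x1 conv
            total += bn(mid) + mid * growth_rate * 3 * 3    # BN + 3x3 conv
            c += growth_rate                                # concat grows the input of the next layer
        if i != len(block_config) - 1:
            total += bn(c) + c * (c // 2)                   # transition: BN + 1x1 conv
            c //= 2
    total += bn(c)                                          # final BatchNorm (norm5)
    total += c * num_classes + num_classes                  # classifier weights + bias
    return total


print(count_densenet_params(32, (6, 12, 24, 16), 64))  # DenseNet-121, 1000 classes: 7978856
```

With `num_classes=123`, as in the usage example, the classifier shrinks to 1024 * 123 + 123 parameters and the total becomes 7079931, which is what `model.count_params()` should report if the Chainer model follows the same layout.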

Implementation

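```python
import math

import numpy as np
import chainer
import chainer.functions as F
import chainer.links as L


class _DenseLayer(chainer.Chain):
    """BN-ReLU-Conv1x1-BN-ReLU-Conv3x3 bottleneck layer (DenseNet-BC)."""

    def __init__(self, input_c: int, growth_rate: int, bn_size: int, drop_rate: float):
        super(_DenseLayer, self).__init__()
        # (name, callable) pairs: names starting with '_' are parameter-free
        # functions, every other entry is a Link registered on this chain
        self.layers = []
        self.layers += [('norm1', L.BatchNormalization(input_c))]
        self.layers += [('_relu1', F.relu)]
        self.layers += [('conv1', L.Convolution2D(in_channels=input_c, out_channels=bn_size * growth_rate,
                                                  ksize=1, stride=1, nobias=True))]
        self.layers += [('norm2', L.BatchNormalization(bn_size * growth_rate))]
        self.layers += [('_relu2', F.relu)]
        self.layers += [('conv2', L.Convolution2D(in_channels=bn_size * growth_rate, out_channels=growth_rate,
                                                  ksize=3, stride=1, pad=1, nobias=True))]
        if drop_rate > 0:
            # F.dropout draws a fresh mask on every call and is a no-op when chainer.config.train is False
            self.layers += [('_dropout1', lambda x: F.dropout(x, ratio=drop_rate))]

        with self.init_scope():
            for name, link in self.layers:
                if not name.startswith('_'):
                    setattr(self, name, link)

    def forward(self, x):
        # x is the list of all previous feature maps; concatenate them along the channel axis
        x = F.concat(x, axis=1)
        for name, f in self.layers:
            if not name.startswith('_'):
                x = getattr(self, name)(x)
            else:
                x = f(x)
        return x
```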

```python
class _DenseBlock(chainer.Chain):
    def __init__(self, num_layers: int, input_c: int, bn_size: int, growth_rate: int, drop_rate: float):
        super(_DenseBlock, self).__init__()
        self.layers = []
        for i in range(num_layers):
            # layer i sees the block input plus the i * growth_rate channels produced so far
            self.layers += [("denselayer%d" % (i + 1),
                             _DenseLayer(input_c + i * growth_rate, growth_rate=growth_rate,
                                         bn_size=bn_size, drop_rate=drop_rate))]
        with self.init_scope():
            for name, layer in self.layers:
                setattr(self, name, layer)

    def forward(self, x):
        features = [x]
        for name, _ in self.layers:
            new_features = getattr(self, name)(features)
            features.append(new_features)
        # output channels: input_c + num_layers * growth_rate
        return F.concat(features, axis=1)
```
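Each `_DenseLayer` concatenates its `growth_rate` new feature maps onto everything before it, so layer i of a block receives `input_c + i * growth_rate` channels and the block outputs `input_c + num_layers * growth_rate`. Every `_Transition` except the one after the last block then halves that number. A minimal pure-Python sketch of this bookkeeping (the helper `densenet_channels` is hypothetical):

```python
def densenet_channels(growth_rate, block_config, num_init_features):
    """Return (block_output, after_transition) channel counts for each stage."""
    stages = []
    c = num_init_features
    for i, num_layers in enumerate(block_config):
        # layer j inside the block sees c + j * growth_rate input channels
        c = c + num_layers * growth_rate
        # a transition halves the channels, except after the last block
        after = c // 2 if i != len(block_config) - 1 else c
        stages.append((c, after))
        c = after
    return stages


print(densenet_channels(32, (6, 12, 24, 16), 64))
# [(256, 128), (512, 256), (1024, 512), (1024, 1024)]
```

The last entry, 1024 channels for DenseNet-121, is the `num_features` value used to build `norm5` and `fc`. For the configuration with growth rate 48, blocks (6, 12, 36, 24) and 96 initial features, the same function ends at 2208 channels.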

```python
class _Transition(chainer.Chain):
    """BN-ReLU-Conv1x1 to compress channels, then 2x2 average pooling to halve H and W."""

    def __init__(self, input_c: int, output_c: int):
        super(_Transition, self).__init__()
        self.layers = []
        self.layers += [('norm', L.BatchNormalization(input_c))]
        self.layers += [('_relu1', F.relu)]
        self.layers += [('conv', L.Convolution2D(in_channels=input_c, out_channels=output_c,
                                                 ksize=1, stride=1, nobias=True))]
        self.layers += [('_pool', lambda x: F.average_pooling_2d(x, ksize=2, stride=2, pad=0))]
        with self.init_scope():
            for name, link in self.layers:
                if not name.startswith('_'):
                    setattr(self, name, link)

    def forward(self, x):
        for name, f in self.layers:
            if not name.startswith('_'):
                x = getattr(self, name)(x)
            else:
                x = f(x)
        return x
```
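`DenseNet.__init__` tracks `output_size` by hand so that the final average-pooling window covers the whole feature map. Dense blocks keep H and W unchanged (3x3 convolutions with `pad=1`), so only `conv0`, `pool0` and the transitions matter. One Chainer detail explains the `math.ceil`: `F.max_pooling_2d` defaults to `cover_all=True`, which rounds the output size up, whereas PyTorch's `MaxPool2d` rounds down by default. A small sketch of the arithmetic (the helper names are hypothetical):

```python
import math


def conv_out(size, k, s, p, cover_all=False):
    """Output size of a conv/pool window; cover_all rounds up instead of down."""
    if cover_all:
        return math.ceil((size + 2 * p - k) / s) + 1
    return (size + 2 * p - k) // s + 1


def densenet_spatial_sizes(image_size, num_blocks=4, chainer_max_pool=True):
    sizes = []
    s = conv_out(image_size, 7, 2, 3)                     # conv0: 7x7, stride 2, pad 3
    sizes.append(s)
    s = conv_out(s, 3, 2, 1, cover_all=chainer_max_pool)  # pool0: 3x3 max pooling, stride 2, pad 1
    sizes.append(s)
    for _ in range(num_blocks - 1):                       # one transition after every block but the last
        s = conv_out(s, 2, 2, 0)                          # 2x2 average pooling, stride 2
        sizes.append(s)
    return sizes


print(densenet_spatial_sizes(224))                          # [112, 57, 28, 14, 7]
print(densenet_spatial_sizes(224, chainer_max_pool=False))  # [112, 56, 28, 14, 7]
```

For a 224x224 input the Chainer stem yields 57x57 after pooling instead of PyTorch's 56x56, but both reach 7x7 after the three transitions, so the classifier sees the same number of features.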

```python
class DenseNet(chainer.Chain):
    cfgs = {
        'densenet121': {'image_size': 224, 'growth_rate': 32, 'block_config': (6, 12, 24, 16), 'num_init_features': 64},
        'densenet161': {'image_size': 224, 'growth_rate': 48, 'block_config': (6, 12, 36, 24), 'num_init_features': 96},
        'densenet169': {'image_size': 224, 'growth_rate': 32, 'block_config': (6, 12, 32, 32), 'num_init_features': 64},
        'densenet201': {'image_size': 224, 'growth_rate': 32, 'block_config': (6, 12, 48, 32), 'num_init_features': 64},
        'densenet264': {'image_size': 224, 'growth_rate': 32, 'block_config': (6, 12, 64, 48), 'num_init_features': 64},
    }

    def __init__(self, model_name='densenet121', batch_size=4, channels=3, image_size=224, bn_size: int = 4,
                 drop_rate: float = 0, num_classes: int = 1000, **kwargs):
        # batch_size is kept only for backward compatibility; the batch dimension is read from the input
        super(DenseNet, self).__init__()
        cfg = self.cfgs[model_name]
        self.image_size = image_size
        self.layers = []

        # stem: 7x7/2 conv -> BN -> ReLU -> 3x3/2 max pooling
        self.layers += [('conv0', L.Convolution2D(in_channels=channels, out_channels=cfg['num_init_features'],
                                                  ksize=7, stride=2, pad=3, nobias=True))]
        output_size = (self.image_size - 7 + 2 * 3) // 2 + 1
        self.layers += [('norm0', L.BatchNormalization(cfg['num_init_features']))]
        self.layers += [('_relu0', F.relu)]
        self.layers += [('_pool0', lambda x: F.max_pooling_2d(x, ksize=3, stride=2, pad=1))]
        # Chainer's max_pooling_2d defaults to cover_all=True, which rounds the output size up
        output_size = math.ceil((output_size - 3 + 2 * 1) / 2) + 1

        # each dense block, followed by a transition except after the last one
        num_features = cfg['num_init_features']
        for i, num_layers in enumerate(cfg['block_config']):
            block = _DenseBlock(num_layers=num_layers, input_c=num_features, bn_size=bn_size,
                                growth_rate=cfg['growth_rate'], drop_rate=drop_rate)
            self.layers += [("denseblock%d" % (i + 1), block)]
            num_features = num_features + num_layers * cfg['growth_rate']
            if i != len(cfg['block_config']) - 1:
                trans = _Transition(input_c=num_features, output_c=num_features // 2)
                self.layers += [("transition%d" % (i + 1), trans)]
                output_size = (output_size - 2 + 2 * 0) // 2 + 1
                num_features = num_features // 2

        # head: BN -> ReLU -> global average pooling -> flatten -> fully connected
        self.layers += [("norm5", L.BatchNormalization(num_features))]
        self.layers += [("_relu", F.relu)]
        # the default argument binds the current output_size to the lambda
        self.layers += [('_avgpool', lambda x, k=output_size: F.average_pooling_2d(x, ksize=k, stride=1, pad=0))]
        # flatten using the actual batch size of x instead of a fixed batch_size
        self.layers += [('_reshape', lambda x: F.reshape(x, (x.shape[0], -1)))]
        self.layers += [('fc', L.Linear(num_features, num_classes))]

        with self.init_scope():
            for name, link in self.layers:
                if not name.startswith('_'):
                    setattr(self, name, link)

    def forward(self, x):
        for name, f in self.layers:
            if not name.startswith('_'):
                x = getattr(self, name)(x)
            else:
                x = f(x)
            # print(name, x.shape)  # uncomment to trace the feature-map shape after each layer
        if chainer.config.train:
            return x  # raw class scores (logits) for F.softmax_cross_entropy
        return F.softmax(x)  # class probabilities at test time
```

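This class is the DenseNet implementation itself. Note that its forward pass distinguishes between training and testing.

During training it returns x (the raw class scores) directly; during testing it passes x through softmax to obtain class probabilities.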
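Returning raw scores during training fits `F.softmax_cross_entropy`, which takes unnormalized log-probabilities and applies the softmax internally; feeding it the output of `F.softmax` would normalize twice and distort the loss. The NumPy sketch below illustrates the math only (it is not Chainer's own code): cross-entropy is computed from logits through a numerically stable log-softmax, and the softmax used at test time rescales scores into probabilities without changing which class ranks highest.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy computed directly from unnormalized scores."""
    logp = log_softmax(logits)
    return -logp[np.arange(len(labels)), labels].mean()


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 5)).astype(np.float32)  # raw scores, as returned in training mode
labels = np.array([0, 3, 1, 4])

probs = np.exp(log_softmax(logits))                   # what test mode returns
print(probs.sum(axis=1))                              # each row sums to 1 (up to rounding)
print(np.array_equal(probs.argmax(axis=1), logits.argmax(axis=1)))  # softmax keeps the ranking
print(softmax_cross_entropy(logits, labels))
```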

Usage

```python
if __name__ == '__main__':
    batch_size = 4
    n_channels = 3
    image_size = 224
    num_classes = 123

    model = DenseNet(num_classes=num_classes, channels=n_channels, image_size=image_size, batch_size=batch_size)
    print("Number of parameters:", model.count_params())

    # random input images and integer class labels
    x = np.random.rand(batch_size, n_channels, image_size, image_size).astype(np.float32)
    t = np.random.randint(0, num_classes, size=(batch_size,)).astype(np.int32)

    with chainer.using_config('train', True):
        y1 = model(x)  # training mode: raw scores
        loss1 = F.softmax_cross_entropy(y1, t)
```
