Converting a PDF File to Text and Matching Audio with Python

Code repository:

https://github.com/TiffinTech/python-pdf-audo

============================================

```python
import PyPDF2
import pyttsx3

# Insert the name of your PDF here.
with open('book.pdf', 'rb') as pdf_file:
    pdfreader = PyPDF2.PdfReader(pdf_file)
    pages = []
    for page in pdfreader.pages:
        # Extract the page's text and flatten line breaks into spaces.
        clean_text = page.extract_text().strip().replace('\n', ' ')
        print(clean_text)
        pages.append(clean_text)

# Queue the text of all pages into one audio file; name it whatever you like.
speaker = pyttsx3.init()
speaker.save_to_file(' '.join(pages), 'story.mp3')
speaker.runAndWait()
speaker.stop()
```

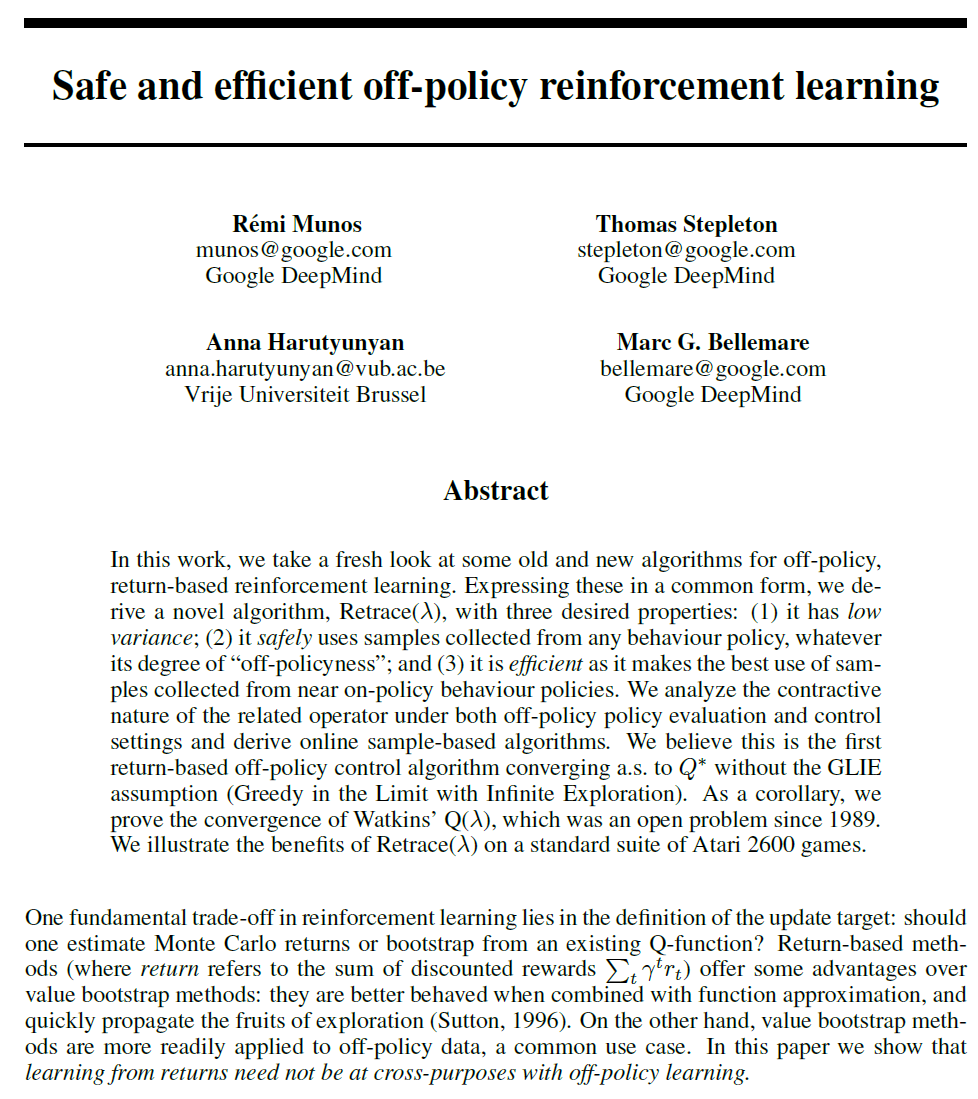

First, the PDF text extraction: it works tolerably well. Here is a demo:

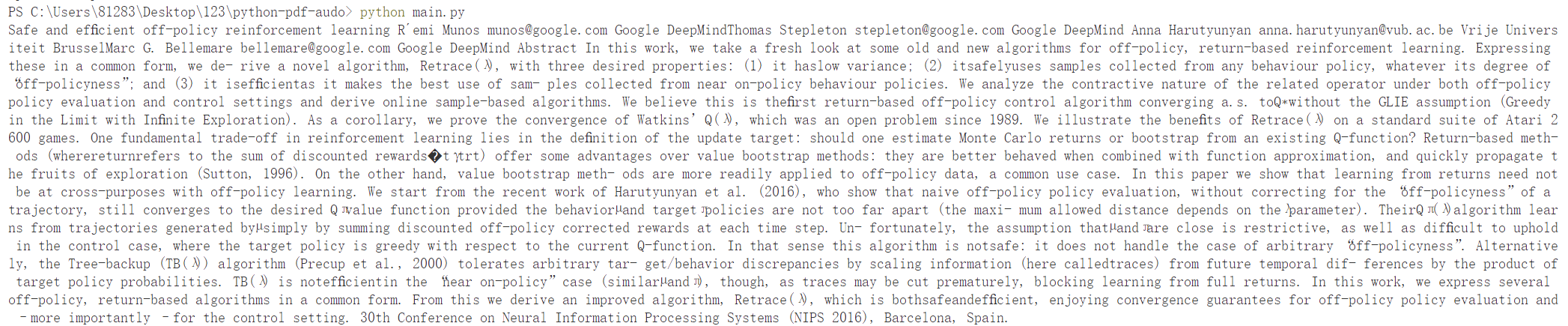

The extracted text is:

Safe and efficient off-policy reinforcement learning R´emi Munos munos@google.com Google DeepMindThomas Stepleton stepleton@google.com Google DeepMind Anna Harutyunyan anna.harutyunyan@vub.ac.be Vrije Universiteit BrusselMarc G. Bellemare bellemare@google.com Google DeepMind Abstract In this work, we take a fresh look at some old and new algorithms for off-policy, return-based reinforcement learning. Expressing

these in a common form, we de- rive a novel algorithm, Retrace(λ), with three desired properties: (1) it haslow variance; (2) itsafelyuses samples collected from any behaviour policy, whatever its degree of

“off-policyness”; and (3) it isefficientas it makes the best use of sam- ples collected from near on-policy behaviour policies. We analyze the contractive nature of the related operator under both off-policy

policy evaluation and control settings and derive online sample-based algorithms. We believe this is thefirst return-based off-policy control algorithm converging a.s. toQ∗without the GLIE assumption (Greedy

in the Limit with Infinite Exploration). As a corollary, we prove the convergence of Watkins’ Q(λ), which was an open problem since 1989. We illustrate the benefits of Retrace(λ) on a standard suite of Atari 2600 games. One fundamental trade-off in reinforcement learning lies in the definition of the update target: should one estimate Monte Carlo returns or bootstrap from an existing Q-function? Return-based meth- ods (wherereturnrefers to the sum of discounted rewards� tγtrt) offer some advantages over value bootstrap methods: they are better behaved when combined with function approximation, and quickly propagate the fruits of exploration (Sutton, 1996). On the other hand, value bootstrap meth- ods are more readily applied to off-policy data, a common use case. In this paper we show that learning from returns need not be at cross-purposes with off-policy learning. We start from the recent work of Harutyunyan et al. (2016), who show that naive off-policy policy evaluation, without correcting for the “off-policyness” of a

trajectory, still converges to the desired Qπvalue function provided the behaviorµand targetπpolicies are not too far apart (the maxi- mum allowed distance depends on theλparameter). TheirQπ(λ)algorithm learns from trajectories generated byµsimply by summing discounted off-policy corrected rewards at each time step. Un- fortunately, the assumption thatµandπare close is restrictive, as well as difficult to uphold in the control case, where the target policy is greedy with respect to the current Q-function. In that sense this algorithm is notsafe: it does not handle the case of arbitrary “off-policyness”. Alternatively, the Tree-backup (TB(λ)) algorithm (Precup et al., 2000) tolerates arbitrary tar- get/behavior discrepancies by scaling information (here calledtraces) from future temporal dif- ferences by the product of target policy probabilities. TB(λ) is notefficientin the “near on-policy” case (similarµandπ), though, as traces may be cut prematurely, blocking learning from full returns. In this work, we express several

off-policy, return-based algorithms in a common form. From this we derive an improved algorithm, Retrace(λ), which is bothsafeandefficient, enjoying convergence guarantees for off-policy policy evaluation and – more importantly – for the control setting. 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain.

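That is what the text extraction produces. The audio conversion, by contrast, is really poor: the generated audio does not match the source text. My conclusion is that the PDF text-extraction part of the code above is just about usable and worth keeping as groundwork for future projects, while the audio conversion is not worth considering.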
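The sample output above still contains words that were split at line ends in the PDF (`de- rive`, `sam- ples`, `meth- ods`), because the script only replaces `\n` with a space. Below is a minimal sketch of a cleanup step that could run on each page's raw text before printing it or passing it to the speech engine. The helper name `clean_page_text` is hypothetical and not part of the original repository, and the rule is a heuristic: a compound word that happens to break exactly at its own hyphen (for example `off-` / `policy`) would be joined incorrectly.

```python
import re


def clean_page_text(raw_text: str) -> str:
    """Hypothetical helper: tidy raw text extracted from one PDF page."""
    # Join words split by a hyphen at the end of a line: "de-\nrive" -> "derive".
    joined = re.sub(r'(\w)-\n(\w)', r'\1\2', raw_text)
    # Collapse remaining line breaks and runs of whitespace into single spaces.
    return re.sub(r'\s+', ' ', joined).strip()


if __name__ == '__main__':
    sample = "these in a common form, we de-\nrive a novel\nalgorithm"
    print(clean_page_text(sample))
```

In the script, this would stand in for the `text.strip().replace('\n', ' ')` step. It does not fix words that the extractor glued together (such as `haslow`), since that information is already lost in the extracted string.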

=============================================
