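MongoDB Sharded Cluster

Sharded cluster

1. What is sharding?

Splitting data horizontally across multiple servers.

2. Why use a sharded cluster?

The data volume exceeds what a single machine can handle. With that much data, recovery is slow and the data is hard to manage.

Concurrency exceeds the performance limit of a single machine.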

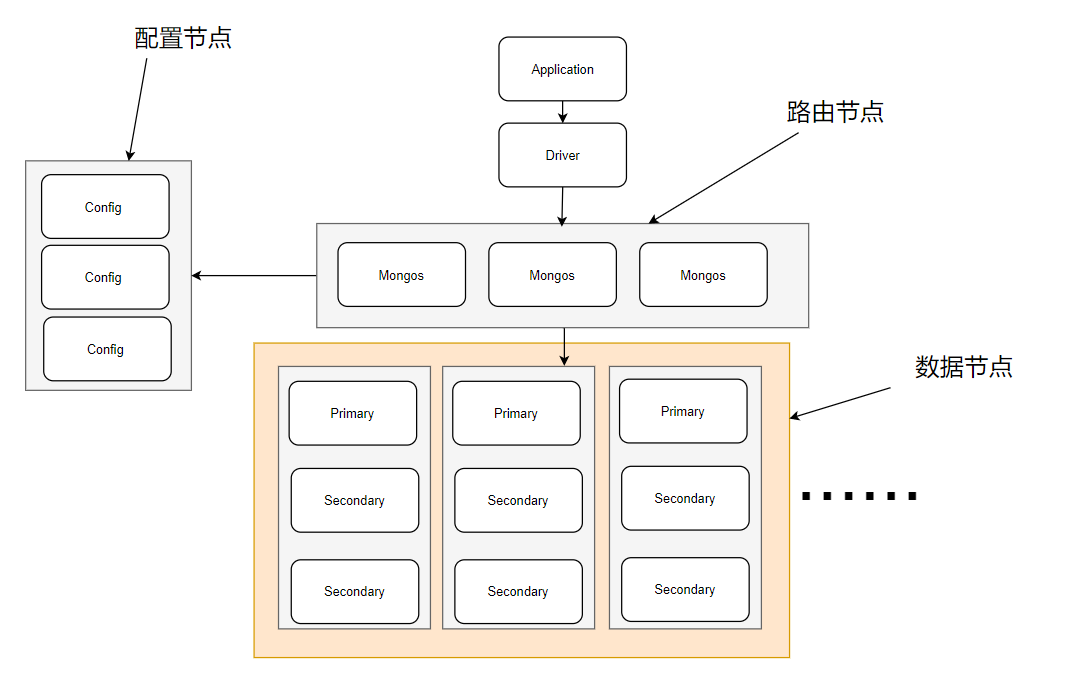

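A MongoDB sharded cluster consists of the following parts.

Sharded cluster roles

Router nodes

mongos provides a single entry point to the cluster. It forwards application requests, chooses the appropriate data nodes for reads and writes, and merges the results returned by multiple data nodes. mongos is stateless, and deploying several mongos nodes is recommended for high availability. Clients should send their requests to mongos rather than directly to the shard servers. When a query includes the shard key, mongos sends it only to the shard(s) that own the matching data; otherwise mongos sends the query to all shards and merges all of the results.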
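To make the routing rule concrete, here is a minimal sketch in plain JavaScript (it is not mongos code). It assumes a single-field shard key, equality filters only, and a hypothetical chunk table of [min, max) ranges; the names `targetShards` and `userId` are illustrative.

```javascript
// Hypothetical chunk table: each chunk owns shard key values in [min, max).
const chunks = [
  { min: -Infinity, max: 100, shard: "shard1" },
  { min: 100, max: 200, shard: "shard2" },
  { min: 200, max: Infinity, shard: "shard1" },
];

// Simplified router: handles an equality match on a single-field shard key only.
function targetShards(chunkTable, query, shardKeyField) {
  if (Object.prototype.hasOwnProperty.call(query, shardKeyField)) {
    const value = query[shardKeyField];
    const owner = chunkTable.find(c => value >= c.min && value < c.max);
    return [owner.shard]; // targeted query: only the shard owning the value
  }
  // No shard key in the filter: broadcast to every shard that owns a chunk;
  // the router would then merge the partial results.
  return [...new Set(chunkTable.map(c => c.shard))];
}

console.log(targetShards(chunks, { userId: 150 }, "userId")); // [ 'shard2' ]
console.log(targetShards(chunks, { name: "Tom" }, "userId")); // [ 'shard1', 'shard2' ]
```

Real routing also handles range filters, compound keys and hashed keys; the point here is only the difference between a targeted query and a broadcast.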

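Config nodes

Config servers are ordinary mongod processes; deploying them as a replica set is recommended for high availability.

They store the cluster metadata, that is, how the sharded data is distributed. If the primary of the config server replica set fails, the config servers switch to read-only mode.

In read-only mode, neither chunk splitting nor balancing can run. If the whole config server replica set fails, the sharded cluster becomes unavailable.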

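Data nodes (shards)

Each shard is a replica set, and the cluster scales out horizontally to a maximum of 1024 shards. Shards hold no duplicate data; only all of them together hold the complete data set.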

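Shard key

A shard key can be a single field or a compound of several fields.

1. Range sharding

If the key values run from min to max, the data can be split into ranges of that key.

2. Hashed sharding

The data is split into chunks by hash(key).

Shard key values are used to divide the documents of a collection into chunks. The shard key must be backed by an index or be a prefix of an index (single-field or compound). Sharding can use either the shard key values themselves or hashes of those values.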
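The difference between the two strategies can be sketched as follows. This is a simplified model, assuming chunks are defined by sorted split points; the hash function is FNV-1a, used only as a stand-in because MongoDB computes its own 64-bit hash for hashed shard keys. The function names are hypothetical.

```javascript
// Range sharding: sorted split points define chunks
// (-inf, p0), [p0, p1), ..., [pn, +inf); returns the chunk index of a key.
function rangeChunkFor(key, splitPoints) {
  let i = 0;
  while (i < splitPoints.length && key >= splitPoints[i]) i++;
  return i;
}

// Stand-in 32-bit hash (FNV-1a). This is NOT the hash MongoDB uses.
function fnv1a32(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  return h >>> 0; // unsigned 32-bit value
}

// Hashed sharding: chunks are ranges of the hash space, so a key's chunk
// depends on hash(key) rather than on where the key sits in sorted order.
function hashChunkFor(key, numChunks) {
  return Math.floor((fnv1a32(String(key)) / 2 ** 32) * numChunks);
}

const keys = [1, 2, 3, 4, 5, 6];
console.log(keys.map(k => rangeChunkFor(k, [3, 5]))); // [ 0, 0, 1, 1, 2, 2 ]
console.log(keys.map(k => hashChunkFor(k, 3)));       // indices scattered by the hash
```

With range sharding, neighbouring keys share a chunk, which keeps range queries targeted; with hashed sharding, neighbouring keys are scattered, which spreads writes but turns range queries into broadcasts.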

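Choosing a shard key

1. Prefer a wide range of key values (a compound shard key can widen the range).

2. Prefer an even distribution of key values (a compound shard key can balance the distribution).

3. Avoid keys whose values only ever increase or only ever decrease (use a hashed shard key instead).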
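Rule 3 can be illustrated with a small simulation, assuming range sharding with chunks that were split at 1000 and 2000 earlier. The split points and the helper `chunkCounts` are hypothetical; the point is that monotonically increasing keys all land in the last chunk, so one shard takes every insert.

```javascript
// Count how many keys fall into each chunk, given sorted split points.
function chunkCounts(keys, splitPoints) {
  const counts = new Array(splitPoints.length + 1).fill(0);
  for (const k of keys) {
    let i = 0;
    while (i < splitPoints.length && k >= splitPoints[i]) i++;
    counts[i]++;
  }
  return counts;
}

// New keys keep increasing (like timestamps or auto-incremented ids),
// so every insert hits the last chunk: a write hotspot.
const increasingKeys = Array.from({ length: 1000 }, (_, i) => 5000 + i);
console.log(chunkCounts(increasingKeys, [1000, 2000])); // [ 0, 0, 1000 ]

// Keys spread over the whole range land evenly in all chunks.
const spreadKeys = Array.from({ length: 3000 }, (_, i) => i);
console.log(chunkCounts(spreadKeys, [1000, 2000])); // [ 1000, 1000, 1000 ]
```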

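Chunk splitting

A chunk is split when it grows too large or contains too many documents. An automatic split can only be triggered by inserting or updating documents, and a split is carried out purely by updating the metadata.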
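A simplified split can be sketched as below. Assumptions: the chunk tracks its keys in memory, the trigger is a document count `maxDocs`, and the split point is the median key. MongoDB 5.0 actually decides by chunk size (64 MB by default) and picks split points itself, so `maybeSplit` is a hypothetical model only.

```javascript
// Simplified auto-split: if a chunk holds more than maxDocs documents, split it at
// the median key. Only the [min, max) boundaries change; the keys arrays are kept
// just to show which documents each half covers, nothing is copied between shards.
function maybeSplit(chunk, maxDocs) {
  if (chunk.keys.length <= maxDocs) return [chunk];
  const sorted = [...chunk.keys].sort((a, b) => a - b);
  const splitPoint = sorted[Math.floor(sorted.length / 2)];
  if (splitPoint === sorted[0]) {
    // The median equals the smallest key (many identical keys); this sketch does not
    // search for another split point. MongoDB marks chunks that exceed the maximum
    // size but cannot be split as "jumbo".
    return [chunk];
  }
  return [
    { min: chunk.min, max: splitPoint, keys: sorted.filter(k => k < splitPoint) },
    { min: splitPoint, max: chunk.max, keys: sorted.filter(k => k >= splitPoint) },
  ];
}

const parts = maybeSplit({ min: 0, max: 100, keys: [5, 1, 9, 3, 7] }, 4);
console.log(JSON.stringify(parts.map(p => [p.min, p.max, p.keys])));
// [[0,5,[1,3]],[5,100,[5,7,9]]]
```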

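Balancing

A balancer running in the background monitors and adjusts the balance of the cluster. It is triggered when the difference in chunk count between the shard with the most chunks and the shard with the fewest becomes too large, and also when shards are added to or removed from the cluster.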
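"Too large" is defined by migration thresholds. The sketch below uses the thresholds documented for MongoDB versions before 6.0.3 (this walkthrough runs 5.0.8), which depend on the collection's total chunk count; newer versions balance by data size instead. The helper names are hypothetical, and the first example reuses the chunk counts that sh.status() reports later in this walkthrough for config.system.sessions.

```javascript
// Migration thresholds for MongoDB before 6.0.3, based on total chunk count.
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) return 2;
  if (totalChunks < 80) return 4;
  return 8;
}

// chunksPerShard: { shardName: chunkCount } for one collection.
// Returns true when the gap between the fullest and emptiest shard reaches the threshold.
function balancerWouldMigrate(chunksPerShard) {
  const counts = Object.values(chunksPerShard);
  const total = counts.reduce((sum, n) => sum + n, 0);
  const gap = Math.max(...counts) - Math.min(...counts);
  return gap >= migrationThreshold(total);
}

console.log(balancerWouldMigrate({ shard1: 1018, shard2: 6 })); // true
console.log(balancerWouldMigrate({ shard1: 10, shard2: 9 }));   // false
```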

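Features of a MongoDB sharded cluster

1. Fully transparent to the application

2. Automatic data balancing

3. Dynamic scaling without taking the cluster offline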

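Building a sharded cluster

The following builds a cluster with two shards.

1. Create the data directories for the two replica sets, each with three instances, giving 6 data nodes in total (run this in /home/admin/mongDB/shard, the directory the commands below use):

mkdir -p shard1 shard1second1 shard1second2 shard2 shard2second1 shard2second2

2. Create the log files, 6 in total: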

[root@node01 shard]# touch shard1second1/mongod.log

[root@node01 shard]# touch shard1second2/mongod.log

[root@node01 shard]# touch shard2second2/mongod.log

[root@node01 shard]# touch shard2second1/mongod.log

[root@node01 shard]# touch shard1/mongod.log

[root@node01 shard]# touch shard2/mongod.log

3. Start the mongod instances of the first shard (three instances in total):

mongod --bind_ip 0.0.0.0 --replSet shard1 --dbpath /home/admin/mongDB/shard/shard1 --logpath /home/admin/mongDB/shard/shard1/mongod.log --port 27010 --fork --shardsvr

mongod --bind_ip 0.0.0.0 --replSet shard1 --dbpath /home/admin/mongDB/shard/shard1second1 --logpath /home/admin/mongDB/shard/shard1second1/mongod.log --port 27011 --fork --shardsvr

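mongod --bind_ip 0.0.0.0 --replSet shard1 --dbpath /home/admin/mongDB/shard/shard1second2 --logpath /home/admin/mongDB/shard/shard1second2/mongod.log --port 27012 --fork --shardsvr

4. Once all mongod instances of the first shard are running, connect to one of them (for example with mongo --port 27010) and initiate the replica set: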

rs.initiate(

{_id:"shard1",

"members":[

{"_id":0,"host":"node01:27010"},

{"_id":1,"host":"node01:27011"},

{"_id":2,"host":"node01:27012"}

]

});
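The rs.initiate() document follows a fixed pattern, so it can be generated. This is a minimal sketch with a hypothetical helper `buildReplSetConfig`, assuming, as in this walkthrough, that all members run on one host with consecutive ports.

```javascript
// Hypothetical helper: build the document passed to rs.initiate(), assuming all
// members run on one host with consecutive ports.
function buildReplSetConfig(setName, host, basePort, memberCount) {
  const members = [];
  for (let i = 0; i < memberCount; i++) {
    members.push({ _id: i, host: `${host}:${basePort + i}` });
  }
  return { _id: setName, members: members };
}

const shard1Config = buildReplSetConfig("shard1", "node01", 27010, 3);
console.log(JSON.stringify(shard1Config));
// In the mongo shell (connected to node01:27010) it could be used as:
//   rs.initiate(buildReplSetConfig("shard1", "node01", 27010, 3))
```

The same call with ("shard2", "node01", 28010, 3) or ("config", "node01", 37010, 3) reproduces the other two rs.initiate() documents in this walkthrough.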

Build the second shard the same way; only the paths and ports change:

mongod --bind_ip 0.0.0.0 --replSet shard2 --dbpath /home/admin/mongDB/shard/shard2 --logpath /home/admin/mongDB/shard/shard2/mongod.log --port 28010 --fork --shardsvr

mongod --bind_ip 0.0.0.0 --replSet shard2 --dbpath /home/admin/mongDB/shard/shard2second1 --logpath /home/admin/mongDB/shard/shard2second1/mongod.log --port 28011 --fork --shardsvr

mongod --bind_ip 0.0.0.0 --replSet shard2 --dbpath /home/admin/mongDB/shard/shard2second2 --logpath /home/admin/mongDB/shard/shard2second2/mongod.log --port 28012 --fork --shardsvr
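The three start commands of each replica set differ only in the set name, directory and port, so they can be generated rather than edited by hand. This sketch uses a hypothetical helper `mongodCommands` and assumes this walkthrough's layout (directories `<name>`, `<name>second1`, `<name>second2` and consecutive ports); it only prints the commands and does not run them.

```javascript
// Hypothetical helper: build the three mongod start commands of one replica set.
// role is "shardsvr" for shard members or "configsvr" for config servers.
function mongodCommands(setName, baseDir, basePort, role) {
  const dirs = [setName, `${setName}second1`, `${setName}second2`];
  return dirs.map((dir, i) =>
    [
      "mongod --bind_ip 0.0.0.0",
      `--replSet ${setName}`,
      `--dbpath ${baseDir}/${dir}`,
      `--logpath ${baseDir}/${dir}/mongod.log`,
      `--port ${basePort + i}`,
      "--fork",
      `--${role}`,
    ].join(" ")
  );
}

mongodCommands("shard2", "/home/admin/mongDB/shard", 28010, "shardsvr").forEach(cmd => console.log(cmd));
```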

rs.initiate(

{_id:"shard2",

"members":[

{"_id":0,"host":"node01:28010"},

{"_id":1,"host":"node01:28011"},

{"_id":2,"host":"node01:28012"}

]

});

Wait for the replica set to elect a primary.

5. Check the status

shard2:PRIMARY> rs.status()

{

"set" : "shard2",

"date" : ISODate("2022-05-17T13:19:41.393Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"votingMembersCount" : 3,

"writableVotingMembersCount" : 3,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1652793580, 1),

"t" : NumberLong(1)

},

"lastCommittedWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1652793580, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1652793580, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1652793580, 1),

"t" : NumberLong(1)

},

"lastAppliedWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastDurableWallTime" : ISODate("2022-05-17T13:19:40.542Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1652793570, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "electionTimeout",

"lastElectionDate" : ISODate("2022-05-17T13:18:50.505Z"),

"electionTerm" : NumberLong(1),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(1652793519, 1),

"t" : NumberLong(-1)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1652793519, 1),

"t" : NumberLong(-1)

},

"numVotesNeeded" : 2,

"priorityAtElection" : 1,

"electionTimeoutMillis" : NumberLong(10000),

"numCatchUpOps" : NumberLong(0),

"newTermStartDate" : ISODate("2022-05-17T13:18:50.530Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2022-05-17T13:18:51.427Z")

},

"members" : [

{

"_id" : 0,

"name" : "node01:28010",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 415,

"optime" : {

"ts" : Timestamp(1652793580, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-05-17T13:19:40Z"),

"lastAppliedWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastDurableWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1652793530, 1),

"electionDate" : ISODate("2022-05-17T13:18:50Z"),

"configVersion" : 1,

"configTerm" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "node01:28011",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 61,

"optime" : {

"ts" : Timestamp(1652793570, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1652793570, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-05-17T13:19:30Z"),

"optimeDurableDate" : ISODate("2022-05-17T13:19:30Z"),

"lastAppliedWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastDurableWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastHeartbeat" : ISODate("2022-05-17T13:19:40.536Z"),

"lastHeartbeatRecv" : ISODate("2022-05-17T13:19:39.531Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "node01:28010",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1,

"configTerm" : 1

},

{

"_id" : 2,

"name" : "node01:28012",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 61,

"optime" : {

"ts" : Timestamp(1652793570, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1652793570, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2022-05-17T13:19:30Z"),

"optimeDurableDate" : ISODate("2022-05-17T13:19:30Z"),

"lastAppliedWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastDurableWallTime" : ISODate("2022-05-17T13:19:40.542Z"),

"lastHeartbeat" : ISODate("2022-05-17T13:19:40.533Z"),

"lastHeartbeatRecv" : ISODate("2022-05-17T13:19:39.531Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "node01:28010",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1,

"configTerm" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1652793580, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1652793580, 1)

}

Configure the config server replica set (three instances in total):

mkdir -p config configsecond1 configsecond2

[root@node01 config]# touch config/mongod.log configsecond1/mongod.log configsecond2/mongod.log

Start the config server replica set:

mongod --bind_ip 0.0.0.0 --replSet config --dbpath /home/admin/mongDB/config/config --logpath /home/admin/mongDB/config/config/mongod.log --port 37010 --fork --configsvr

mongod --bind_ip 0.0.0.0 --replSet config --dbpath /home/admin/mongDB/config/configsecond1 --logpath /home/admin/mongDB/config/configsecond1/mongod.log --port 37011 --fork --configsvr

mongod --bind_ip 0.0.0.0 --replSet config --dbpath /home/admin/mongDB/config/configsecond2 --logpath /home/admin/mongDB/config/configsecond2/mongod.log --port 37012 --fork --configsvr

Connect to one of the config server instances and initiate the replica set:

mongo --port 37011

rs.initiate(

{_id:"config",

"members":[

{"_id":0,"host":"node01:37010"},

{"_id":1,"host":"node01:37011"},

{"_id":2,"host":"node01:37012"}

]

})

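Configure the mongos router nodes

1. Start a mongos instance, specifying the address list of the config servers:

mongos --bind_ip 0.0.0.0 --logpath /home/admin/mongDB/mongos/mongos.log --port 4000 --fork --configdb config/node01:37010,node01:37011,node01:37012

Here --configdb is the config server address list: the config replica set name, a slash, and its members. The log directory /home/admin/mongDB/mongos must exist before mongos is started.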
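Both --configdb and sh.addShard() take the same `<replSetName>/<host:port>,<host:port>,...` format. The small helpers below, `seedList` and `parseSeedList`, are hypothetical and only illustrate building and checking that string.

```javascript
// Build "<replSetName>/<host:port>,<host:port>,..." as used by --configdb and sh.addShard().
function seedList(setName, hosts) {
  if (hosts.length === 0) throw new Error("at least one host is required");
  return `${setName}/${hosts.join(",")}`;
}

// Split such a string back into the replica set name and the member list.
function parseSeedList(str) {
  const slash = str.indexOf("/");
  if (slash <= 0) throw new Error(`missing replica set name in "${str}"`);
  return { setName: str.slice(0, slash), hosts: str.slice(slash + 1).split(",") };
}

const configHosts = [37010, 37011, 37012].map(p => `node01:${p}`);
console.log(seedList("config", configHosts));
// config/node01:37010,node01:37011,node01:37012
```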

2. Connect to mongos and add the shards

Connect directly with the mongo shell client:

mongo --port 4000    (direct local connection)

Run:

sh.addShard("shard1/node01:27010,node01:27011,node01:27012");

sh.addShard("shard2/node01:28010,node01:28011,node01:28012");

Adding the shards:

mongos> sh.addShard("shard1/node01:27010,node01:27011,node01:27012");

{

"shardAdded" : "shard1",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1652794062, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1652794062, 5)

}

mongos> sh.addShard("shard2/node01:28010,node01:28011,node01:28012");

{

"shardAdded" : "shard2",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1652794092, 81),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1652794092, 67)

}

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6283a1ef72a1b724b895aa03")

}

shards:

{ "_id" : "shard1", "host" : "shard1/node01:27010,node01:27011,node01:27012", "state" : 1, "topologyTime" : Timestamp(1652794062, 2) }

{ "_id" : "shard2", "host" : "shard2/node01:28010,node01:28011,node01:28012", "state" : 1, "topologyTime" : Timestamp(1652794092, 64) }

active mongoses:

"5.0.8" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration results for the last 24 hours:

6 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1018

shard2 6

too many chunks to print, use verbose if you want to force print

To check the sharding status:

sh.status();

Multiple mongos instances can be created in the same way.

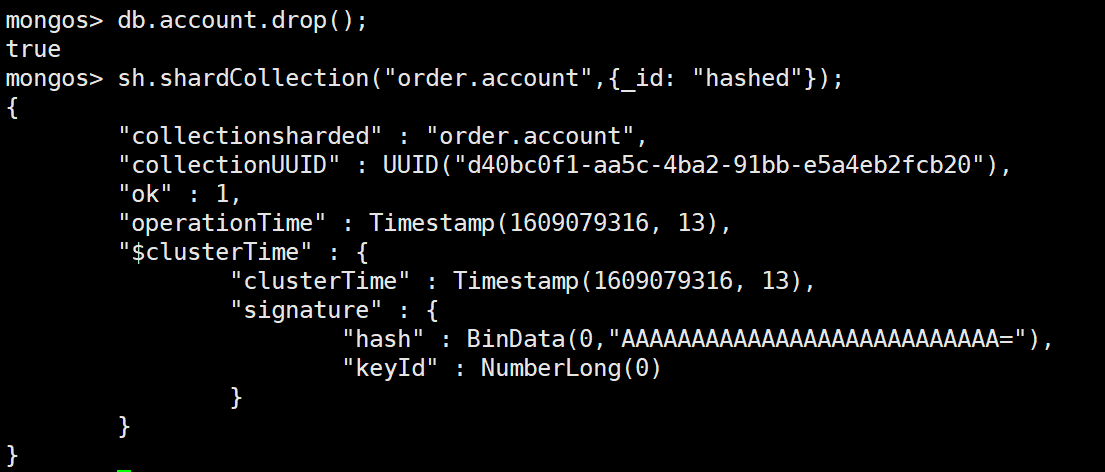

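Creating a sharded collection

1. MongoDB shards data per collection: having a sharded cluster does not mean data is sharded automatically. Each collection has to be sharded explicitly.

First, enable sharding on the database:

sh.enableSharding("<database name>");

For example:

sh.enableSharding("order");

Then shard the collection:

sh.shardCollection("<database>.<collection>",{_id: "hashed"});

sh.shardCollection("order.orders",{_id: "hashed"});

More examples with compound range shard keys:

sh.shardCollection("records.people", { "zipcode": 1, "name": 1 } )

sh.shardCollection("people.addresses", { "state": 1, "_id": 1 } )

sh.shardCollection("assets.chairs", { "type": 1, "_id": 1 } )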
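With a compound range key such as { "zipcode": 1, "name": 1 }, documents are ordered by the first field and then by the second, so one zipcode can span several chunks. The sketch below, with the hypothetical function `compareByShardKey`, assumes each field holds values of a single comparable type and ignores MongoDB's full cross-type BSON ordering; the sample documents and split point are invented.

```javascript
// Compare two documents by a compound range shard key pattern:
// the first field decides, later fields only break ties.
function compareByShardKey(pattern, a, b) {
  for (const field of Object.keys(pattern)) {
    if (a[field] < b[field]) return -1;
    if (a[field] > b[field]) return 1;
  }
  return 0;
}

const pattern = { zipcode: 1, name: 1 };
const people = [
  { zipcode: "10002", name: "Ann" },
  { zipcode: "10001", name: "Zoe" },
  { zipcode: "10001", name: "Bob" },
];
people.sort((a, b) => compareByShardKey(pattern, a, b));
console.log(people.map(p => `${p.zipcode}/${p.name}`));
// [ '10001/Bob', '10001/Zoe', '10002/Ann' ]

// A chunk boundary inside one zipcode: zipcode 10001 ends up in two chunks,
// which is how the second field widens the usable key range.
const splitPoint = { zipcode: "10001", name: "M" };
console.log(people.map(p => (compareByShardKey(pattern, p, splitPoint) < 0 ? "lower" : "upper")));
// [ 'lower', 'upper', 'upper' ]
```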

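Adding a shard to the cluster

Start a third replica set the same way as the two above, then connect to mongos and add it:

mongo --port 4000

sh.addShard("shard3/node01:27013,node01:27014,node01:27015");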

Removing a shard

Make sure the balancer is enabled:

mongos> sh.getBalancerState()

true

Start removing the shard:

mongos> use admin

switched to db admin

mongos> db.runCommand( { removeShard: "shard3" } )

{

"msg" : "draining started successfully",

"state" : "started",

"shard" : "shard3",

"ok" : 1

}

Check the migration progress by running the same command again:

mongos> use admin

switched to db admin

mongos> db.runCommand( { removeShard: "shard3" } )

{

"msg" : "draining ongoing",

"state" : "ongoing",

"remaining" : {

"chunks" : NumberLong(3),

"dbs" : NumberLong(0)

},

"ok" : 1

}

remaining.chunks shows how many chunks have not been migrated yet.
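Since removeShard reports progress each time it is called, the polling can be scripted. This is a sketch under stated assumptions: `runRemoveShard` and `sleep` are injected callbacks (in the mongo shell they could be `() => db.adminCommand({ removeShard: "shard3" })` and the shell's `sleep`), and `waitForShardRemoval` is a hypothetical name. It stops early when all chunks are drained but databases remain, because that case needs movePrimary, described next.

```javascript
// Poll removeShard until it reports "completed".
function waitForShardRemoval(runRemoveShard, sleep, maxPolls) {
  for (let i = 0; i < maxPolls; i++) {
    const res = runRemoveShard();
    if (res.ok !== 1) throw new Error(res.errmsg || "removeShard failed");
    if (res.state === "completed") return res;
    const remaining = res.remaining || {};
    // Assumption: Number() converts the shell's NumberLong values to plain numbers.
    if (Number(remaining.chunks) === 0 && Number(remaining.dbs) > 0) {
      throw new Error("chunks drained; run movePrimary for the remaining databases");
    }
    sleep(1000); // wait before asking again
  }
  throw new Error(`shard not removed after ${maxPolls} polls`);
}
```

Calling removeShard repeatedly is the same thing the walkthrough does by hand; the first call starts draining and later calls only report progress.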

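Moving unsharded data

1) To find out whether the shard being removed is the primary shard of any database in the cluster, run one of the following:

sh.status()

db.printShardingStatus()

In the resulting output, the databases field lists each database and its primary shard.

For example, the following databases entry shows that the products database uses mongodb0 as its primary shard:

{ "_id" : "products", "partitioned" : true, "primary" : "mongodb0" }

2) To move a database to another shard, use the movePrimary command. For example, to move all remaining unsharded data from mongodb0 to mongodb1, run the following:

use admin

db.runCommand( { movePrimary: "products", to: "mongodb1" })  // products is the database name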
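When several databases use the draining shard as their primary, the movePrimary commands can be derived from the databases entries. This sketch uses a hypothetical helper `movePrimaryCommands` that takes those entries as plain objects; the "order" entry in the sample data is invented for illustration, and the internal admin and config databases are skipped.

```javascript
// List the movePrimary commands needed before drainingShard can be removed.
function movePrimaryCommands(databases, drainingShard, targetShard) {
  return databases
    .filter(d => d.primary === drainingShard && d._id !== "admin" && d._id !== "config")
    .map(d => ({ movePrimary: d._id, to: targetShard }));
}

const databases = [
  { _id: "products", partitioned: true, primary: "mongodb0" },
  { _id: "order", partitioned: true, primary: "mongodb1" },
  { _id: "config", partitioned: true, primary: "config" },
];
console.log(JSON.stringify(movePrimaryCommands(databases, "mongodb0", "mongodb1")));
// [{"movePrimary":"products","to":"mongodb1"}]
// Each command would then be run against the admin database, e.g. db.adminCommand(cmd).
```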

Completing the removal: run removeShard once more; when draining has finished it reports completed:

mongos> use admin

switched to db admin

mongos> db.runCommand( { removeShard: "shard3" } )

{

"msg" : "removeshard completed successfully",

"state" : "completed",

"shard" : "shard3",

"ok" : 1

}

mongos> sh.enableSharding("order.orders",{"_id":"hashed"});

{

"ok" : 0,

"errmsg" : "wrong type for field (primaryShard) object != string",

"code" : 13111,

"codeName" : "Location13111",

"$clusterTime" : {

"clusterTime" : Timestamp(1652794808, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1652794808, 2)

}

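This error is not caused by existing data. sh.enableSharding() takes a database name, plus optionally the name of a primary shard as a string, so the key document {"_id":"hashed"} was taken as the primary shard argument, hence "wrong type for field (primaryShard) object != string". To shard the collection, enable sharding on the database with sh.enableSharding("order") and then run sh.shardCollection("order.orders", {"_id": "hashed"}). If the collection already contains data, an index on the shard key must exist before it can be sharded.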
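To avoid mixing up the two steps, both admin commands can be derived from the namespace. This is a sketch with a hypothetical helper `shardingCommands`; it relies on the fact that database names cannot contain ".", so the first dot separates the database from the collection.

```javascript
// Build the two admin commands in order: enableSharding takes only the database
// name; the shard key belongs to shardCollection.
function shardingCommands(namespace, key) {
  const dot = namespace.indexOf(".");
  if (dot <= 0 || dot === namespace.length - 1) {
    throw new Error(`expected "<database>.<collection>", got "${namespace}"`);
  }
  return [
    { enableSharding: namespace.slice(0, dot) },
    { shardCollection: namespace, key: key },
  ];
}

console.log(JSON.stringify(shardingCommands("order.orders", { _id: "hashed" })));
// [{"enableSharding":"order"},{"shardCollection":"order.orders","key":{"_id":"hashed"}}]
// In the mongo shell, connected to mongos, each could be run with:
//   shardingCommands("order.orders", { _id: "hashed" }).forEach(cmd => printjson(db.adminCommand(cmd)));
```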
