[Big Data Development and Operations Solutions] Hadoop + Kylin Installation and the Official Cube / Streaming Cube Sample Walkthrough

Time: 2023-09-14 09:16:30

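The Hadoop + Kylin installation process was covered in detail in the previous article, so only the link is given here:

Hadoop+Mysql+Hive+zookeeper+kafka+Hbase+Sqoop+Kylin: Detailed Guide to Single-Node Pseudo-Distributed Installation and the Official Samples

Please read the previous article before this one. This article gives a brief walkthrough of two samples that Kylin provides officially: creating a regular batch cube, and building a streaming cube with Kafka + Kylin. For the full details, see the official documentation.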

1. Regular cube creation sample

[root@hadoop ~]# cd /hadoop/kylin/bin/

[root@hadoop bin]# ./sample.sh

......

2019-03-12 13:47:05,533 INFO [close-hbase-conn] client.ConnectionManager$HConnectionImplementation:1774 : Closing zookeeper sessionid=0x1696ffeb12f0016

2019-03-12 13:47:05,536 INFO [close-hbase-conn] zookeeper.ZooKeeper:684 : Session: 0x1696ffeb12f0016 closed

2019-03-12 13:47:05,536 INFO [main-EventThread] zookeeper.ClientCnxn:519 : EventThread shut down for session: 0x1696ffeb12f0016

Sample cube is created successfully in project 'learn_kylin'.

Restart Kylin Server or click Web UI => System Tab => Reload Metadata to take effect

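Once the "Restart Kylin Server ..." line above appears, the sample has been created. Restart Kylin, or open the System tab of the web UI and click Reload Metadata. After the refresh, the UI shows the following:

The project selector in the upper-left corner now lists the sample project (learn_kylin). Select the second cube, kylin_sales_cube.

Choose Build and pick any end date later than 2012.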

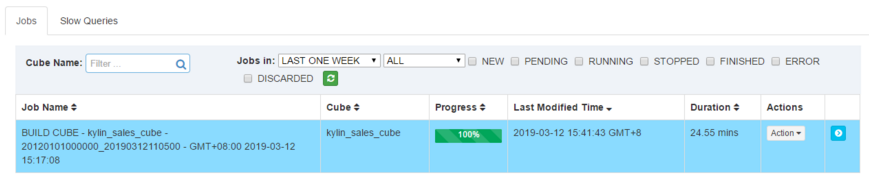

Then switch to the Monitor page:

Wait for the cube build to finish.
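The same build can also be submitted without the web UI. The following is a minimal sketch, not part of the original walkthrough, that calls Kylin's REST build endpoint with curl. It assumes Kylin listens on localhost:7070, that the default ADMIN/KYLIN account is still in place, and that GNU date is available; the function names are made up for illustration, and the exact endpoint should be checked against the REST API documentation for your Kylin version.

```shell
# Sketch: trigger a build of a batch cube through Kylin's REST API.
# Assumptions (not from the article): host/port, default credentials, GNU date.
KYLIN_URL="${KYLIN_URL:-http://localhost:7070/kylin/api}"
KYLIN_AUTH="${KYLIN_AUTH:-ADMIN:KYLIN}"

# Convert a YYYY-MM-DD date (taken as UTC midnight) to epoch milliseconds.
to_epoch_ms() {
  echo "$(( $(date -u -d "$1" +%s) * 1000 ))"
}

# Print the JSON request body for a build from start date to end date.
build_payload() {
  printf '{"startTime":%s,"endTime":%s,"buildType":"BUILD"}' \
    "$(to_epoch_ms "$1")" "$(to_epoch_ms "$2")"
}

# Submit the build job. Arguments: cube name, start date, end date.
submit_build() {
  curl -s -X PUT --user "$KYLIN_AUTH" \
    -H 'Content-Type: application/json;charset=utf-8' \
    -d "$(build_payload "$2" "$3")" \
    "$KYLIN_URL/cubes/$1/build"
}
```

For example, `submit_build kylin_sales_cube 2012-01-01 2013-01-01` would request a segment covering the year 2012; progress can then be followed on the Monitor page as described above.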

Run a SQL query.
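As an illustration of what such a query could look like, here is a small sketch that sends SQL to Kylin's REST query endpoint instead of the Insight page. The host, port, and ADMIN/KYLIN credentials are assumptions (Kylin defaults), the function names are hypothetical, and the helper only handles SQL text that contains no double quotes or backslashes.

```shell
# Sketch: run a SQL query against the sample project through the REST API.
# Assumptions (not from the article): host/port and default credentials.
KYLIN_URL="${KYLIN_URL:-http://localhost:7070/kylin/api}"
KYLIN_AUTH="${KYLIN_AUTH:-ADMIN:KYLIN}"

# Print the JSON request body. Arguments: project, SQL text, optional row limit.
# The SQL text must not contain double quotes or backslashes.
query_payload() {
  printf '{"sql":"%s","project":"%s","limit":%s}' "$2" "$1" "${3:-50}"
}

# Send the query and print the raw JSON response.
run_query() {
  curl -s -X POST --user "$KYLIN_AUTH" \
    -H 'Content-Type: application/json;charset=utf-8' \
    -d "$(query_payload "$@")" \
    "$KYLIN_URL/query"
}
```

A typical call would be `run_query learn_kylin 'select part_dt, sum(price) as total_sold from kylin_sales group by part_dt order by part_dt' 10`, which aggregates the sample sales table by date.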

Regular cube creation works. Next, the streaming cube build.

2. Building a streaming cube

Before building the streaming cube, the official sample shell script needs a small change:

[root@hadoop bin]# pwd

/hadoop/kylin/bin

[root@hadoop bin]# vim sample

sample.sh sample-streaming.sh

[root@hadoop bin]# vim sample-streaming.sh

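Change localhost to the machine's IP address. My Kafka listener is configured with the IP, so connecting through localhost fails with connection errors. After the change, the relevant lines read: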

bin/kafka-topics.sh --create --zookeeper 192.168.1.66:2181 --replication-factor 1 --partitions 1 --topic kylin_streaming_topic

echo "Generate sample messages to topic: kylin_streaming_topic."

cd ${KYLIN_HOME}

${dir}/kylin.sh org.apache.kylin.source.kafka.util.KafkaSampleProducer --topic kylin_streaming_topic --broker 192.168.1.66:9092
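Instead of editing the script by hand in vim, the replacement can be scripted. This is a small sketch using sed; the helper name is hypothetical, and it assumes every occurrence of localhost in the file should be replaced, so review the result before running the script.

```shell
# Sketch: replace every "localhost" in a file with the given host or IP.
# The original file is kept next to it with a .bak suffix.
replace_localhost() {
  local file="$1" host="$2"
  sed -i.bak "s/localhost/${host}/g" "$file"
}
```

With the paths used in this article, the call would be `replace_localhost /hadoop/kylin/bin/sample-streaming.sh 192.168.1.66`.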

Then run the script:

[root@hadoop bin]# ./sample-streaming.sh

Retrieving kafka dependency...

WARNING: Due to limitations in metric names, topics with a period ('.') or underscore ('_') could collide. To avoid issues it is best to use either, but not both.

Created topic "kylin_streaming_topic".

Generate sample messages to topic: kylin_streaming_topic.

Retrieving hadoop conf dir...

KYLIN_HOME is set to /hadoop/kylin

Retrieving hive dependency...

Retrieving hbase dependency...

Retrieving hadoop conf dir...

Retrieving kafka dependency...

Retrieving Spark dependency...

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/hadoop/kylin/tool/kylin-tool-2.4.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/hbase/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop/kylin/spark/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2019-03-12 16:08:56,015 INFO [main] util.KafkaSampleProducer:58 : args: [--topic, kylin_streaming_topic, --broker, 192.168.1.66:9092]

2019-03-12 16:08:56,057 INFO [main] util.KafkaSampleProducer:67 : options: ' topic=kylin_streaming_topic broker=192.168.1.66:9092'

2019-03-12 16:08:56,118 INFO [main] producer.ProducerConfig:279 : ProducerConfig values:

acks = all

batch.size = 16384

bootstrap.servers = [192.168.1.66:9092]

buffer.memory = 33554432

client.id =

compression.type = none

connections.max.idle.ms = 540000

enable.idempotence = false

interceptor.classes = []

key.serializer = class org.apache.kafka.common.serialization.StringSerializer

linger.ms = 1

max.block.ms = 60000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retries = 0

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.StringSerializer

2019-03-12 16:08:56,336 INFO [main] utils.AppInfoParser:109 : Kafka version : 1.1.1

2019-03-12 16:08:56,340 INFO [main] utils.AppInfoParser:110 : Kafka commitId : 8e07427ffb493498

Sending 1 message: {"country":"AUSTRALIA","amount":89.73931475539533,"qty":6,"currency":"USD","order_time":1552378136345,"category":"ELECTRONIC","device":"iOS","user":{"gender":"Male","id":"6a0b60b5-1775-4859-9de3-1474d3dfb3d8","first_name":"unknown","age":16}}

2019-03-12 16:08:57,057 INFO [kafka-producer-network-thread | producer-1] clients.Metadata:265 : Cluster ID: gw1oND0HR7aCl7QfbQnuKg

Sending 1 message: {"country":"US","amount":11.886638622312795,"qty":2,"currency":"USD","order_time":1552378137097,"category":"CLOTH","device":"iOS","user":{"gender":"Male","id":"e822dfba-9543-4eaf-b59d-6ec76c8efabd","first_name":"unknown","age":15}}

Sending 1 message: {"country":"Other","amount":13.719149755619153,"qty":6,"currency":"USD","order_time":1552378137115,"category":"CLOTH","device":"Windows","user":{"gender":"Female","id":"2bf2a3c2-87a1-4afb-a80f-d3fe4b60cbd6","first_name":"unknown","age":18}}
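Each sample message is a JSON object whose order_time field holds epoch milliseconds. When checking what the producer wrote, it can help to turn that value into a readable timestamp. The sketch below does this with sed and GNU date; the function names are hypothetical, and the sed pattern is a simplification that assumes order_time appears once per message as a plain integer.

```shell
# Sketch: read the order_time field of a sample message and format it.
# Assumes GNU date and one integer "order_time" field per message.

# Print the order_time value (epoch milliseconds) of one JSON message.
extract_order_time() {
  printf '%s' "$1" | sed -n 's/.*"order_time":\([0-9]*\).*/\1/p'
}

# Format epoch milliseconds as a UTC timestamp with second precision.
epoch_ms_to_utc() {
  date -u -d "@$(( $1 / 1000 ))" '+%Y-%m-%d %H:%M:%S'
}
```

For the first message above, `epoch_ms_to_utc 1552378136345` prints `2019-03-12 08:08:56`, which lines up with the 16:08:56 log timestamp if the host clock is set to UTC+8.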

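The script creates a topic, and the sample producer then keeps writing messages to it, polling every second. I stopped it after only part of the data had been produced. Next, go to the Kylin web UI and change the Kafka topic connection settings of the sample table: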

Click Edit.

Change localhost to the IP address.

Save and exit, then build the streaming cube that the sample provides.
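A streaming build can also be triggered from the command line, which is handy for scheduling repeated builds. The sketch below follows the curl pattern from Kylin's Kafka streaming tutorial, which uses the build2 endpoint with source offsets instead of dates. The host, port, ADMIN/KYLIN credentials, and the cube name kylin_streaming_cube are assumptions; check the cube name shown in your learn_kylin project before using it.

```shell
# Sketch: trigger a streaming cube build through Kylin's REST API.
# Assumptions (not from the article): host/port, default credentials, cube name.
KYLIN_URL="${KYLIN_URL:-http://localhost:7070/kylin/api}"
KYLIN_AUTH="${KYLIN_AUTH:-ADMIN:KYLIN}"

# Print the request body: build from the last built offset to the latest one.
streaming_build_payload() {
  printf '{"sourceOffsetStart":0,"sourceOffsetEnd":9223372036854775807,"buildType":"BUILD"}'
}

# Print the build2 URL for the given cube name.
streaming_build_url() {
  printf '%s/cubes/%s/build2' "$KYLIN_URL" "$1"
}

# Submit the streaming build job for the given cube name.
submit_streaming_build() {
  curl -s -X PUT --user "$KYLIN_AUTH" \
    -H 'Content-Type: application/json;charset=utf-8' \
    -d "$(streaming_build_payload)" \
    "$(streaming_build_url "$1")"
}
```

A call such as `submit_streaming_build kylin_streaming_cube` could be placed in a cron entry to build new segments at a fixed interval.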

Once the build finishes, the streaming cube can be queried.
