My k8s Learning Path | Day 6: Bootstrapping a k8s Cluster with kubeadm

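First I'll try bootstrapping a simple k8s cluster with kubeadm, the officially recommended tool. Installing from binaries is something I still need to learn later.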

Reference: https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/

Installation methods

Kubeadm is a tool built to provide kubeadm init and kubeadm join as best-practice "fast paths" for creating Kubernetes clusters.

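kubeadm performs the actions necessary to get a minimum viable cluster up and running. By design, it cares only about bootstrapping, not about provisioning machines. Likewise, installing various nice-to-have addons, like the Kubernetes Dashboard, monitoring solutions, and cloud-specific addons, is not in scope.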

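Instead, we expect higher-level and more tailored tooling to be built on top of kubeadm, and ideally, using kubeadm as the basis of all deployments will make it easier to create conformant clusters.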

Comparing the options

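- Binary installation: recommended for production. Maintenance and upgrades are more standardized, troubleshooting is easier, and you gain a better understanding of how the components of a k8s cluster work together.

- Minikube: for learning environments

- kubeadm bootstrap: officially recommended; for experienced users, further customization and integrating in-house maintenance tooling can be a problem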

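Environment

Environment details

Three virtual machines:

192.168.204.53 2 vCPUs, 2 GB RAM, 150 GB disk, internet access, CentOS 7.9 minimal install, VMware Workstation NAT network

192.168.204.54 2 vCPUs, 2 GB RAM, 150 GB disk, internet access, CentOS 7.9 minimal install, VMware Workstation NAT network

192.168.204.55 2 vCPUs, 2 GB RAM, 150 GB disk, internet access, CentOS 7.9 minimal install, VMware Workstation NAT network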

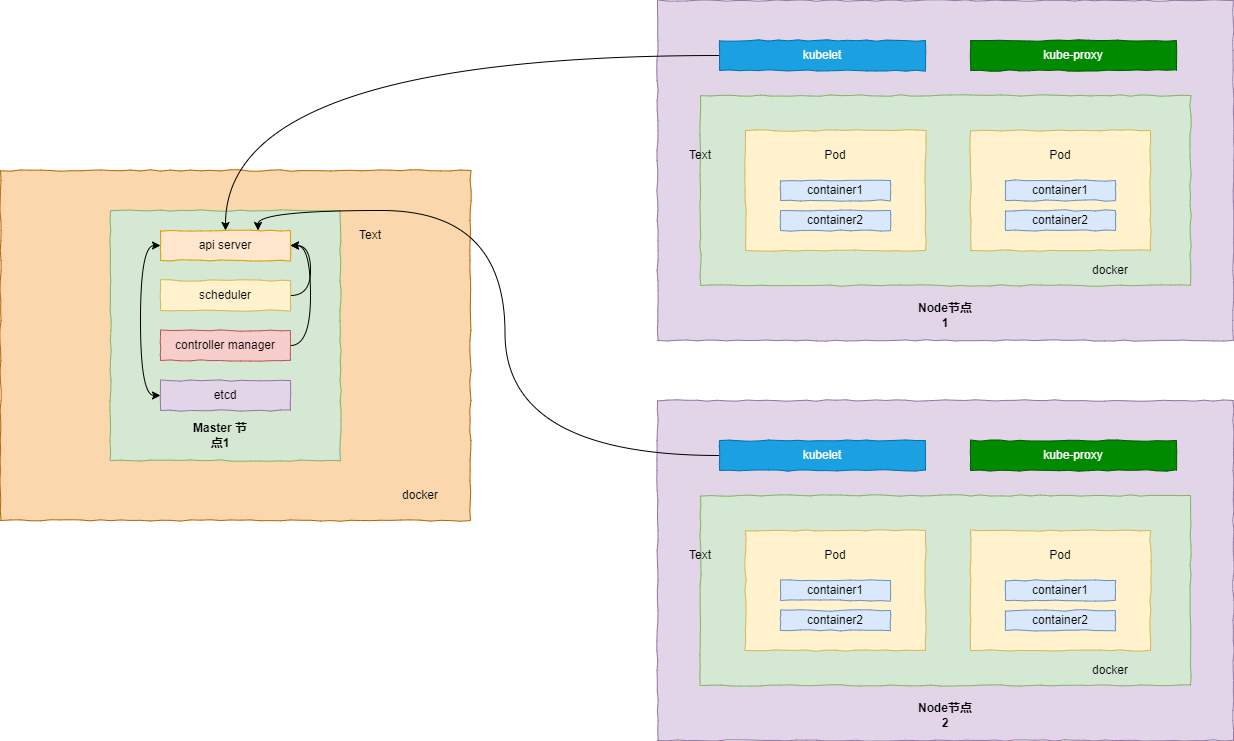

Deployment architecture

Preparing the environment

Disable the firewall

##########Run on all three nodes (the output is identical on each)##########

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@localhost ~]#

Disable NetworkManager

##########Run on all three nodes (the output is identical on each)##########

[root@localhost ~]# systemctl stop NetworkManager

[root@localhost ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

[root@localhost ~]#

Disable SELinux

Edit the config, then reboot for the change to take effect.

##########Run on all three nodes (the output is identical on each)##########

[root@localhost ~]# sed --in-place=.bak 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

[root@localhost ~]# reboot

Remote side unexpectedly closed network connection
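The sed above only rewrites a line that literally reads SELINUX=enforcing. A slightly more robust sketch sets the mode whatever is currently configured; set_selinux_mode is a hypothetical helper of mine, and its second argument only exists so it can be tried on a scratch copy. The kubeadm install guide uses permissive instead of disabled, together with setenforce 0, which switches a running system to permissive immediately; only the move to disabled needs a reboot.

```shell
# Set the SELINUX= line of an SELinux config file to the given mode
# ("disabled" as in this article, or "permissive" as in the kubeadm install guide).
# Unlike 's#SELINUX=enforcing#...#', this also rewrites a line that already says
# "permissive"; comments and the SELINUXTYPE= line are left alone.
set_selinux_mode() {
    local mode="$1" conf="${2:-/etc/selinux/config}" tmp status=0
    tmp=$(mktemp) || return 1
    # Keep a backup, like --in-place=.bak above.
    cp "$conf" "$conf.bak" || { rm -f "$tmp"; return 1; }
    # Only lines beginning with "SELINUX=" match; "SELINUXTYPE=" does not.
    sed "s/^SELINUX=.*/SELINUX=${mode}/" "$conf" > "$tmp" && cat "$tmp" > "$conf" || status=1
    rm -f "$tmp"
    return "$status"
}
```

For example, set_selinux_mode permissive && setenforce 0 avoids the reboot; set_selinux_mode disabled followed by a reboot matches what the article does.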

Set hostnames and /etc/hosts

##########First node##########

[root@localhost ~]# hostnamectl set-hostname k8s-01

[root@localhost ~]# bash

[root@k8s-01 ~]#

##########Second node##########

[root@localhost ~]# hostnamectl set-hostname k8s-02

[root@localhost ~]# bash

[root@k8s-02 ~]#

##########Third node##########

[root@localhost ~]# hostnamectl set-hostname k8s-03

[root@localhost ~]# bash

[root@k8s-03 ~]#

##########Run on every node##########

[root@k8s-01 ~]# cat >> /etc/hosts <<EOF

> 192.168.204.53 k8s-01

> 192.168.204.54 k8s-02

> 192.168.204.55 k8s-03

> EOF

[root@k8s-01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.204.53 k8s-01

192.168.204.54 k8s-02

192.168.204.55 k8s-03

[root@k8s-01 ~]#
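Appending with cat >> /etc/hosts adds duplicate lines if the step is ever run twice. A minimal idempotent sketch, assuming the three IP/hostname pairs above; add_host_entry, add_cluster_hosts and the HOSTS_FILE override are hypothetical names of mine, the override only serving to try it on a scratch copy first.

```shell
# Hypothetical override so the functions can be tried on a scratch file first.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

# Append "IP HOSTNAME" unless a line already maps that IP to that hostname.
add_host_entry() {
    local ip="$1" name="$2" ip_re
    # Escape the dots so they match literally in the regular expression.
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
    if grep -Eq "^[[:space:]]*${ip_re}[[:space:]]+(.*[[:space:]])?${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# The three nodes used in this article.
add_cluster_hosts() {
    add_host_entry 192.168.204.53 k8s-01
    add_host_entry 192.168.204.54 k8s-02
    add_host_entry 192.168.204.55 k8s-03
}
```

Source the block on each node and run add_cluster_hosts; running it a second time leaves the file unchanged.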

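Check address uniqueness

Make sure the MAC address and product_uuid are unique on every node. Kubernetes uses these values to tell nodes apart, and cloned VMs can end up sharing them, which may make the installation fail.

##########First node##########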

[root@k8s-01 ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:b6:10:3f brd ff:ff:ff:ff:ff:ff

[root@k8s-01 ~]# cat /sys/class/dmi/id/product_uuid

D1624D56-D05A-EBAD-73BD-6C38BBB6103F

[root@k8s-01 ~]#

##########Second node##########

[root@k8s-02 ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:31:80:05 brd ff:ff:ff:ff:ff:ff

[root@k8s-02 ~]# cat /sys/class/dmi/id/product_uuid

43524D56-9F3E-72BE-A236-660C7B11CEC3

[root@k8s-02 ~]#

##########Third node##########

[root@k8s-03 ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:33:64:8d brd ff:ff:ff:ff:ff:ff

[root@k8s-03 ~]# cat /sys/class/dmi/id/product_uuid

33204D56-CCE2-E035-68B1-307A77903F01

[root@k8s-03 ~]#
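Comparing three values by eye works; with more nodes a small check helps. A sketch, assuming you collect one MAC address or product_uuid per line into a single stream; find_duplicates and all_unique are hypothetical helpers. The comparison ignores case, since different tools may print these values in upper or lower case.

```shell
# Print each value that appears more than once on stdin (one value per line).
# Values are lower-cased first, since MACs and UUIDs may be printed in either case.
find_duplicates() {
    tr '[:upper:]' '[:lower:]' | sort | uniq -d
}

# Succeed only if every value on stdin is unique.
all_unique() {
    [ -z "$(find_duplicates)" ]
}
```

For example, for h in k8s-01 k8s-02 k8s-03; do ssh root@$h cat /sys/class/dmi/id/product_uuid; done | all_unique && echo unique. This assumes root SSH access between the nodes, which this article does not set up.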

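Disable swap

By default kubelet refuses to start while swap is enabled (and kubeadm's preflight checks fail), so turn it off now and keep it off across reboots.

##########First node##########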

[root@k8s-01 ~]# sed --in-place=.bak 's/.*swap.*/#&/' /etc/fstab

[root@k8s-01 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Feb 7 10:51:23 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=2308d143-dd56-4183-b171-9d741257eeaf /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-01 ~]# swapoff -a

[root@k8s-01 ~]#

##########Second node##########

[root@k8s-02 ~]# sed --in-place=.bak 's/.*swap.*/#&/' /etc/fstab

[root@k8s-02 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Feb 7 10:51:23 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=2308d143-dd56-4183-b171-9d741257eeaf /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-02 ~]# swapoff -a

[root@k8s-02 ~]#

##########Third node##########

[root@k8s-03 ~]# sed --in-place=.bak 's/.*swap.*/#&/' /etc/fstab

[root@k8s-03 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Feb 7 10:51:23 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=2308d143-dd56-4183-b171-9d741257eeaf /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-03 ~]# swapoff -a

[root@k8s-03 ~]#
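The sed expression s/.*swap.*/#&/ comments out every line containing the word swap, including lines that are already comments (they become ##). A sketch that only touches active entries whose filesystem-type field is swap, plus a check that the kernel really has no swap in use; comment_swap_entries and swap_is_off are hypothetical helpers, and the optional file arguments are only there for trying them on scratch files.

```shell
# Comment out active swap entries in an fstab-format file: lines that are not
# already comments and whose third field (filesystem type) is "swap".
# A backup is kept as <file>.bak, like the --in-place=.bak used above.
comment_swap_entries() {
    local fstab="${1:-/etc/fstab}" tmp status=0
    tmp=$(mktemp) || return 1
    cp "$fstab" "$fstab.bak" || { rm -f "$tmp"; return 1; }
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmp" && cat "$tmp" > "$fstab" || status=1
    rm -f "$tmp"
    return "$status"
}

# Succeed only if no swap device is active. /proc/swaps always starts with a
# header line, so any second line means swap is still in use.
swap_is_off() {
    awk 'END { exit (NR > 1) }' "${1:-/proc/swaps}"
}
```

On each node: comment_swap_entries && swapoff -a && swap_is_off && echo "swap is off".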

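Let iptables see bridged traffic

If sysctl reports "sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory", the br_netfilter module is not loaded yet: run modprobe br_netfilter and then sysctl -p again.

##########First node##########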

[root@k8s-01 ~]# echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

[root@k8s-01 ~]# echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

[root@k8s-01 ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

[root@k8s-01 ~]# sysctl -p

net.ipv4.ip_forward = 1

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

[root@k8s-01 ~]# modprobe br_netfilter

[root@k8s-01 ~]# sysctl -p

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@k8s-01 ~]#

##########Second node##########

[root@k8s-02 ~]# echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

[root@k8s-02 ~]# echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

[root@k8s-02 ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

[root@k8s-02 ~]# sysctl -p

net.ipv4.ip_forward = 1

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

[root@k8s-02 ~]# modprobe br_netfilter

[root@k8s-02 ~]# sysctl -p

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@k8s-02 ~]#

##########Third node##########

[root@k8s-03 ~]# echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

[root@k8s-03 ~]# echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

[root@k8s-03 ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

[root@k8s-03 ~]# sysctl -p

net.ipv4.ip_forward = 1

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

[root@k8s-03 ~]# modprobe br_netfilter

[root@k8s-03 ~]# sysctl -p

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@k8s-03 ~]#

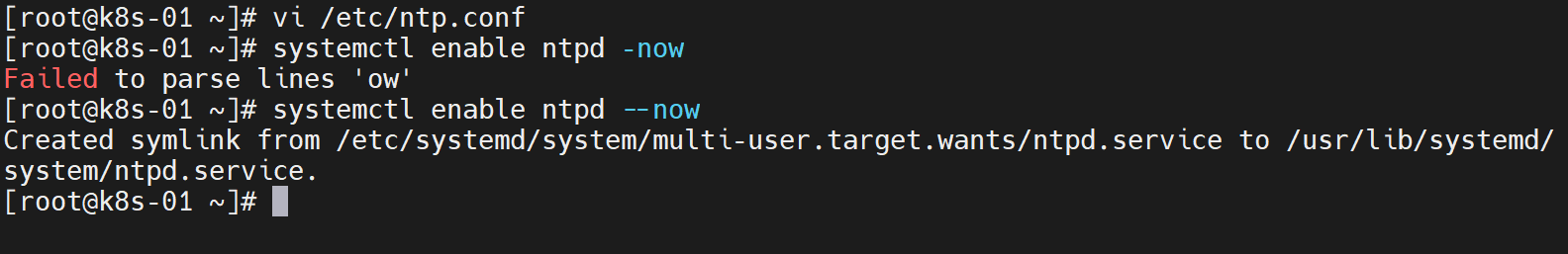

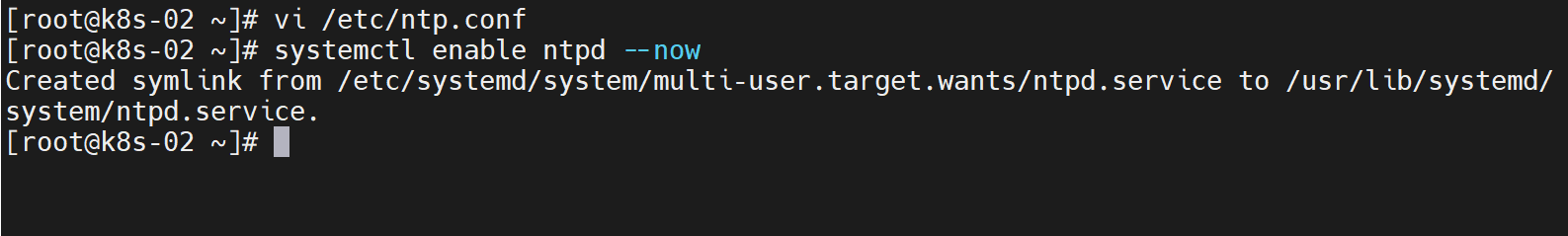

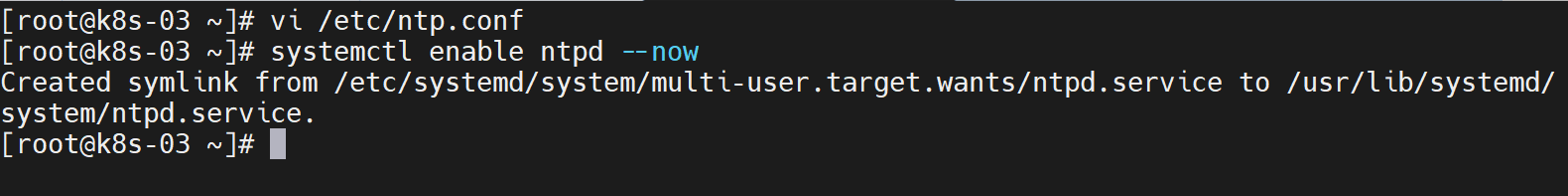
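modprobe br_netfilter only lasts until the next reboot, after which the two bridge settings can no longer be applied at boot. The Kubernetes container-runtime prerequisites page uses drop-in files under /etc/modules-load.d and /etc/sysctl.d instead; a sketch of that approach for the settings used here. write_k8s_net_conf and its root-prefix argument are hypothetical, the prefix only serving to generate the files in a scratch directory first.

```shell
# Write Kubernetes networking settings as drop-in files under an optional root
# prefix (empty = the real /etc). modules-load.d makes systemd load br_netfilter
# at every boot; sysctl.d holds the three settings used above.
write_k8s_net_conf() {
    local root="${1:-}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    cat > "$root/etc/modules-load.d/k8s.conf" <<'EOF'
br_netfilter
EOF
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On each node: write_k8s_net_conf && modprobe br_netfilter && sysctl --system (sysctl --system also reads /etc/sysctl.d). With the drop-ins in place, the three lines appended to /etc/sysctl.conf are redundant but harmless.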

Time synchronization

Reference: https://developer.aliyun.com/mirror/NTP?spm=a2c6h.13651102.0.0.39661b11cnexxJ

##########Run on all three nodes##########

yum install -y ntp

##########/etc/ntp.conf on all three nodes##########

driftfile /var/lib/ntp/drift

pidfile /var/run/ntpd.pid

logfile /var/log/ntp.log

restrict default kod nomodify notrap nopeer noquery

restrict -6 default kod nomodify notrap nopeer noquery

restrict 127.0.0.1

server 127.127.1.0

fudge 127.127.1.0 stratum 10

server ntp.aliyun.com iburst minpoll 4 maxpoll 10

restrict ntp.aliyun.com nomodify notrap nopeer noquery

##########Start ntpd and enable it at boot##########

systemctl enable ntpd --now
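Once ntpd is running, ntpq -p lists its peers, and the peer it is synchronized to is marked with * in the first column. A sketch that prints that peer from ntpq output on standard input; ntp_sync_peer is a hypothetical helper. Because the config above also lists the local clock (127.127.1.0, shown as LOCAL(0)), a selected LOCAL(0) means no upstream server is being used. Selecting a peer can take a few minutes after startup. CentOS 7 installs often have chronyd enabled as well; systemctl disable --now chronyd keeps the two daemons from competing for the clock.

```shell
# Read `ntpq -p` output on stdin and print the peer ntpd is synchronized to,
# i.e. the line whose tally code (first character) is "*".
# Exits non-zero if no peer has been selected yet.
ntp_sync_peer() {
    awk 'substr($0, 1, 1) == "*" { print substr($1, 2); found = 1 }
         END { exit (found ? 0 : 1) }'
}
```

For example: ntpq -p | ntp_sync_peer || echo "not synchronized yet".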

Install the Docker environment

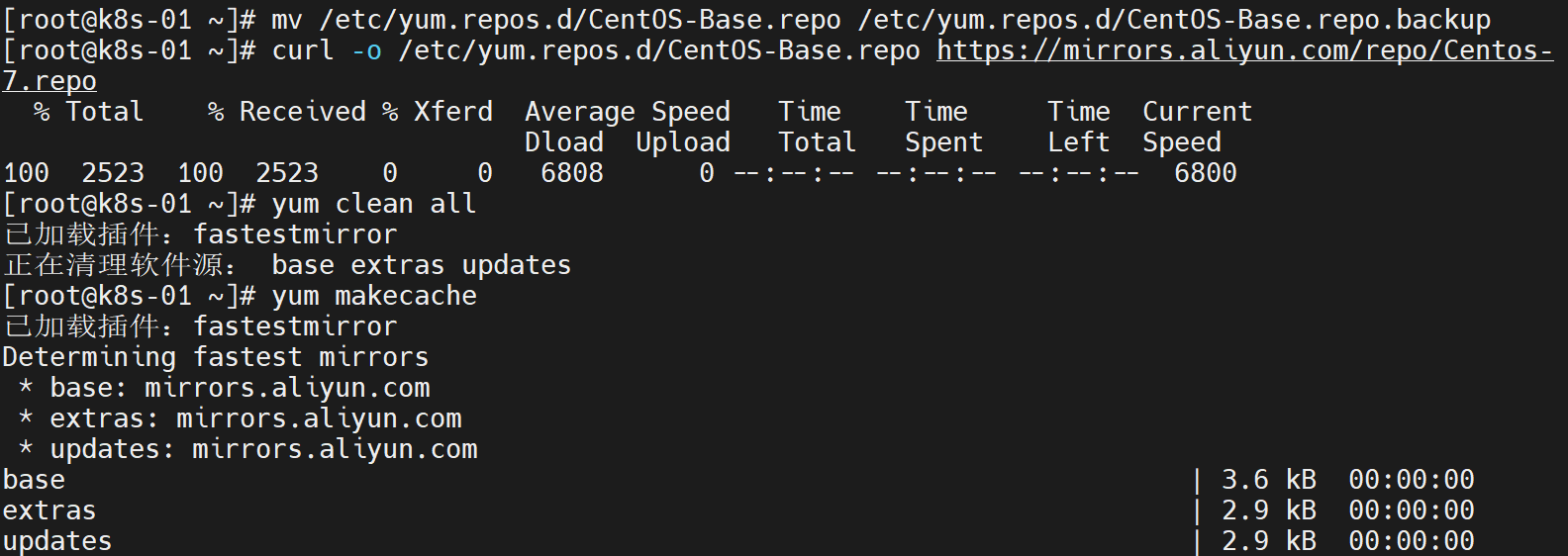

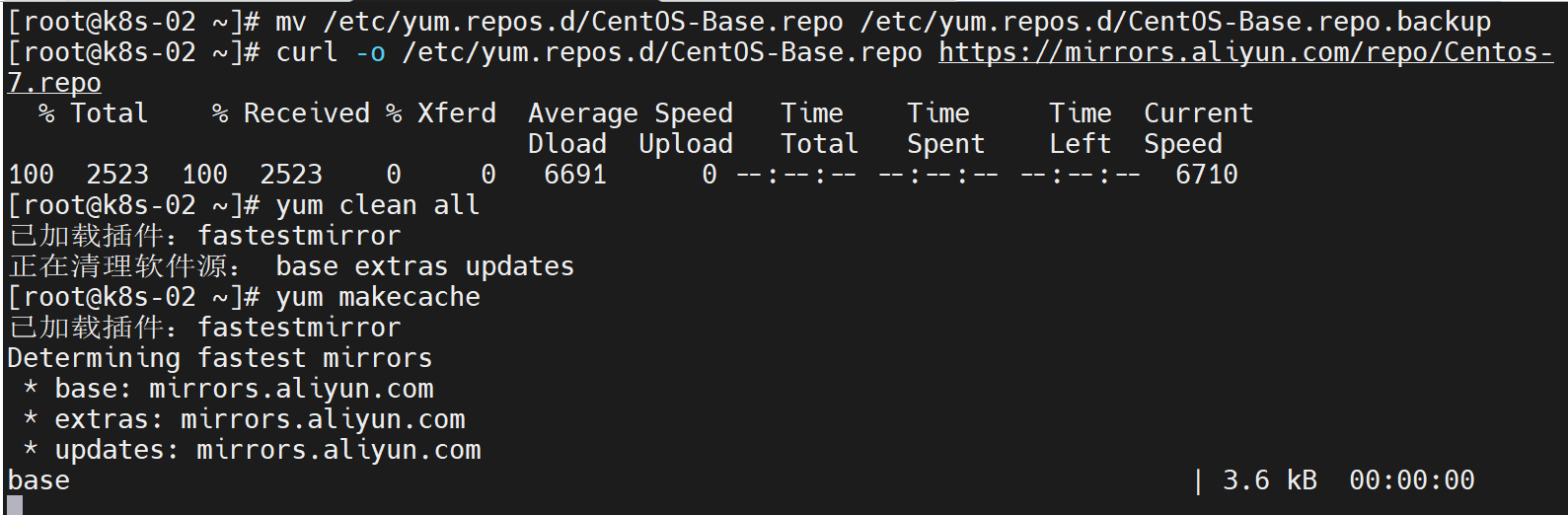

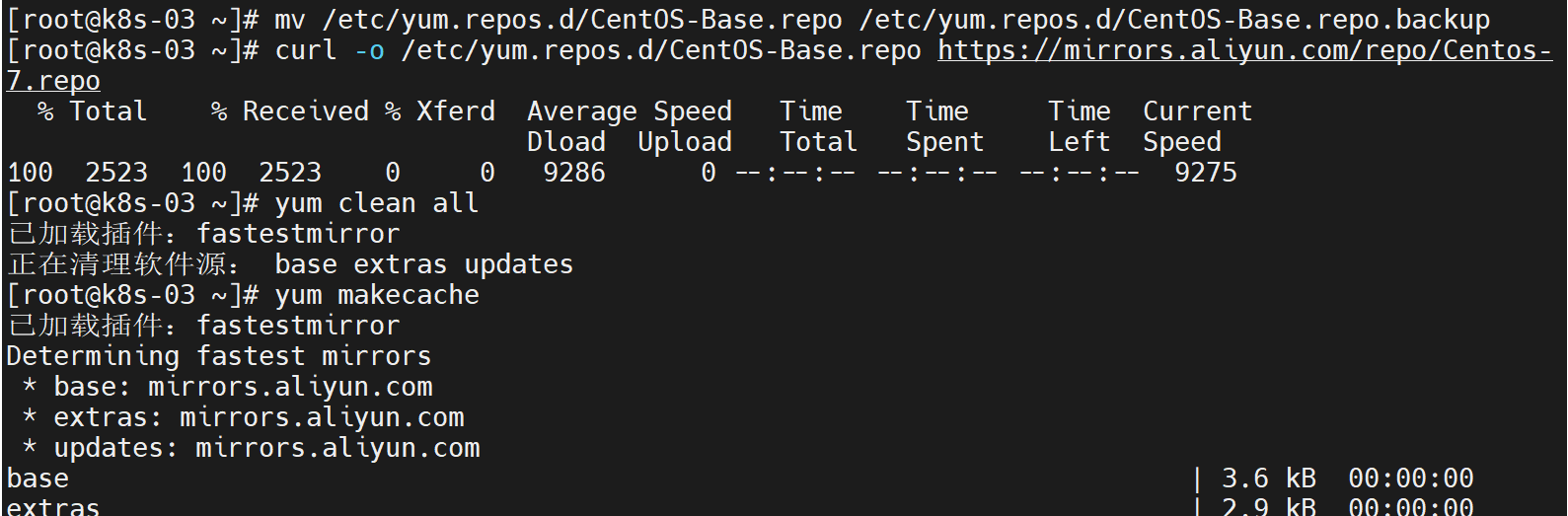

Switch to a yum mirror in China

Many of the Aliyun yum mirror links didn't work for me; I'm not sure why.

##########Run on all three nodes##########

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum clean all

yum makecache

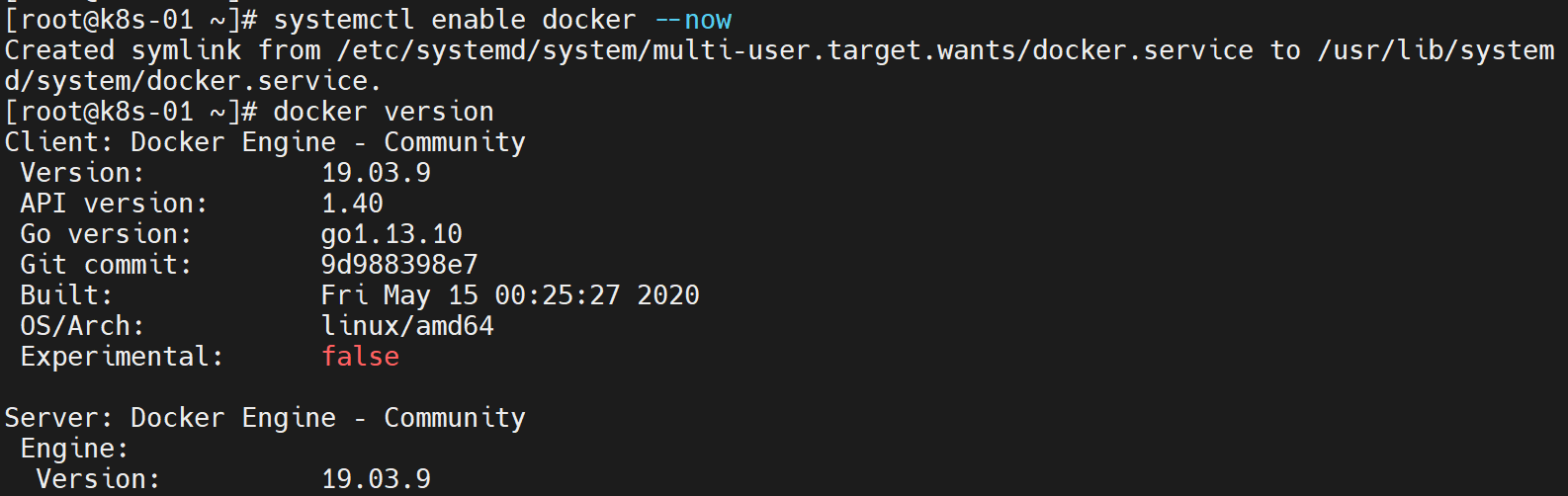

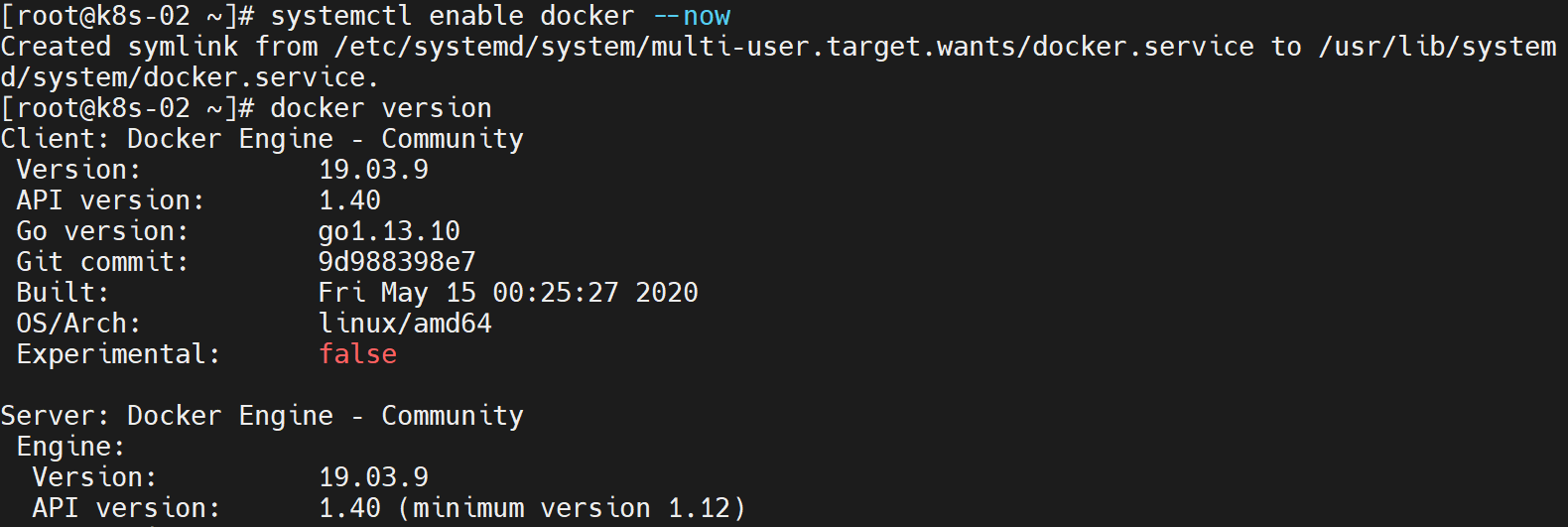

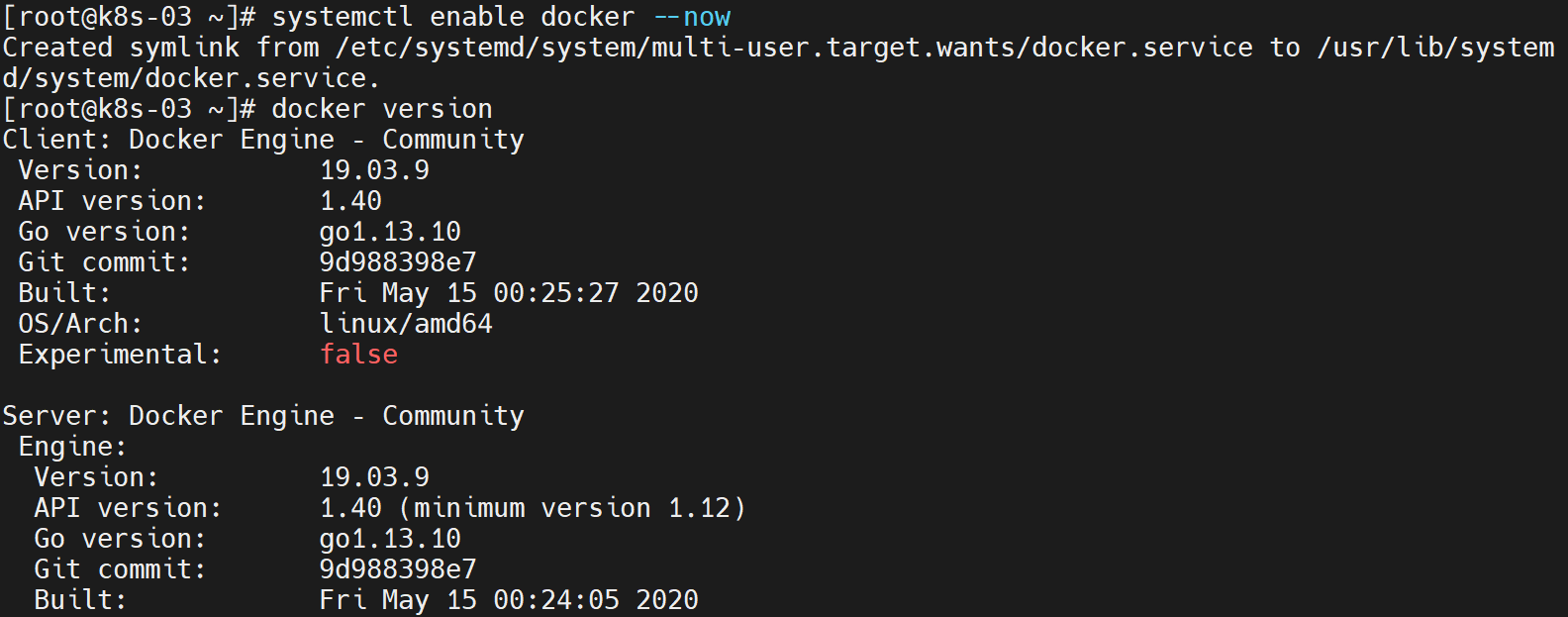

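Install Docker

Version installed: 19.03.9. I'll try the latest version later.

The Aliyun mirror seems to be struggling; downloads were very slow.

##########Run on all three nodes##########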

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

yum install -y docker-ce-3:19.03.9-3.el7.x86_64 docker-ce-cli-3:19.03.9-3.el7.x86_64 containerd.io

## Start Docker and enable it at boot

systemctl enable docker --now

## Check the version

docker version

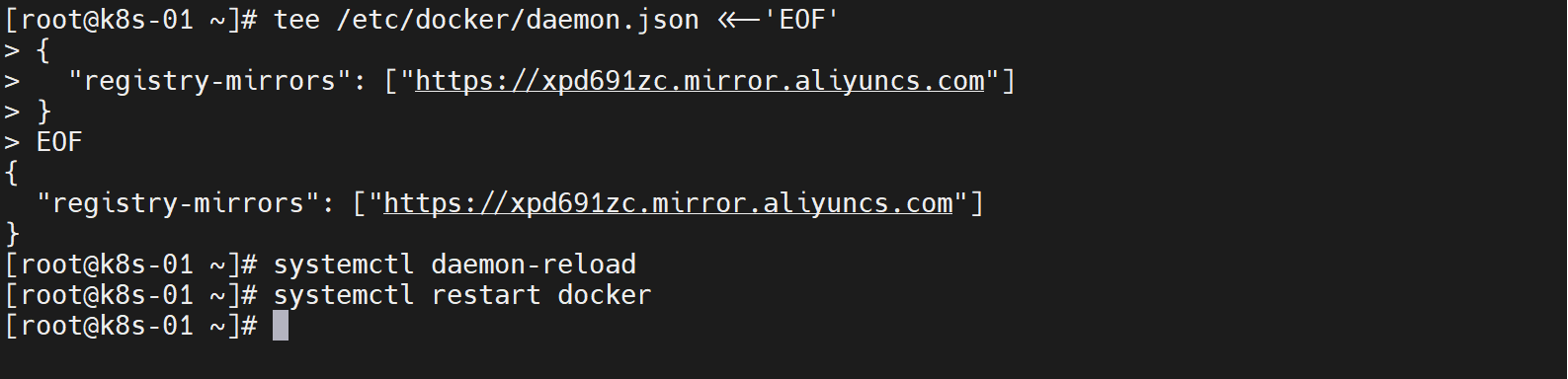

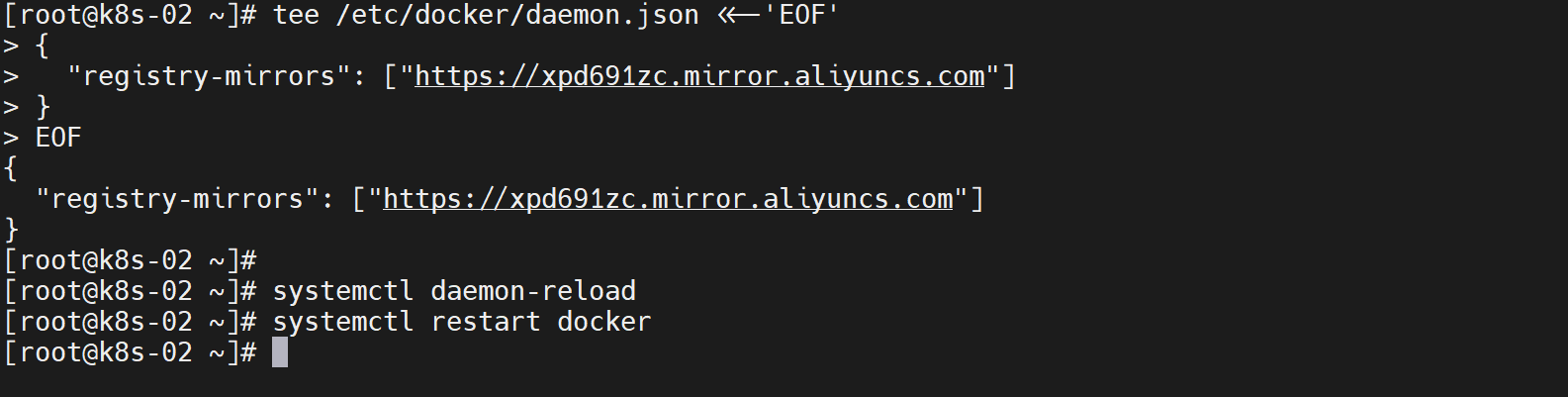

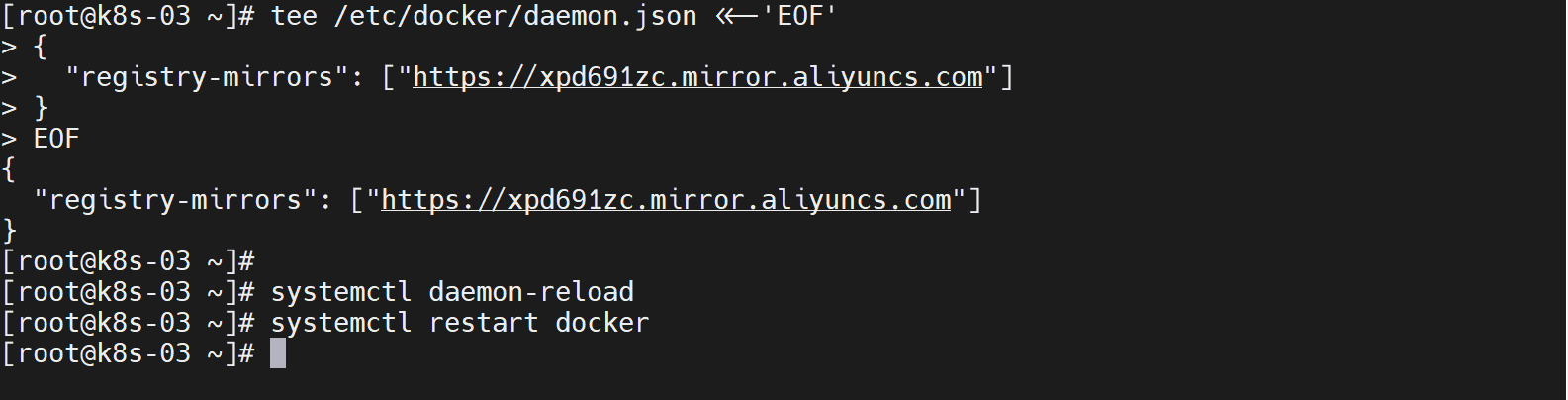

Configure a registry mirror to speed up image pulls

##########Run on all three nodes##########

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xpd691zc.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl restart docker

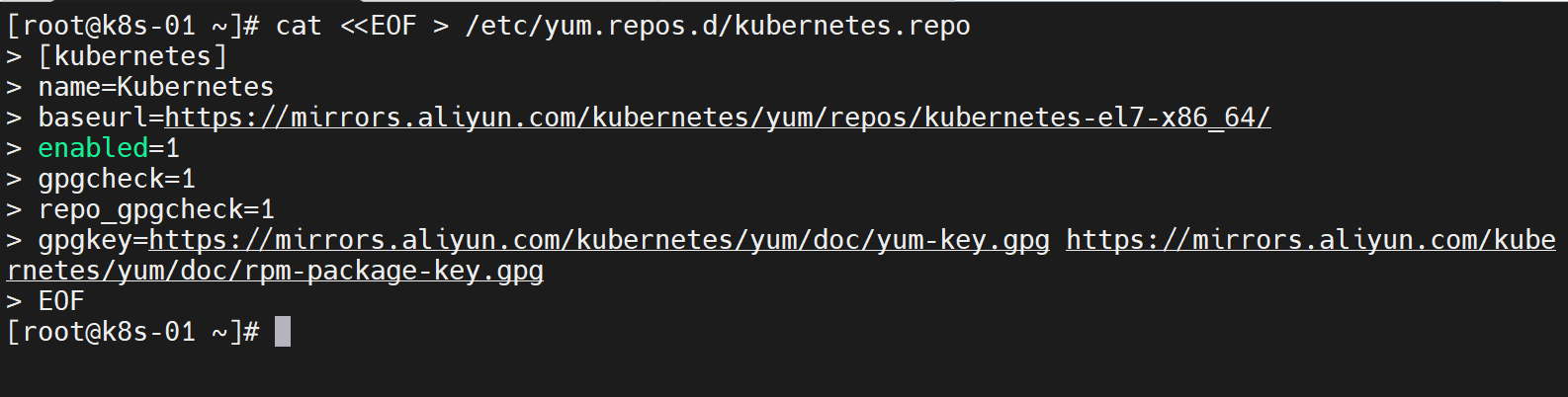

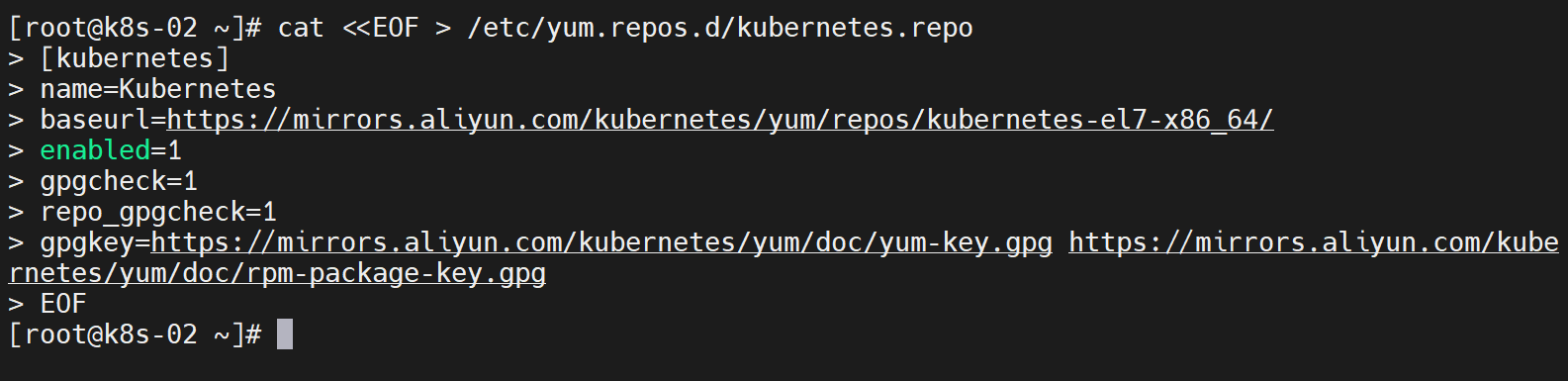

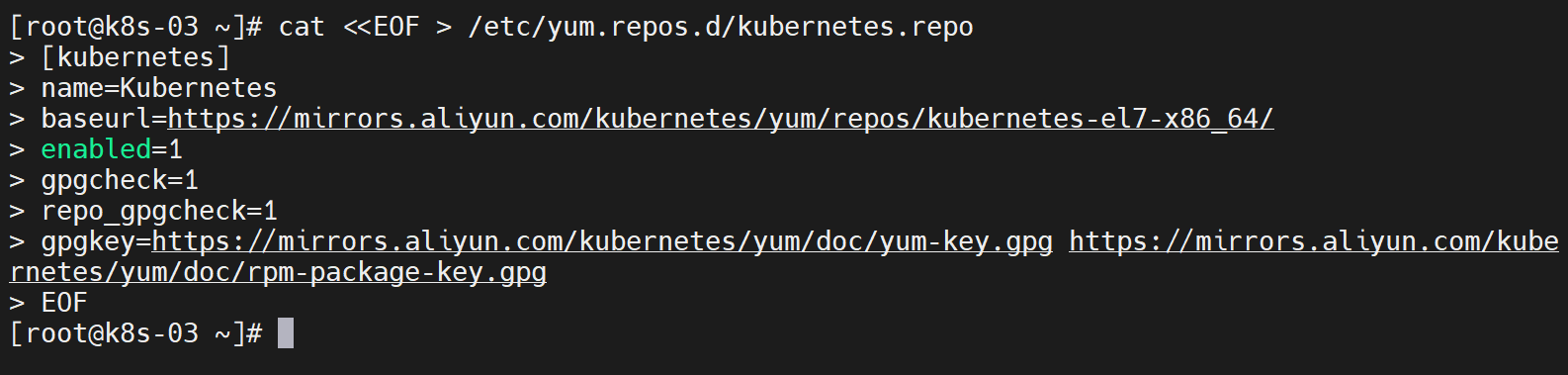
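kubeadm init warns (preflight check IsDockerSystemdCheck) when Docker uses the cgroupfs driver, and the Kubernetes docs recommend the systemd cgroup driver on systemd hosts, with kubelet and the runtime using the same driver. A sketch of daemon.json that keeps the mirror above and adds that setting; write_daemon_json and its path argument are hypothetical. Apply it before kubeadm init or join: kubelet refuses to start when its cgroup driver differs from Docker's, so switching Docker on an already-joined node also means reconfiguring kubelet.

```shell
# Write daemon.json with the registry mirror used above plus the systemd cgroup
# driver. The path argument is a hypothetical override for trying it elsewhere.
write_daemon_json() {
    local path="${1:-/etc/docker/daemon.json}"
    mkdir -p "$(dirname "$path")"
    cat > "$path" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://xpd691zc.mirror.aliyuncs.com"]
}
EOF
}
```

Then: write_daemon_json && systemctl restart docker && docker info --format '{{.CgroupDriver}}', which should print systemd.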

Install the core k8s components

Configure the k8s yum repository

Aliyun mirror page: https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.6ad71b11m60Bji

##########Run on all three nodes##########

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

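Install the core components

This Aliyun mirror is very slow as well. The Aliyun mirror page notes that the GPG check on the repository index may fail, hence --nogpgcheck. After systemctl enable --now, kubelet restarts every few seconds until kubeadm init or kubeadm join gives it a configuration; that is expected.

##########Run on all three nodes##########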

yum install -y --nogpgcheck kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0

systemctl enable kubelet --now

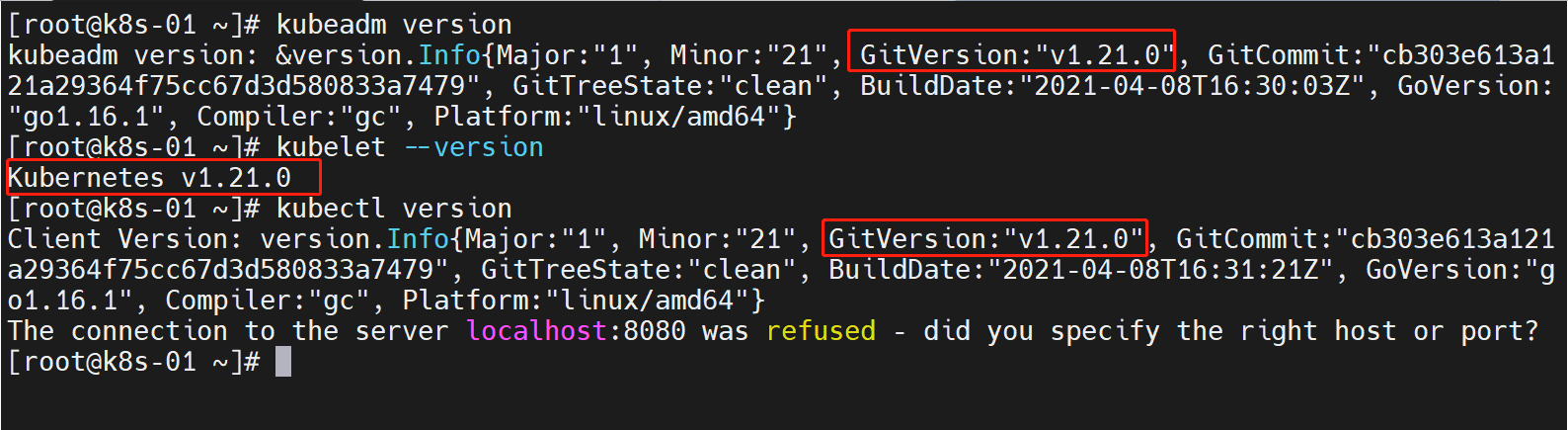

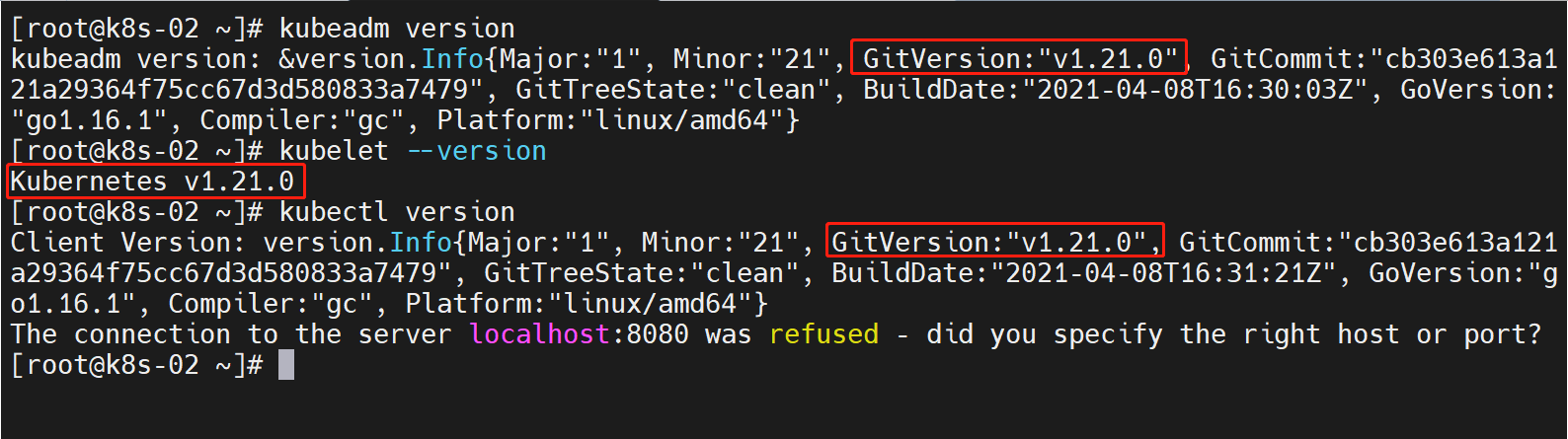

## Check versions

kubeadm version

kubelet --version

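kubectl version --client   # plain kubectl version also queries the API server, which does not exist until kubeadm init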
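All three packages were pinned to 1.21.0, and a mismatch between them is easy to miss in the raw version output. A sketch that extracts the versions and compares them; extract_version, versions_match and check_k8s_versions are hypothetical helpers, and check_k8s_versions assumes the three binaries are on PATH.

```shell
# Print the first vMAJOR.MINOR.PATCH token in the input, e.g. from
# "Kubernetes v1.21.0" (kubelet --version) or kubectl's client version line.
extract_version() {
    grep -Eo 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# Succeed only if all arguments are non-empty and identical.
versions_match() {
    local first="$1" v
    [ -n "$first" ] || return 1
    for v in "$@"; do
        [ "$v" = "$first" ] || return 1
    done
}

# Compare the installed kubeadm, kubelet and kubectl versions on this node.
check_k8s_versions() {
    local a b c
    a=$(kubeadm version -o short)
    b=$(kubelet --version | extract_version)
    c=$(kubectl version --client | extract_version)
    echo "kubeadm=$a kubelet=$b kubectl=$c"
    versions_match "$a" "$b" "$c"
}
```

Run check_k8s_versions on each node after the yum install; it fails if any of the three differs.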

初始化 Master

下载核心镜像

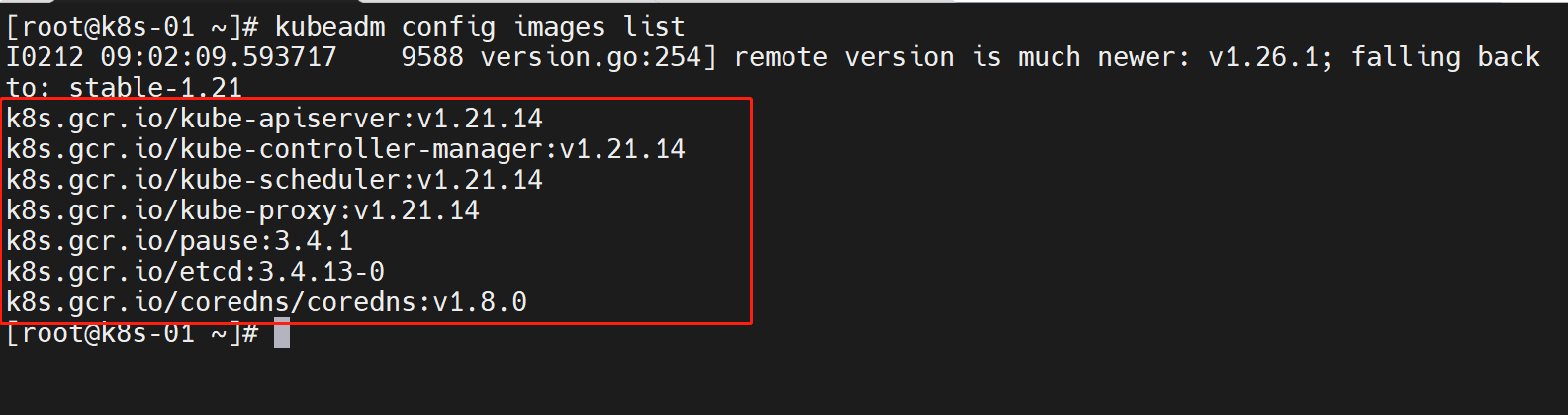

查看需要下载镜像列表

##########第一台执行##########

kubeadm config images list

##########Output##########

k8s.gcr.io/kube-apiserver:v1.21.14

k8s.gcr.io/kube-controller-manager:v1.21.14

k8s.gcr.io/kube-scheduler:v1.21.14

k8s.gcr.io/kube-proxy:v1.21.14

k8s.gcr.io/pause:3.4.1

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns/coredns:v1.8.0

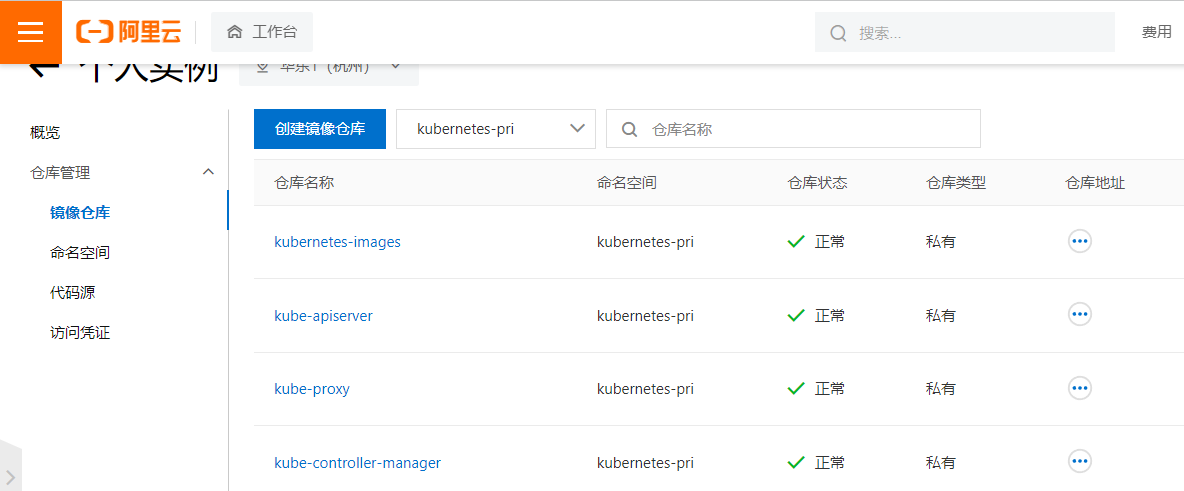

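Prepare these images

- Find and download these images (k8s.gcr.io is often hard to reach from within China)

- Push them to a personal Alibaba Cloud image registry (setting one up is free)

- Pull them onto the nodes (not strictly every node: the workers mainly need kube-proxy and pause, plus coredns if it gets scheduled there)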

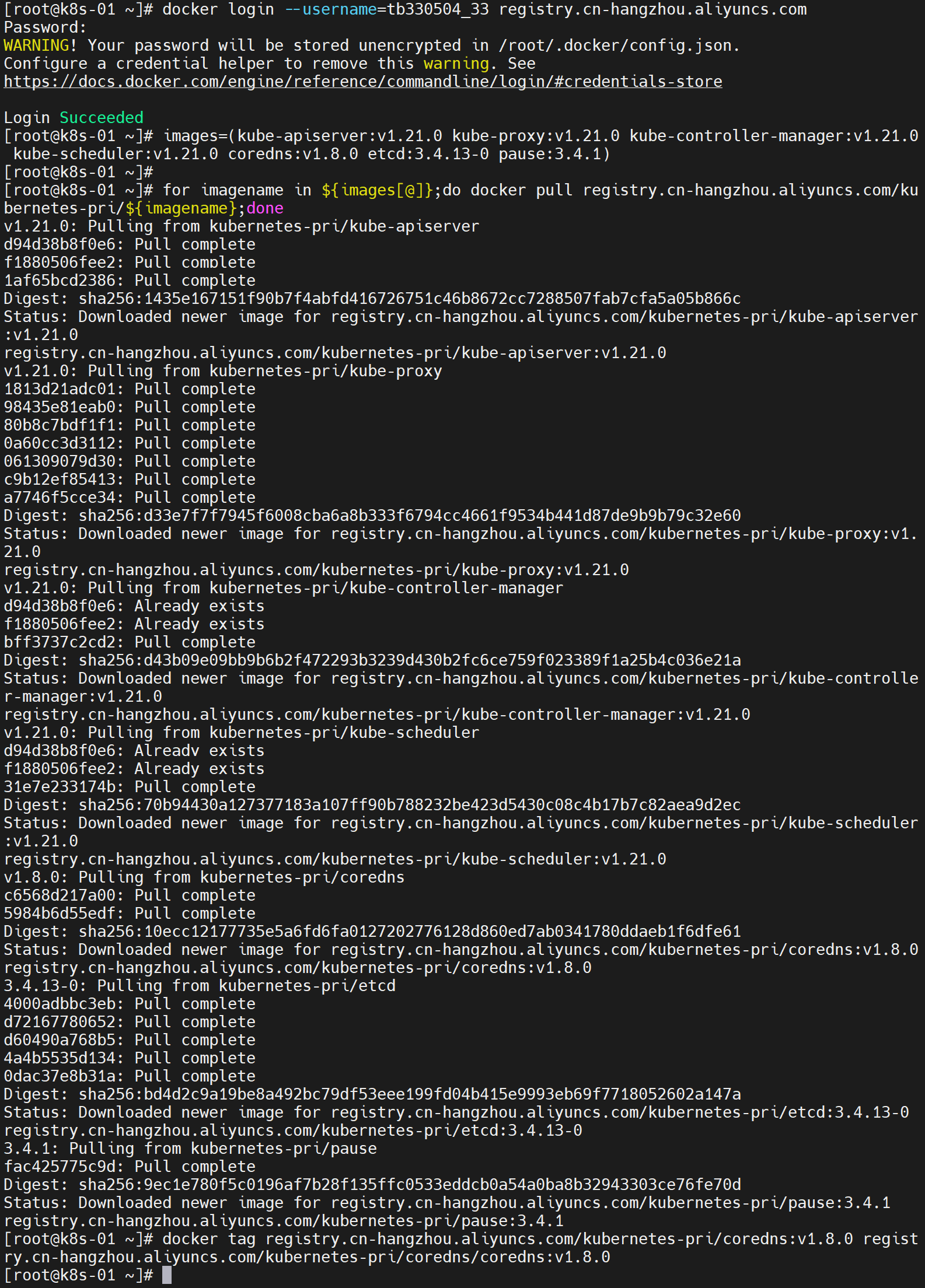

Pull the images locally

The public address of my private Alibaba Cloud registry is: registry.cn-hangzhou.aliyuncs.com/kubernetes-pri/

##########Run on every node##########

docker login --username=tb330504_33 registry.cn-hangzhou.aliyuncs.com

##########Run on every node##########

images=(kube-apiserver:v1.21.0 kube-proxy:v1.21.0 kube-controller-manager:v1.21.0 kube-scheduler:v1.21.0 coredns:v1.8.0 etcd:3.4.13-0 pause:3.4.1)

for imagename in "${images[@]}"; do docker pull "registry.cn-hangzhou.aliyuncs.com/kubernetes-pri/${imagename}"; done

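##########Run on every node: kubeadm looks for coredns under the nested coredns/coredns path (see the image list above), so add that tag##########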

docker tag registry.cn-hangzhou.aliyuncs.com/kubernetes-pri/coredns:v1.8.0 registry.cn-hangzhou.aliyuncs.com/kubernetes-pri/coredns/coredns:v1.8.0

Screenshot from the first node

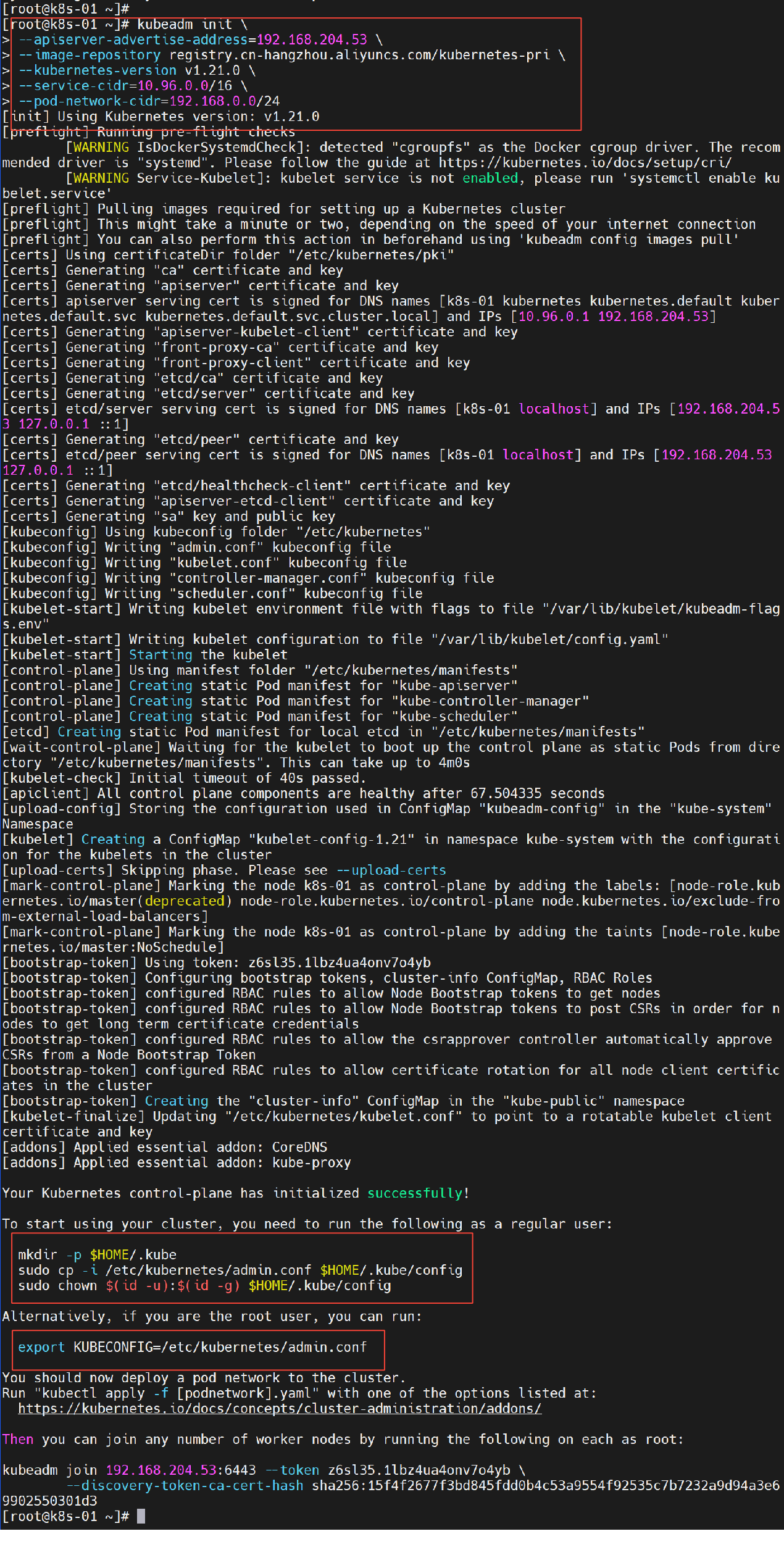
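The pull loop and the separate coredns retag can be combined into one step. A sketch, assuming the private repository and image list used above; expected_image_name, run_cmd and pull_and_retag are hypothetical helpers. It adds the nested coredns/coredns tag that the kubeadm image list shows, and DRY_RUN=1 prints the docker commands instead of running them.

```shell
# Private registry used in this article; replace with your own.
REPO="registry.cn-hangzhou.aliyuncs.com/kubernetes-pri"

# Reference kubeadm expects for an image under --image-repository: coredns sits
# one level deeper (coredns/coredns), everything else directly under REPO.
expected_image_name() {
    case "$1" in
        coredns:*) printf '%s/coredns/%s\n' "$REPO" "$1" ;;
        *)         printf '%s/%s\n' "$REPO" "$1" ;;
    esac
}

# Run a command, or just print it when DRY_RUN=1.
run_cmd() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Pull each image from REPO and add the tag kubeadm expects when it differs.
pull_and_retag() {
    local image src dst
    for image in "$@"; do
        src="$REPO/$image"
        dst=$(expected_image_name "$image")
        run_cmd docker pull "$src" || return 1
        if [ "$src" != "$dst" ]; then
            run_cmd docker tag "$src" "$dst" || return 1
        fi
    done
}
```

After docker login, preview with DRY_RUN=1 pull_and_retag coredns:v1.8.0, then run it without DRY_RUN on the full list: kube-apiserver:v1.21.0 kube-proxy:v1.21.0 kube-controller-manager:v1.21.0 kube-scheduler:v1.21.0 coredns:v1.8.0 etcd:3.4.13-0 pause:3.4.1.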

Initialize the master node

##########Run on the first node##########

kubeadm init \

--apiserver-advertise-address=192.168.204.53 \

--image-repository registry.cn-hangzhou.aliyuncs.com/kubernetes-pri \

--kubernetes-version v1.21.0 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/24

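##########Notes on the options##########

## --apiserver-advertise-address: the IP address the API server advertises (this node's address)

## --image-repository: pull the control-plane images from the private registry instead of k8s.gcr.io

## --kubernetes-version: pin the control plane to v1.21.0, matching the images pulled above

## --service-cidr / --pod-network-cidr: the address ranges for Services and for Pods

## The pod subnet, the Service (load-balancing) subnet and the hosts' own subnet must not overlap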

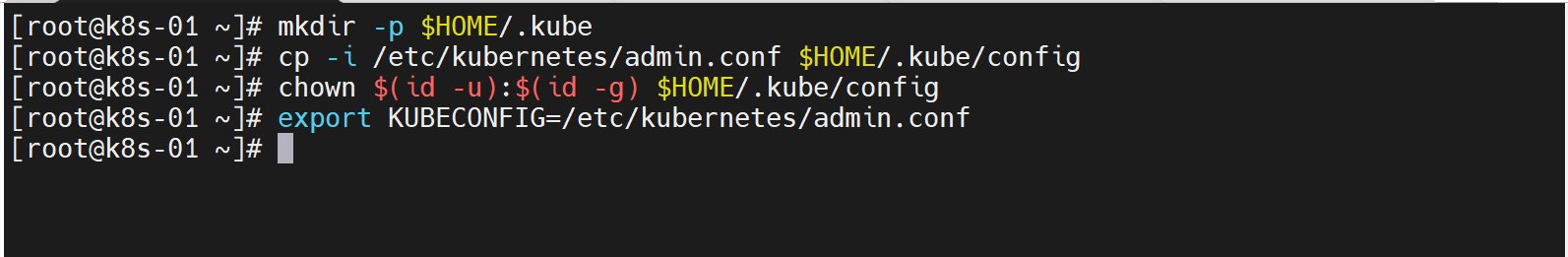
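The no-overlap rule above can be checked mechanically. A sketch in plain shell arithmetic, assuming IPv4 and the 192.168.204.0/24 host network of this article; the function names are hypothetical. It is worth adding the CNI's own pool to the check: as far as I know, when CALICO_IPV4POOL_CIDR is not set, calico-node's default pool is 192.168.0.0/16, which would overlap the host network here, so check what the calico.yaml you apply actually configures. Note too that a /24 pod CIDR is small: if the controller manager allocates per-node Pod ranges (a /24 per node by default for IPv4), it only has room for one node.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of an IPv4 CIDR block.
cidr_range() {
    local ip="${1%/*}" bits="${1#*/}" base size
    base=$(ip_to_int "$ip")
    size=$(( 1 << (32 - bits) ))
    # Clear the host bits, so 192.168.204.53/24 is treated as 192.168.204.0/24.
    base=$(( base / size * size ))
    echo "$base $(( base + size - 1 ))"
}

# Succeed if two CIDR blocks share at least one address.
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    # Ranges [$1,$2] and [$3,$4] overlap iff each starts before the other ends.
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Check every pair of the given blocks; print each overlapping pair and
# return non-zero if any pair overlaps.
check_cidrs() {
    local status=0 first other
    while [ "$#" -gt 1 ]; do
        first="$1"
        shift
        for other in "$@"; do
            if cidrs_overlap "$first" "$other"; then
                echo "overlap: $first and $other"
                status=1
            fi
        done
    done
    return "$status"
}
```

For the values used above, check_cidrs 10.96.0.0/16 192.168.0.0/24 192.168.204.0/24 && echo "no overlap" succeeds; adding 192.168.0.0/16 to the list reports the overlap with the host network.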

Copy the admin kubeconfig

##########Run on the first node##########

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

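export KUBECONFIG=/etc/kubernetes/admin.conf   # alternative for root suggested by kubeadm init; redundant once the file is copied to $HOME/.kube/config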

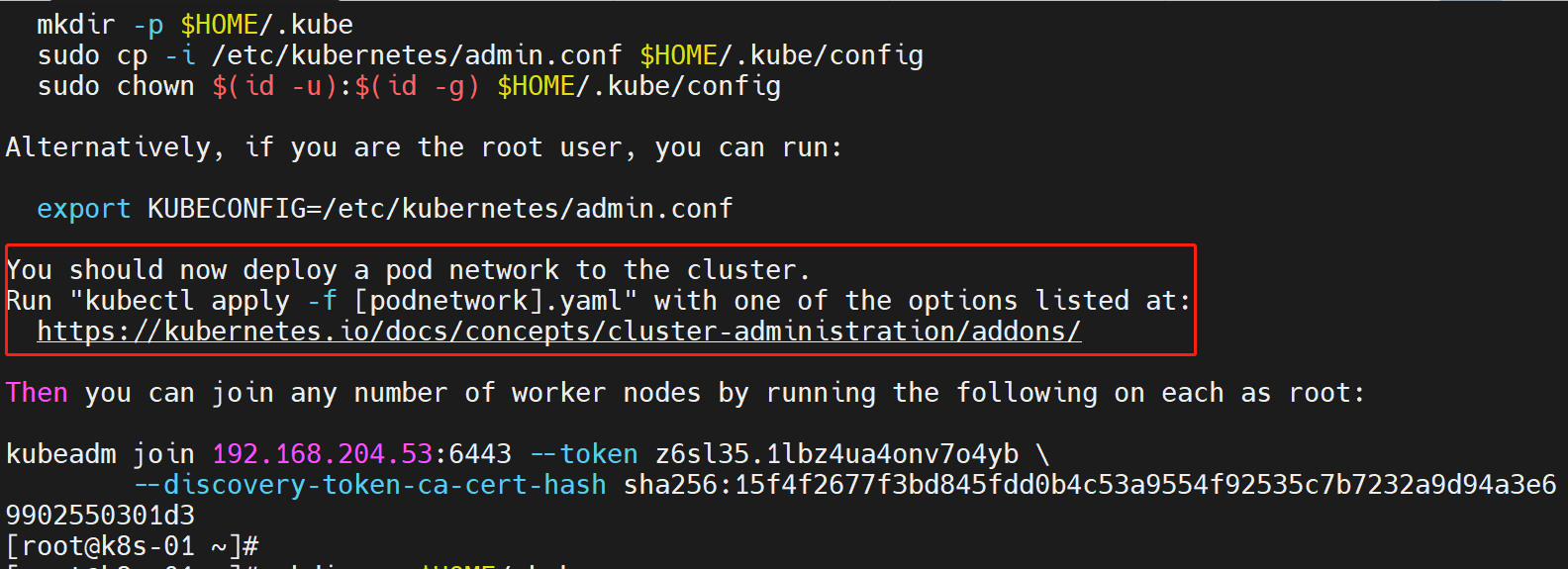

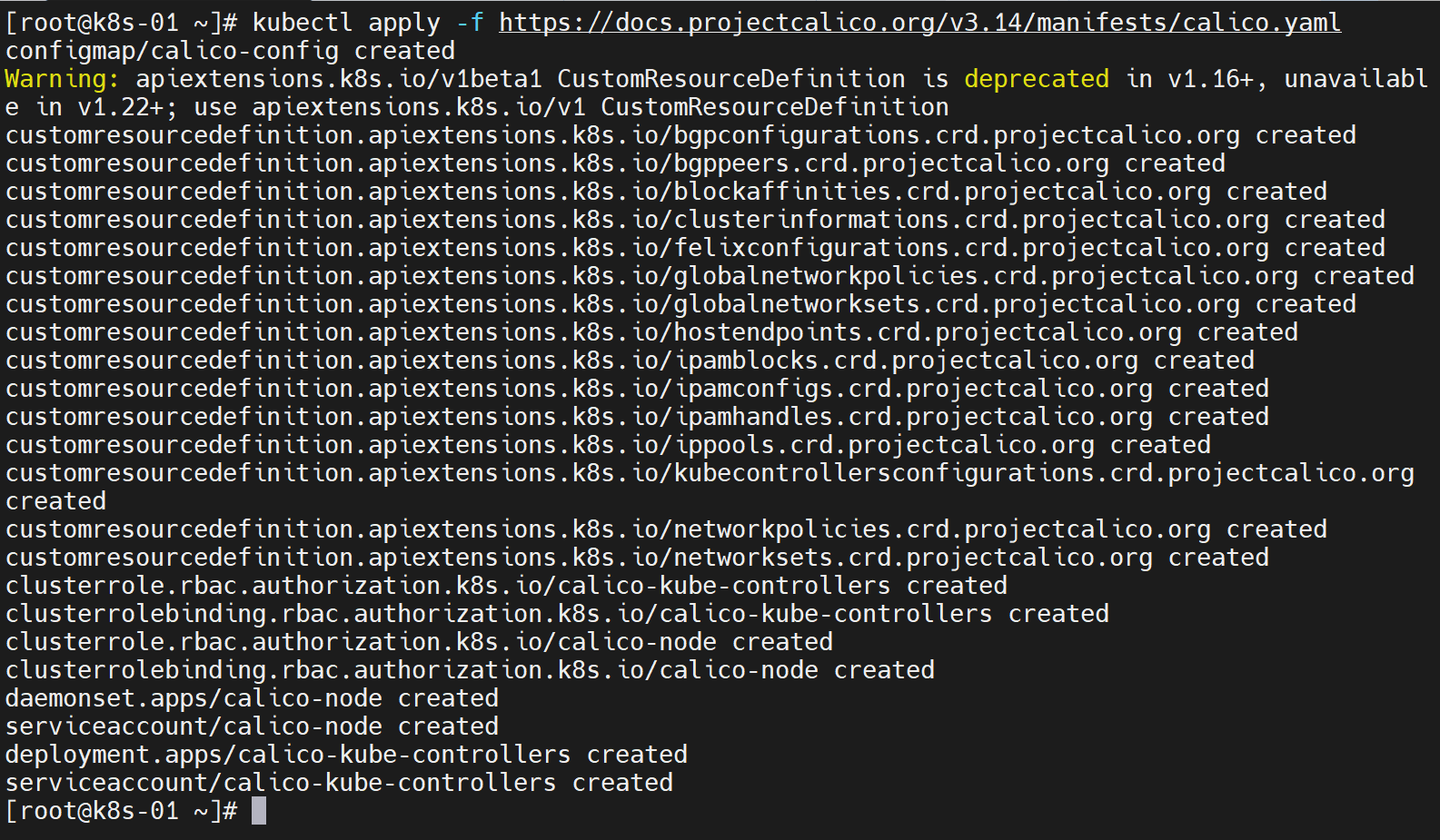

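Deploy the Pod network

The kubeadm init output reminds you to deploy a Pod network add-on at this point; until one is installed, the nodes stay NotReady and the CoreDNS Pods stay Pending. This article uses Calico.

##########Run on the first node##########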

kubectl apply -f https://docs.projectcalico.org/v3.14/manifests/calico.yaml

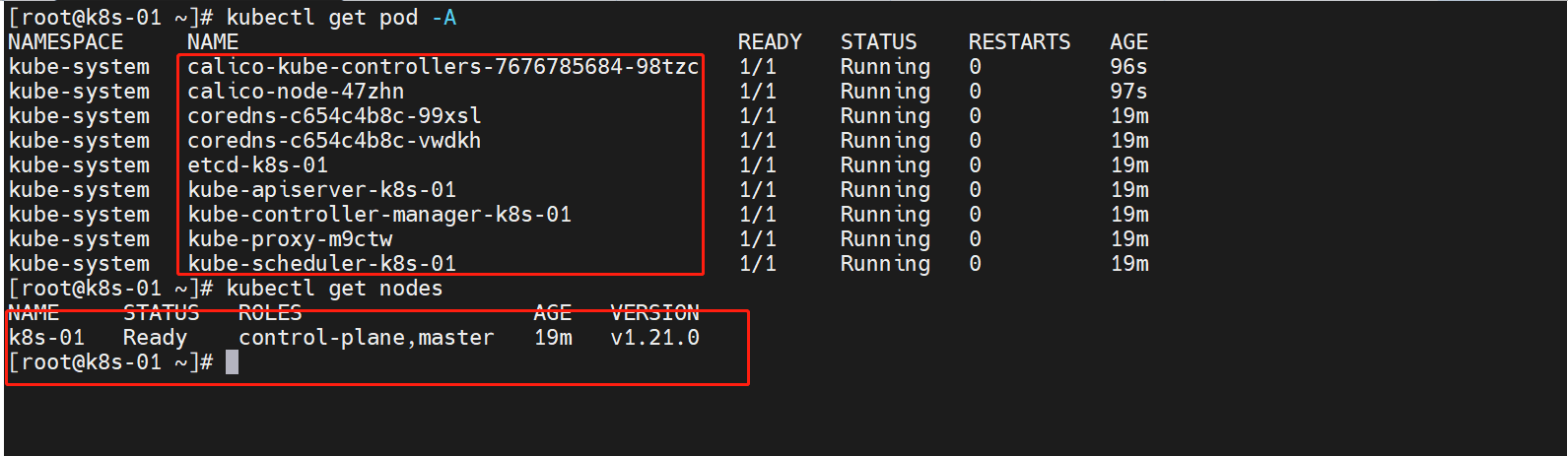

Check the status

##########Run on the first node##########

kubectl get pod -A

kubectl get nodes

All of the cluster's control-plane component Pods are up:

- etcd

- kube-apiserver

- kube-controller-manager

- kube-scheduler

The calico plugin, which provides Pod-to-Pod networking, is running normally too.

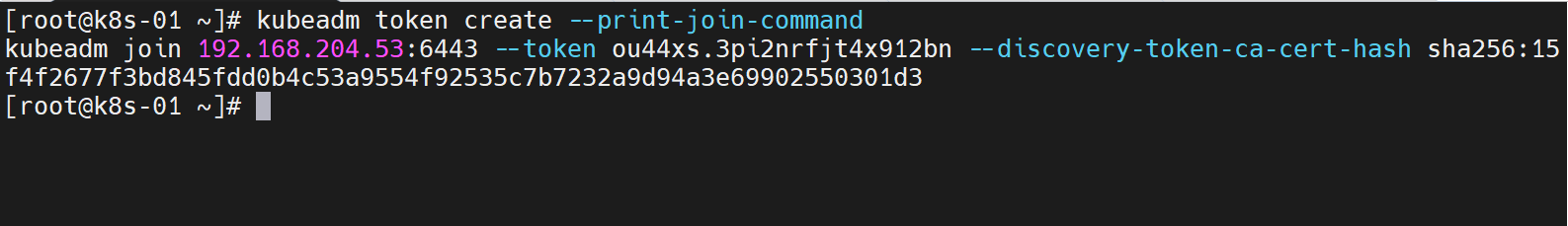
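Reading the STATUS column by eye is fine here; for scripts, or when re-checking after the workers join, a small filter helps. A sketch; check_nodes_ready is a hypothetical helper. kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative that blocks until the nodes are ready.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print every node whose
# STATUS column (2nd field) is not exactly "Ready", e.g. "NotReady" or a cordoned
# "Ready,SchedulingDisabled". Succeeds only if there is at least one node and all are Ready.
check_nodes_ready() {
    awk '$2 != "Ready" { print $1; bad = 1 }
         END { exit ((bad || NR == 0) ? 1 : 0) }'
}
```

For example: kubectl get nodes --no-headers | check_nodes_ready && echo "all nodes Ready".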

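Initialize the workers

Get the join token

kubeadm init prints a join command containing a bootstrap token; running that command is how other nodes join the cluster. Bootstrap tokens expire after 24 hours by default, so if the original one is lost or has expired, create a new one and print the full join command (kubeadm token list shows the existing tokens):

##########Run on the first node##########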

kubeadm token create --print-join-command

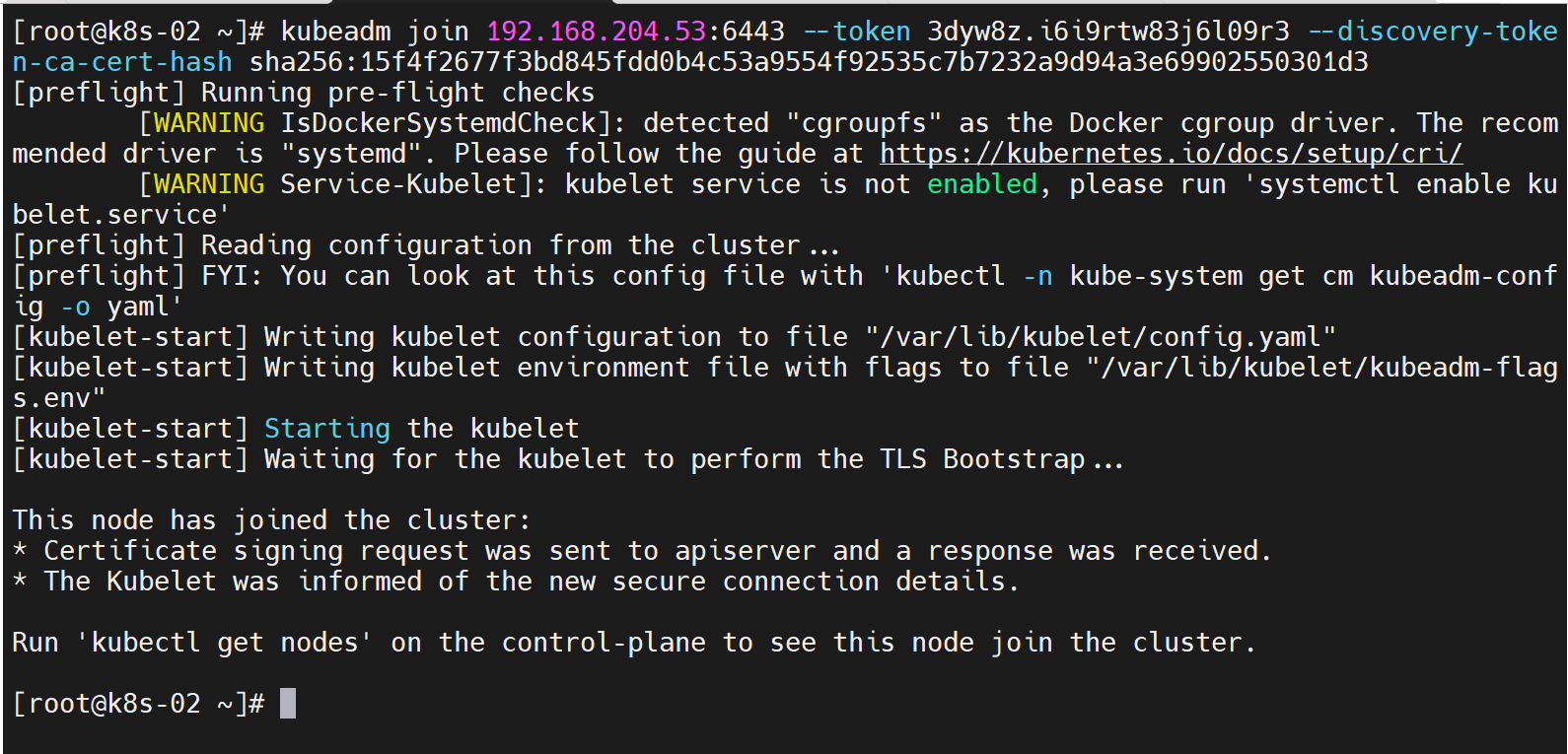

Join the worker nodes

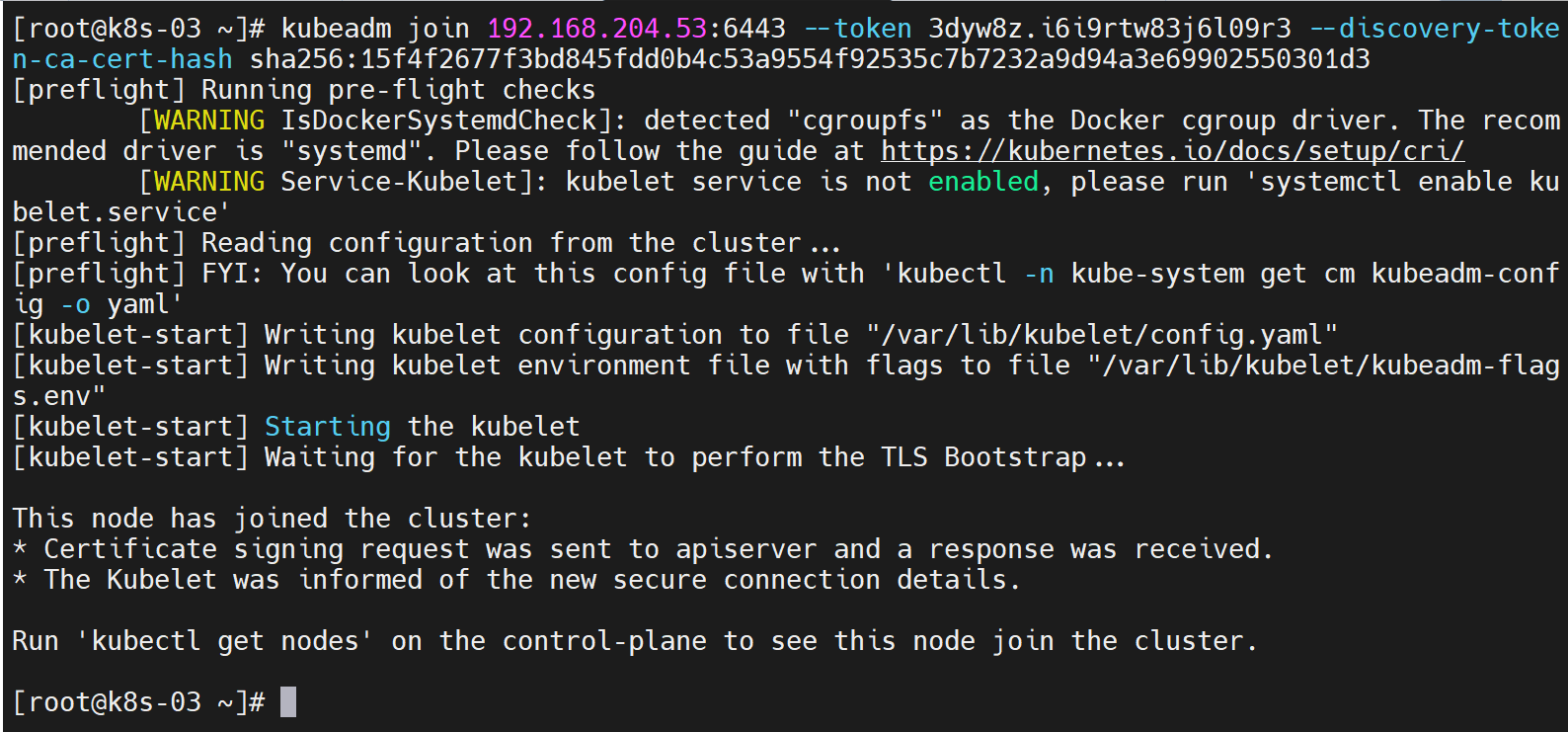

##########Run on the second and third nodes##########

kubeadm join 192.168.204.53:6443 --token 3dyw8z.i6i9rtw83j6l09r3 --discovery-token-ca-cert-hash sha256:15f4f2677f3bd845fdd0b4c53a9554f92535c7b7232a9d94a3e69902550301d3
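Copying the join command between terminals is error-prone, and a truncated token or hash only fails at join time. A sketch that checks the shape of both values first, based on the documented bootstrap token format; check_join_cmd and ca_cert_hash are hypothetical helpers, ca_cert_hash wrapping the openssl pipeline that the kubeadm documentation gives for recomputing the CA certificate hash.

```shell
# Check that a kubeadm join command line carries a well-formed bootstrap token
# ([a-z0-9]{6}.[a-z0-9]{16}) and a sha256 CA certificate hash (64 hex digits).
# This checks the format only, not whether the token is still valid on the cluster.
check_join_cmd() {
    local cmd="$*"
    printf '%s\n' "$cmd" | grep -Eq -- '--token [a-z0-9]{6}\.[a-z0-9]{16}( |$)' \
        || { echo "missing or malformed --token" >&2; return 1; }
    printf '%s\n' "$cmd" | grep -Eq -- '--discovery-token-ca-cert-hash sha256:[0-9a-f]{64}( |$)' \
        || { echo "missing or malformed --discovery-token-ca-cert-hash" >&2; return 1; }
}

# Recompute the CA certificate hash on the control plane, using the openssl
# pipeline from the kubeadm join documentation (assumes the default RSA CA key).
ca_cert_hash() {
    openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On the first node: check_join_cmd $(kubeadm token create --print-join-command) && echo "looks well-formed".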

Cluster verification and configuration

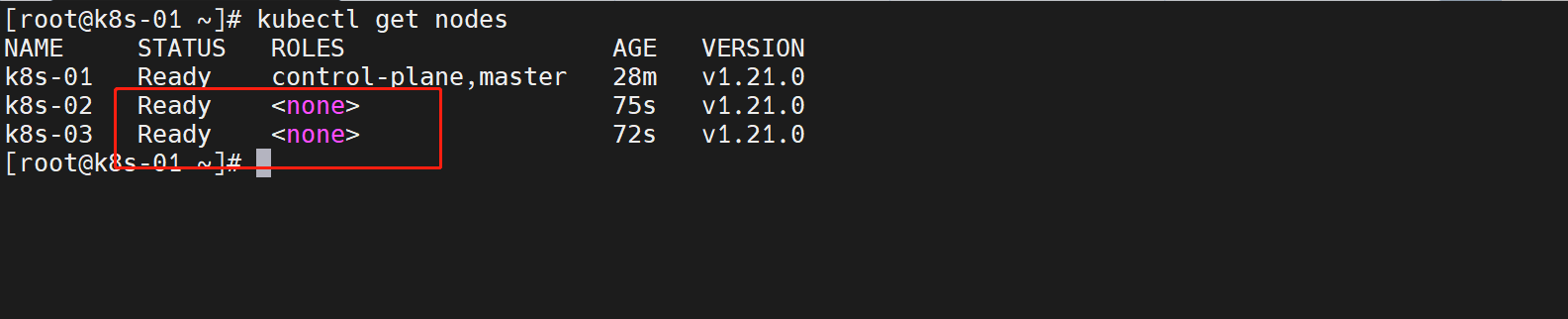

Verify the nodes

##########Run on the first node##########

kubectl get nodes

The other two (worker) nodes have no role in the ROLES column; we can set one with a label.

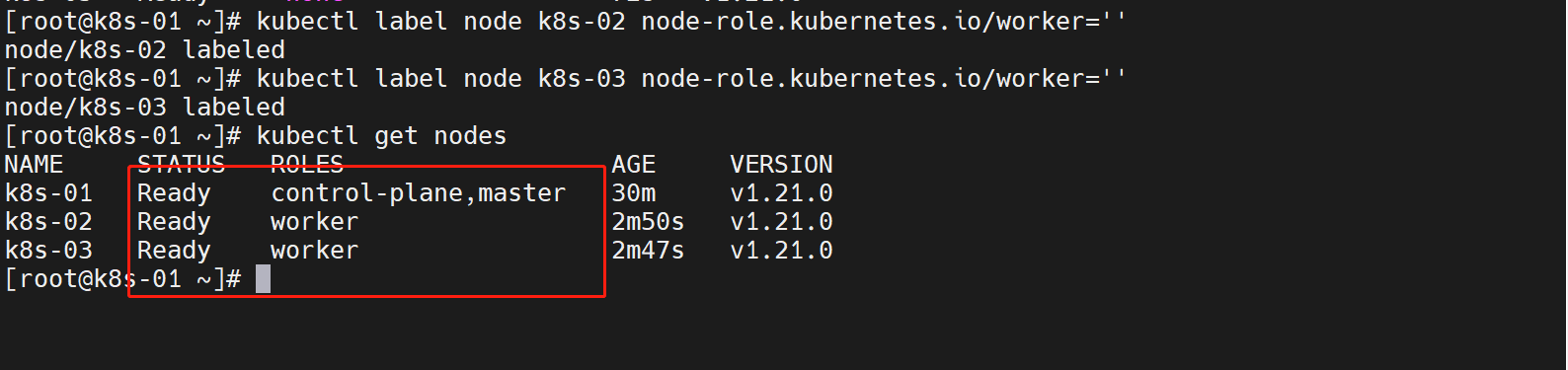

Set node roles

##########Run on the first node##########

kubectl label node k8s-02 node-role.kubernetes.io/worker=''

kubectl label node k8s-03 node-role.kubernetes.io/worker=''

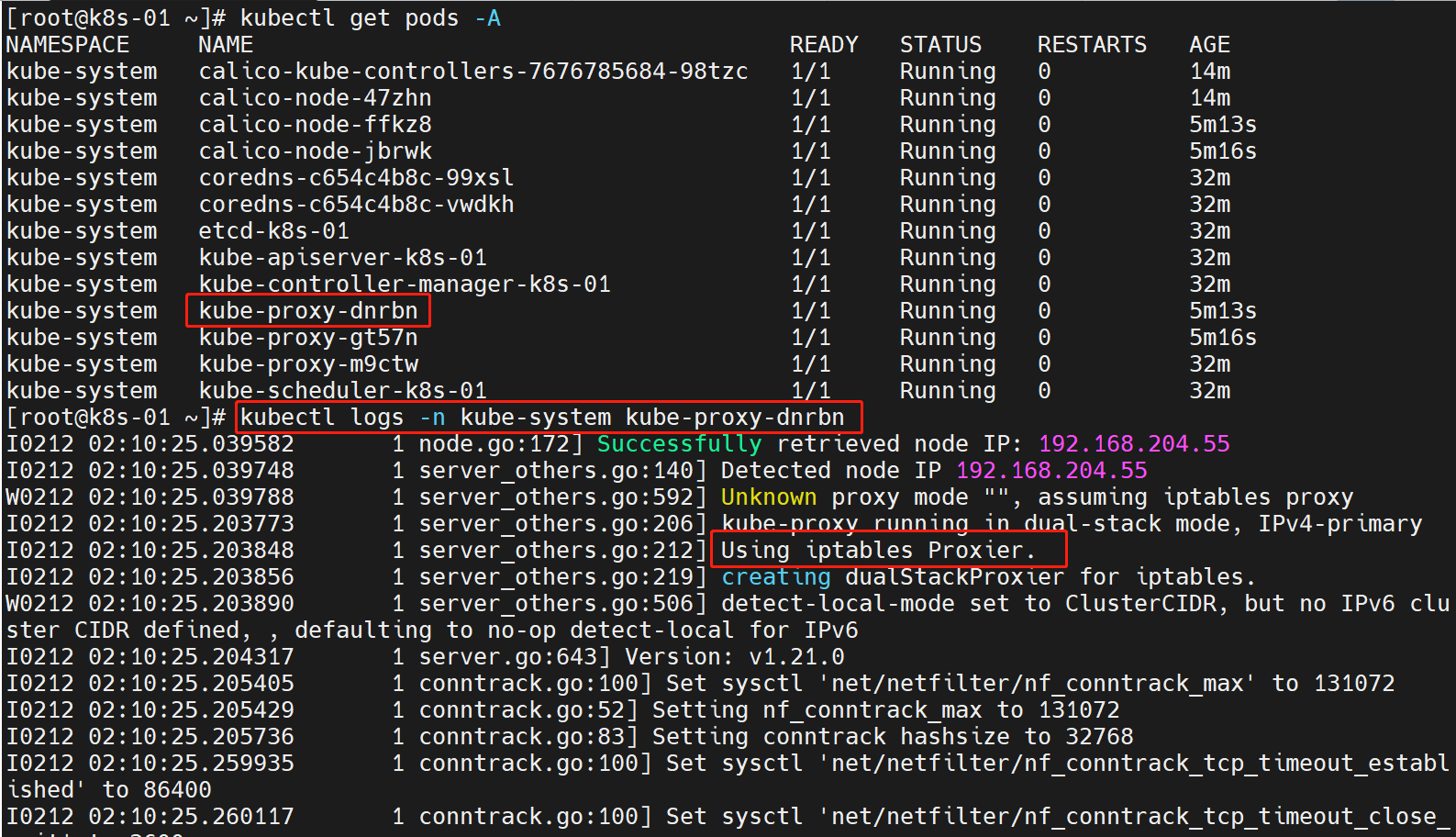

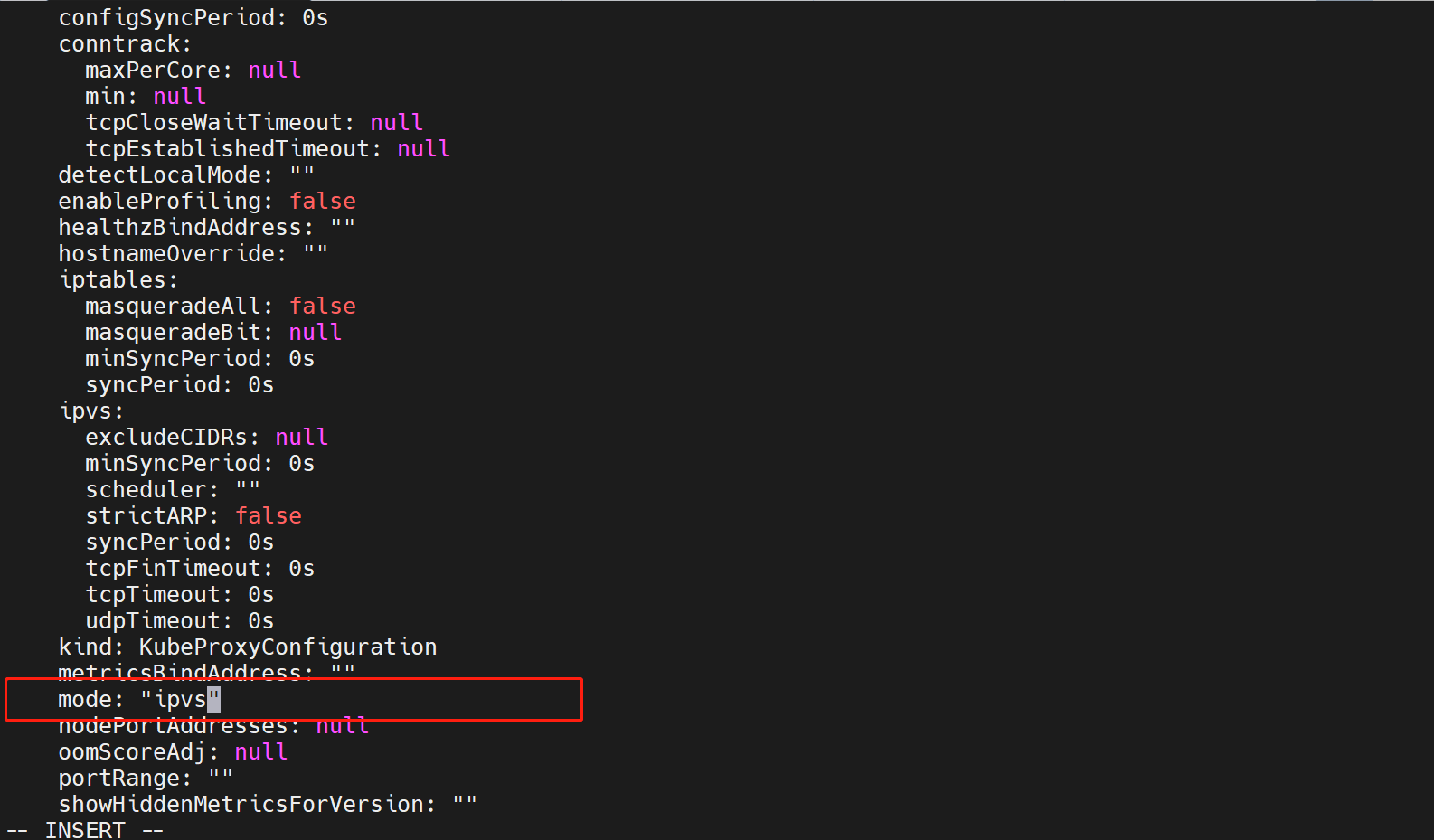

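Switch the cluster to IPVS mode

By default kube-proxy implements Services with iptables rules: the kube-proxy on each node watches Services and Endpoints through the API server and rewrites the iptables rules on that node. Those rules are matched one after another, so performance degrades as the number of Services grows; IPVS uses in-kernel hash tables and scales better.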
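IPVS mode depends on the IPVS kernel modules and, per the kube-proxy IPVS documentation, on ipset; ipvsadm is handy for inspecting the resulting rules. As far as I know, if the modules are missing, kube-proxy in this version falls back to iptables mode, so the ConfigMap change would quietly have no effect. A sketch to run on every node before switching, assuming the CentOS 7 kernel used here; the function names and the root-prefix argument are hypothetical.

```shell
# Kernel modules used by kube-proxy's IPVS mode. On the CentOS 7 3.10 kernel the
# conntrack module is nf_conntrack_ipv4; kernels 4.19 and newer call it nf_conntrack.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Write the module list to modules-load.d (under an optional root prefix, for
# testing) so systemd loads the modules at every boot.
write_ipvs_modules_conf() {
    local root="${1:-}" m
    mkdir -p "$root/etc/modules-load.d"
    : > "$root/etc/modules-load.d/ipvs.conf"
    for m in $IPVS_MODULES; do
        echo "$m" >> "$root/etc/modules-load.d/ipvs.conf"
    done
}

# Load the modules right away and install the userspace tools (run as root).
load_ipvs_now() {
    local m
    for m in $IPVS_MODULES; do
        modprobe "$m" || return 1
    done
    yum install -y ipset ipvsadm
}
```

On each node: write_ipvs_modules_conf && load_ipvs_now; afterwards lsmod | grep ip_vs should list the modules.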

##########Run on the first node##########

# Check which mode kube-proxy is currently using

kubectl get pods -A

kubectl logs -n kube-system kube-proxy-dnrbn

# Edit the kube-proxy ConfigMap and set mode to "ipvs". The default (empty) mode means iptables, which gets slow as the cluster grows.

##########Run on the first node##########

kubectl edit cm kube-proxy -n kube-system

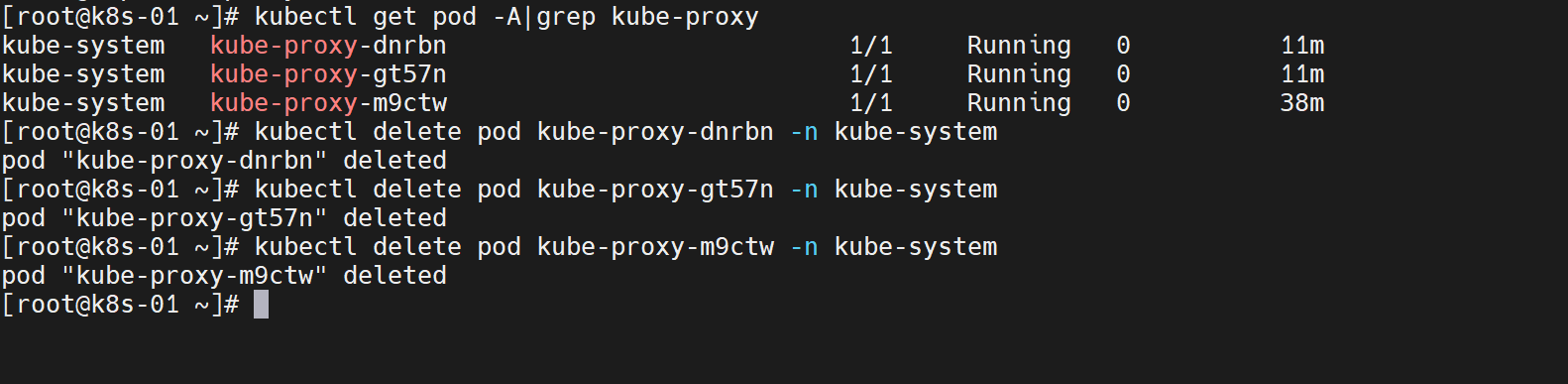

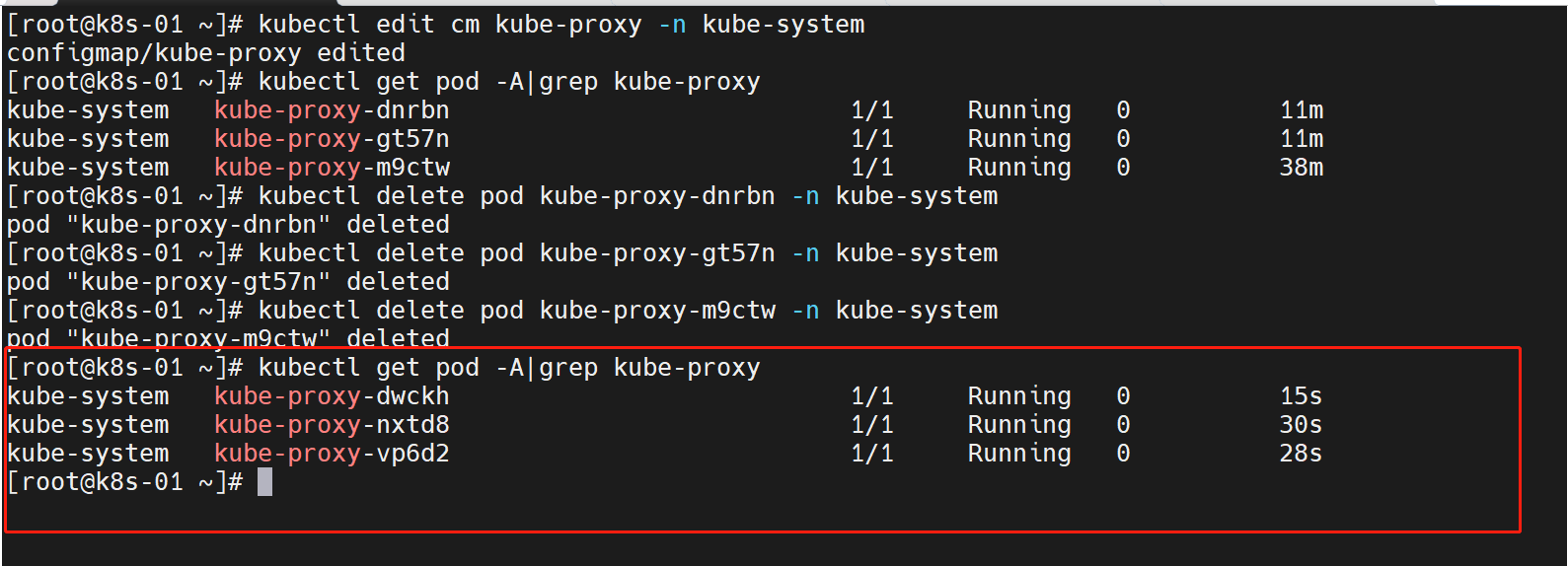
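kubectl edit opens an interactive editor; to script the same change, the ConfigMap can be piped through sed and written back. A sketch, assuming the ConfigMap layout kubeadm generates, where the KubeProxyConfiguration line reads mode: ""; set_proxy_mode_ipvs is a hypothetical helper.

```shell
# Set `mode: "ipvs"` in kube-proxy ConfigMap YAML read on stdin, keeping the
# indentation. kubeadm writes the field as `mode: ""` (empty = iptables on Linux);
# any existing value on a line that starts with "mode:" is replaced.
set_proxy_mode_ipvs() {
    sed 's/^\([[:space:]]*\)mode: .*$/\1mode: "ipvs"/'
}
```

For example: kubectl -n kube-system get cm kube-proxy -o yaml | set_proxy_mode_ipvs | kubectl replace -f -, then restart the kube-proxy Pods as below. Looking at the piped output first (without the final kubectl replace) is a good idea, since the sed rewrites every line that starts with mode:.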

# Restart all kube-proxy Pods so they load the new configuration

##########Run on the first node##########

kubectl get pod -A|grep kube-proxy

kubectl delete pod kube-proxy-dnrbn -n kube-system

kubectl delete pod kube-proxy-gt57n -n kube-system

kubectl delete pod kube-proxy-m9ctw -n kube-system

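# The deleted Pods are recreated automatically because kube-proxy runs as a DaemonSet; kubectl delete pod -n kube-system -l k8s-app=kube-proxy restarts them all in one go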

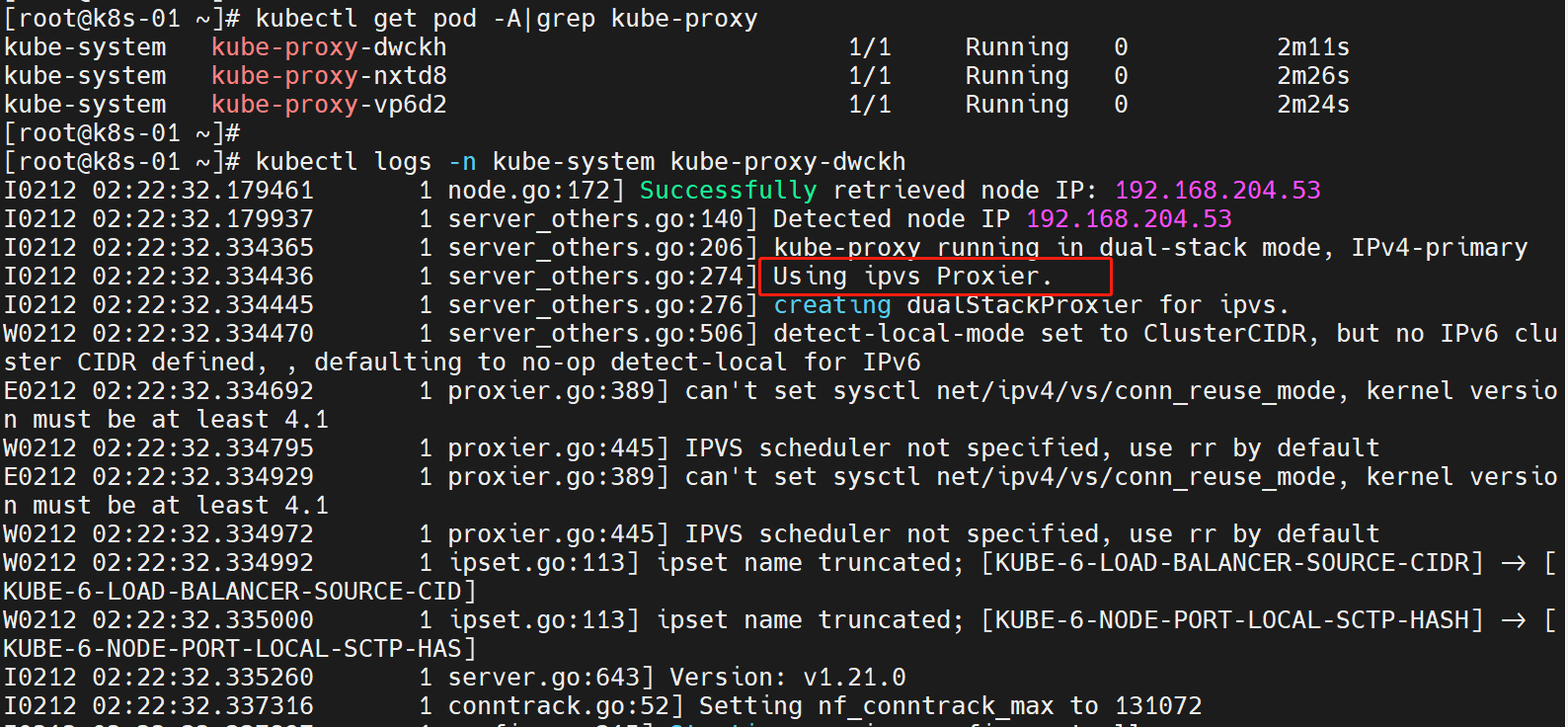

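# Verify: the log of a new kube-proxy Pod (its name changes after the restart) should now mention the ipvs proxier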

kubectl logs -n kube-system kube-proxy-dwckh

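Installation summary

Bootstrap workflow

Bootstrapping a cluster with kubeadm is quite convenient and saves a lot of certificate work. The general flow is:

- Prepare the servers and make sure they can reach each other over the network

- Prepare the environment on every server

- Install Docker. Newer Kubernetes releases no longer support Docker directly (dockershim was removed in v1.24); I'll study this in detail tomorrow

- Install the required components (kubeadm, kubelet, kubectl) on every server

- Prepare the control-plane component images for the matching version (network restrictions can sometimes make them hard to download)

- Create the cluster directly with kubeadm init and kubeadm join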

Command summary

## Check versions

kubeadm version

kubelet --version

kubectl version

## Initialize the control plane

kubeadm init \

--apiserver-advertise-address=192.168.204.53 \

--image-repository registry.cn-hangzhou.aliyuncs.com/kubernetes-pri \

--kubernetes-version v1.21.0 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/24

## List all Pods

kubectl get pod -A

## List all nodes in the cluster

kubectl get nodes

## Create a new token and print the kubeadm join command for worker nodes

kubeadm token create --print-join-command

## Give a node a role label

kubectl label node k8s-02 node-role.kubernetes.io/worker=''

## View a Pod's logs

kubectl logs -n kube-system kube-proxy-dnrbn

## Delete a Pod

kubectl delete pod kube-proxy-dnrbn -n kube-system

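A few tips

- Once a basic k8s cluster is up, take snapshots of the three VMs so you can simply restore them for later study. A binary installation is next on the list, and taking regular snapshots is a good habit.

- The pod subnet, the Service subnet and the host subnet must never overlap.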
