ICLR论文2

文章目录

Decomposed Prompting: A Modular Approach for Solving Complex Tasks

Decomposed Prompting: A Modular Approach for Solving Complex Tasks is a research paper that proposes a new method for using large language models (LLMs) to perform complex tasks by breaking them down into simpler sub-tasks¹³. The paper claims that this method can improve the accuracy and efficiency of LLMs, as well as make them more interpretable and adaptable³.

how it work?

Decomposed prompting works by splitting a complex task into simpler sub-tasks that can be solved by different LLMs with specific prompts¹². For example, if the task is to answer a question about a passage of text, decomposed prompting can divide it into sub-tasks such as extracting relevant sentences, finding entities and relations, and generating an answer². Each sub-task can be assigned to an LLM that has been trained or fine-tuned on a related dataset with a suitable prompt². The outputs of each sub-task can be passed as inputs to the next sub-task until the final answer is obtained².

How do they evaluate this method?

They evaluate this method by applying it to three complex tasks: question answering over long documents, natural language inference with explanations, and multi-hop reasoning over knowledge graphs¹. They compare their method with other prompting methods such as PET and LM-BFF on various datasets and metrics¹. They report that decomposed prompting achieves better or comparable results than other methods, while using fewer parameters and less computation¹.

bing 给的example

Sure, I can show you an example of decomposed prompting. Suppose the task is to answer a question about a passage of text, such as:

Passage: The Sun is the star at the center of the Solar System. It is a nearly perfect sphere of hot plasma, heated to incandescence by nuclear fusion reactions in its core, radiating the energy mainly as light and infrared radiation.

Question: What is the main source of energy for the Sun?

Decomposed prompting can break down this task into three sub-tasks:

- Extract relevant sentences from the passage that contain information related to the question.

- Find entities and relations in the relevant sentences that are relevant to the question.

- Generate an answer based on the entities and relations.

Each sub-task can be solved by a different LLM with a specific prompt. For example:

- For sub-task 1, we can use an LLM that has been fine-tuned on a sentence extraction dataset with a prompt like “Given a passage and a question, extract one or more sentences from the passage that contain information related to the question.”

- For sub-task 2, we can use an LLM that has been trained on a named entity recognition and relation extraction dataset with a prompt like “Given one or more sentences, find all entities and relations in them.”

- For sub-task 3, we can use an LLM that has been fine-tuned on a question answering dataset with a prompt like “Given one or more entities and relations, generate an answer to the question.”

The outputs of each sub-task can be passed as inputs to the next sub-task until we get the final answer. For example:

- Sub-task 1 output: “heated to incandescence by nuclear fusion reactions in its core”

- Sub-task 2 output: “heated (relation) incandescence (entity) by (relation) nuclear fusion reactions (entity) in (relation) its core (entity)”

- Sub-task 3 output: “The main source of energy for the Sun is nuclear fusion reactions in its core.”

This is how decomposed prompting works. I hope this example helps you understand it better.

ng-a-modular-approach-for-solving-complex-tasks 访问时间 2023/3/18.

Contrastive Corpus Attribution for Explaining Representations

According to the search results123, this paper talks about a new method for explaining unsupervised models that output representation vectors. The method is called Contrastive Corpus Attribution (CCA) and it compares the explicand (the sample being explained) with other samples from different corpora to find out which corpus contributes most to its representation. The paper also proposes a way to visualize the CCA results using word clouds and heat maps.

CCA is a method that uses contrastive corpus similarity as a scalar explanation output for unsupervised models123. Contrastive corpus similarity measures how similar the explicand’s representation is to a reference corpus compared to a foil set of samples. For example, if you want to explain why a sentence belongs to a certain topic, you can use CCA to compare its representation with other sentences from the same topic (reference corpus) and different topics (foil set).

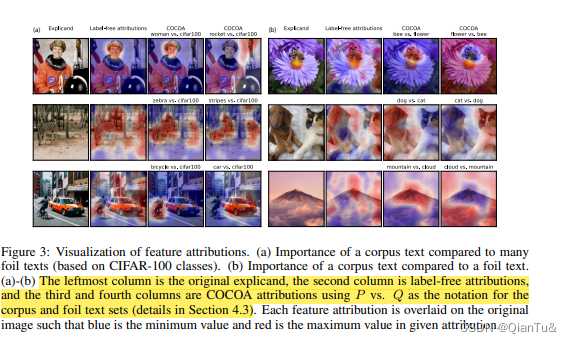

CCA can be combined with many feature attribution methods to generate COntrastive COrpus Attributions (COCOA)123, which identify the features (e.g., words or tokens) that are important for the contrastive corpus similarity. COCOA can be visualized using word clouds and heat maps to show which features contribute positively or negatively to the similarity.

CCA can help draw insights from unsupervised models and explain how they learn from different corpora. For example, CCA can explain how augmentations of the same sentence affect its representation, or how different pre-training tasks influence the representation of downstream tasks.

see an example

下面这组图片是在使用COCOA做定位,最左侧的是原图,越往右,代表随机选择的图,COCOA定位的“P”图,和COCOA定位的“Q”图。

我们发现,使用COCOA,我们可以提出具体的问题。例如,我们可以问:“与其他许多类相比,这幅图像中哪些像素看起来像P?”(图3a)。我们在前三幅图像中评估了一些这样的问题。在宇航员的图像中,我们可以看到女人的脸和火箭被定位了。同样,在既有自行车又有汽车的图像中,我们可以对自行车或汽车进行定位。在斑马图像中,通过使用旨在识别 "斑马 "或 "条纹 "的语料库文本,我们能够对斑马进行定位。最后,在最后三幅图像中,我们发现我们可以制定具体的对比性问题,问:“这幅图像中哪些像素看起来像P而不是Q?”(图3b)。这样做,我们成功地识别了一只蜜蜂而不是一朵花,一只狗而不是一只猫,以及一座山而不是云(反之亦然)。

相关文章

- EasyCVR对接华为iVS订阅摄像机和用户变更请求接口介绍

- 精选 | 腾讯云CDN内容加速场景有哪些?

- 模块化网络防止基于模型的多任务强化学习中的灾难性干扰

- 用搜索和注意力学习稳健的调度方法

- 用于多变量时间序列异常检测的学习图神经网络

- 助力政企自动化自然生长,华为WeAutomate RPA是怎么做到的?

- 使用腾讯轻量云搭建Fiora聊天室

- TSRC安全测试规范

- 云计算“功守道”

- 助力成本优化,腾讯全场景在离线混部系统Caelus正式开源

- Flink 利器:开源平台 StreamX 简介

- 腾讯云实践 | 一图揭秘腾讯碳中和?解决方案

- 深度学习中的轻量级网络架构总结与代码实现

- 信息系统项目管理师(高项复习笔记三)

- Adobe国际认证让科技赋能时尚

- c++该怎么学习(面试吃土记)

- 面试官问发布订阅模式是在问什么?

- 面试官:请实现一个通用函数把 callback 转成 promise

- 空中悬停、翻滚转身、成功着陆,我用强化学习「回收」了SpaceX的火箭

- 中山大学林倞解读视觉语义理解新趋势:从表达学习到知识及因果融合