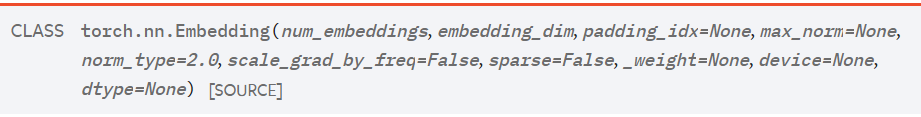

torch.nn.Embedding

Parameters

- num_embeddings (int) – size of the dictionary of embeddings

- embedding_dim (int) – the size of each embedding vector

- padding_idx (int, optional) – If specified, the entries at padding_idx do not contribute to the gradient; therefore, the embedding vector at padding_idx is not updated during training, i.e. it remains as a fixed "pad". For a newly constructed Embedding, the embedding vector at padding_idx will default to all zeros, but can be updated to another value to be used as the padding vector.

- max_norm (float, optional) – If given, each embedding vector with norm larger than max_norm is renormalized to have norm max_norm.

- norm_type (float, optional) – The p of the p-norm to compute for the max_norm option. Default 2.

- scale_grad_by_freq (bool, optional) – If given, this will scale gradients by the inverse of frequency of the words in the mini-batch. Default False.

- sparse (bool, optional) – If True, gradient w.r.t. weight matrix will be a sparse tensor. See Notes for more details regarding sparse gradients.
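The behaviour described for padding_idx and max_norm can be checked directly. The following is a minimal sketch, not part of the original post: the variable names (`emb`, `emb_mn`, `ids`) and the choice of `padding_idx=0` and `max_norm=1.0` are assumptions made for illustration only.

```python
import torch
from torch import nn

torch.manual_seed(0)

# --- padding_idx: row 0 is treated as the padding vector (assumed choice) ---
emb = nn.Embedding(num_embeddings=5, embedding_dim=4, padding_idx=0)

# For a newly constructed Embedding, the padding row starts as all zeros.
pad_row_is_zero = bool(torch.all(emb.weight[0] == 0))

# Look up a small batch that contains the padding index, then backpropagate.
ids = torch.tensor([[0, 1, 2], [0, 0, 3]])
emb(ids).sum().backward()

# The padding row receives no gradient; the rows that were looked up do.
pad_grad_is_zero = bool(torch.all(emb.weight.grad[0] == 0))
used_grad_nonzero = bool(torch.all(emb.weight.grad[1:4] != 0))

# --- max_norm: looked-up rows are rescaled so their norm does not exceed it ---
emb_mn = nn.Embedding(5, 4, max_norm=1.0)
out = emb_mn(torch.tensor([1, 2, 3]))
max_row_norm = out.norm(p=2, dim=1).max().item()

print(pad_row_is_zero, pad_grad_is_zero, used_grad_nonzero)
print(max_row_norm)
```

Only the rows that are actually looked up are renormalized under max_norm, and rows whose norm is already below the limit are left unchanged.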

Examples:

import torch

from torch import nn

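embedding = nn.Embedding(5, 4)  # assume the vocabulary has only 5 words and each word vector has 4 dimensions

word = [[1, 2, 3],

[2, 3, 4]]  # each number stands for one word, e.g. {'!': 0, 'how': 1, 'are': 2, 'you': 3, 'ok': 4}

# the indices must lie in the range 0 to 4, because only 5 words were defined above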

embed = embedding(torch.LongTensor(word))

print(embed)

print(embed.size())

Output:

tensor([[[-0.0436, -1.0037, 0.2681, -0.3834],

[ 0.0222, -0.7280, -0.6952, -0.7877],

[ 1.4341, -0.0511, 1.3429, -1.2345]],

[[ 0.0222, -0.7280, -0.6952, -0.7877],

[ 1.4341, -0.0511, 1.3429, -1.2345],

[-0.2014, -0.4946, -0.0273, 0.5654]]], grad_fn=<EmbeddingBackward0>)

torch.Size([2, 3, 4])
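In the output above, the rows for indices 2 and 3 appear in both sequences with identical values, because the layer is a table lookup into its weight matrix. The sketch below illustrates this under stated assumptions: it reuses the sizes from the example, the names `ids`, `same_as_indexing` and `out_of_range_raises` are hypothetical, and the exact exception type for an out-of-range index may differ by PyTorch version and device, so both IndexError and RuntimeError are caught.

```python
import torch
from torch import nn

embedding = nn.Embedding(5, 4)
ids = torch.tensor([[1, 2, 3], [2, 3, 4]])

# The forward pass returns the rows of embedding.weight selected by ids,
# so the output shape is ids.shape followed by embedding_dim.
embed = embedding(ids)
shape = tuple(embed.shape)
same_as_indexing = torch.equal(embed, embedding.weight[ids])

# The same index always maps to the same vector, wherever it appears.
repeated_rows_match = torch.equal(embed[0, 1], embed[1, 0])  # both are index 2

# Valid indices are 0..num_embeddings-1; index 5 is outside the table here.
try:
    embedding(torch.tensor([5]))
    out_of_range_raises = False
except (IndexError, RuntimeError):
    out_of_range_raises = True

print(shape, same_as_indexing, repeated_rows_match, out_of_range_raises)
```

This is why the indices passed in must stay within 0 to 4 for a table constructed with 5 entries.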
