OpenStack + Ceph cluster: cleaning up a pool to fix "pgs: xxx% pgs unknown"

Posted: 2023-04-18 16:54:37

Yesterday I removed OSD nodes without emptying the pools first, and today Ceph went down...

Run

ceph -s

Output:

2022-05-07 08:10:08.273 7f998ddeb700 -1 asok(0x7f9988000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.230947.140297388437176.asok': (2) No such file or directory

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

5 pool(s) have no replicas configured

Reduced data availability: 640 pgs inactive

1/3 mons down, quorum node1,node2

services:

mon: 3 daemons, quorum node1,node2 (age 14h), out of quorum: node1_bak

mgr: node1(active, since 14h), standbys: node2

osd: 6 osds: 6 up (since 14h), 6 in (since 22h)

data:

pools: 5 pools, 640 pgs

objects: 0 objects, 0 B

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 100.000% pgs unknown

640 unknown

The line pgs: 100.000% pgs unknown shows that the state of every PG is unknown.

Run

ceph df

Output:

2022-05-07 08:15:56.872 7f96a3477700 -1 asok(0x7f969c000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.54160.140284839079608.asok': (2) No such file or directory

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 5.2 TiB 5.2 TiB 2.4 GiB 8.4 GiB 0.16

TOTAL 5.2 TiB 5.2 TiB 2.4 GiB 8.4 GiB 0.16

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

images 1 128 0 B 0 0 B 0 0 B

volumes 2 128 0 B 0 0 B 0 0 B

backups 3 128 0 B 0 0 B 0 0 B

vms 4 128 0 B 0 0 B 0 0 B

cache 5 128 0 B 0 0 B 0 0 B

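Every pool shows 0 B stored and 0 B MAX AVAIL.

Since ceph -s reports all 6 OSDs as up, the OSD daemons are running; the pools can simply no longer reach them.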
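To spot this condition at a glance, the POOLS table of ceph df can be filtered for pools whose MAX AVAIL is zero. This is a minimal sketch that assumes the plain-text layout shown above, where MAX AVAIL occupies the last two fields of each row (for example "0 B" or "1.1 TiB"); the function name pools_without_space is hypothetical.

```bash
# Hypothetical helper: print the pools whose MAX AVAIL is 0 in `ceph df` output.
# Assumes MAX AVAIL is the last two fields of each POOLS row ("0 B", "1.1 TiB", ...).
pools_without_space() {
  awk '/^POOLS:/                { in_pools = 1; next }   # pool rows follow this header
       in_pools && $1 == "POOL" { next }                 # skip the column-name row
       in_pools && NF >= 2 && $(NF-1) == "0" { print $1 }'
}

# Usage on a live cluster: ceph df | pools_without_space
```

On the output above every pool would be listed; after the CRUSH map is fixed the list should be empty.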

Check the OSD tree:

ceph osd tree

2022-05-07 08:17:38.461 7f917c55c700 -1 asok(0x7f9174000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.54288.140262693154488.asok': (2) No such file or directory

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-9 0 root vm-disk

-8 0 root cache-disk

-7 0 root hdd-disk

-1 3.25797 root default

-15 1.62898 host computer

1 hdd 0.58600 osd.1 up 1.00000 1.00000

3 hdd 0.16399 osd.3 up 1.00000 1.00000

5 hdd 0.87900 osd.5 up 1.00000 1.00000

-13 1.62898 host controller

0 hdd 0.58600 osd.0 up 1.00000 1.00000

2 hdd 0.16399 osd.2 up 1.00000 1.00000

4 hdd 0.87900 osd.4 up 1.00000 1.00000

-3 0 host node1

-5 0 host node2

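node1 and node2 no longer contain any OSDs. The OSDs now sit under host buckets named computer and controller (the hostnames bound to the external IPs), and the custom roots vm-disk, cache-disk and hdd-disk have weight 0.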
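A likely explanation, which the original does not confirm: with Ceph's default osd_crush_update_on_start = true, each OSD re-registers itself at root=default host=<current hostname> when it starts, so renaming the machines to controller and computer moves their OSDs into new host buckets and out of the custom roots. To see which OSDs ended up under a given host bucket, the ceph osd tree text can be filtered. A sketch, assuming every OSD row carries a device class as in the output above; osds_under_host is a hypothetical name.

```bash
# Hypothetical helper: list the OSDs placed directly under host bucket $1,
# reading plain-text `ceph osd tree` output on stdin.
osds_under_host() {
  awk -v want="$1" '
    $3 == "host" || $3 == "root" { in_host = ($3 == "host" && $4 == want); next }
    in_host && $4 ~ /^osd\./     { print $4 }'
}

# Usage: ceph osd tree | osds_under_host controller
```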

This is probably caused by a wrong CRUSH map. The previously compiled newcrushmap is still in /etc/ceph, so apply it again:

cd /etc/ceph

ceph osd setcrushmap -i newcrushmap
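Because setcrushmap replaces the whole map, it may be worth saving the current one first so the change can be rolled back. A minimal sketch: the function name and backup path are assumptions, while ceph osd getcrushmap -o and ceph osd setcrushmap -i are the standard commands. A compiled map can also be inspected beforehand with crushtool -d newcrushmap -o newcrushmap.txt.

```bash
# Hypothetical wrapper: back up the live CRUSH map, then install a compiled one.
apply_crushmap() {
  local newmap=$1
  local backup=${2:-/etc/ceph/crushmap.backup.$(date +%Y%m%d%H%M%S)}
  if [ ! -f "$newmap" ]; then
    echo "compiled CRUSH map not found: $newmap" >&2
    return 1
  fi
  ceph osd getcrushmap -o "$backup" || return 1   # keep the old map for rollback
  ceph osd setcrushmap -i "$newmap"
}

# Usage:    apply_crushmap /etc/ceph/newcrushmap
# Rollback: ceph osd setcrushmap -i <backup file>
```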

Then run

ceph df

The storage figures are back:

2022-05-07 08:26:48.930 7f946fa78700 -1 asok(0x7f9468000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.54898.140275376729784.asok': (2) No such file or directory

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 5.2 TiB 5.2 TiB 2.4 GiB 8.4 GiB 0.16

TOTAL 5.2 TiB 5.2 TiB 2.4 GiB 8.4 GiB 0.16

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

images 1 128 1.7 GiB 222 1.7 GiB 0.15 1.1 TiB

volumes 2 128 495 MiB 146 495 MiB 0.04 1.1 TiB

backups 3 128 19 B 3 19 B 0 1.1 TiB

vms 4 128 0 B 0 0 B 0 3.5 TiB

cache 5 128 104 KiB 363 104 KiB 0 317 GiB

Run

ceph -s

2022-05-07 08:27:01.426 7f7cf23db700 -1 asok(0x7f7cec000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.54928.140174512107192.asok': (2) No such file or directory

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

5 pool(s) have no replicas configured

Reduced data availability: 128 pgs inactive

application not enabled on 3 pool(s)

1/3 mons down, quorum node1,node2

services:

mon: 3 daemons, quorum node1,node2 (age 14h), out of quorum: node1_bak

mgr: node1(active, since 14h), standbys: node2

osd: 6 osds: 6 up (since 14h), 6 in (since 22h)

data:

pools: 5 pools, 640 pgs

objects: 734 objects, 2.2 GiB

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 20.000% pgs unknown

512 active+clean

128 unknown

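There are still unknown PGs (pgs: 20.000% pgs unknown): 128 of the 640 PGs, which is exactly one 128-PG pool.

This is the result of deleting the OSDs without first cleaning up the vms pool.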
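To confirm which pool the unknown PGs belong to, they can be counted per pool ID: a PG ID has the form <pool id>.<hex>, and the ID column of ceph df maps pool IDs to names (vms is 4). A sketch, assuming input whose first two columns are the PG ID and its state, as in ceph pg dump pgs_brief; the function name is hypothetical.

```bash
# Hypothetical helper: count PGs in the "unknown" state per pool ID.
# Input: lines whose first two fields are "<pgid> <state>".
unknown_pgs_per_pool() {
  awk '$1 ~ /^[0-9]+\.[0-9a-f]+$/ && $2 == "unknown" {
         split($1, id, ".")   # id[1] is the pool ID
         count[id[1]]++
       }
       END { for (p in count) print p, count[p] }' | sort -n
}

# Usage: ceph pg dump pgs_brief 2>/dev/null | unknown_pgs_per_pool
```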

To keep the following steps from failing, first set the hostnames back:

Node 1:

hostnamectl set-hostname node1

Node 2:

hostnamectl set-hostname node2

On node 1, delete the vms pool:

ceph osd pool delete vms vms --yes-i-really-really-mean-it

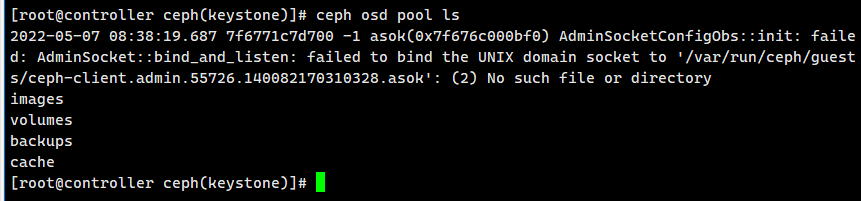
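Since Luminous the monitors refuse to delete a pool unless mon_allow_pool_delete is true. A cautious sketch that enables the flag only for the deletion and then switches it back off; the function name is hypothetical, and it assumes the flag is managed through the centralized config (ceph config set, available in Nautilus, which the output above appears to come from) rather than pinned in ceph.conf.

```bash
# Hypothetical helper: delete one pool, enabling mon_allow_pool_delete only
# for the duration of the command.
delete_pool() {
  local pool=$1 rc
  if ! ceph osd pool ls | grep -qx -- "$pool"; then
    echo "pool not found: $pool" >&2
    return 1
  fi
  ceph config set mon mon_allow_pool_delete true || return 1
  ceph osd pool delete "$pool" "$pool" --yes-i-really-really-mean-it
  rc=$?
  ceph config set mon mon_allow_pool_delete false   # restore the safety switch
  return $rc
}

# Usage: delete_pool vms
```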

Check the pools:

ceph osd pool ls

vms has been deleted.

Check ceph -s again:

[root@controller ceph(keystone)]# ceph -s

2022-05-07 08:40:41.131 7f86eb0dc700 -1 asok(0x7f86e4000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.55936.140217327562424.asok': (2) No such file or directory

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

4 pool(s) have no replicas configured

application not enabled on 3 pool(s)

1/3 mons down, quorum node1,node2

services:

mon: 3 daemons, quorum node1,node2 (age 14h), out of quorum: node1_bak

mgr: node1(active, since 14h), standbys: node2

osd: 6 osds: 6 up (since 14h), 6 in (since 22h)

data:

pools: 4 pools, 512 pgs

objects: 734 objects, 2.2 GiB

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 512 active+clean

The unknown PGs are gone; all that is left is to recreate vms:

ceph osd pool create vms 128 128

Point the pool at its CRUSH rule:

ceph osd pool set vms crush_rule vm-disk
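The recreated pool can also be tagged with the application that uses it, which addresses one of the "application not enabled on N pool(s)" warnings that ceph -s keeps showing. A sketch wrapping the two commands above; the function name is hypothetical and rbd is an assumption (the usual application for a Nova vms pool). ceph osd pool application enable is the standard command, and rbd pool init <pool> would set the same tag.

```bash
# Hypothetical helper: recreate a replicated pool, bind it to a CRUSH rule
# and tag it with the application that will use it.
recreate_pool() {
  local pool=$1 pg_num=$2 rule=$3 app=${4:-rbd}
  ceph osd pool create "$pool" "$pg_num" "$pg_num" || return 1
  ceph osd pool set "$pool" crush_rule "$rule" || return 1
  ceph osd pool application enable "$pool" "$app"
}

# Usage, matching the commands above: recreate_pool vms 128 vm-disk
```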

Check the OSD layout:

ceph osd tree

The rule is applied and the custom roots contain their OSDs again:

[root@controller ceph(keystone)]# ceph osd tree

2022-05-07 08:44:36.093 7fcf9ed4c700 -1 asok(0x7fcf98000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.56195.140529585106616.asok': (2) No such file or directory

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-9 1.74597 root vm-disk

4 hdd 0.87299 osd.4 up 1.00000 1.00000

5 hdd 0.87299 osd.5 up 1.00000 1.00000

-8 1.74597 root cache-disk

2 hdd 0.87299 osd.2 up 1.00000 1.00000

3 hdd 0.87299 osd.3 up 1.00000 1.00000

-7 1.09000 root hdd-disk

0 hdd 0.54500 osd.0 up 1.00000 1.00000

1 hdd 0.54500 osd.1 up 1.00000 1.00000

-1 3.25800 root default

-3 1.62900 host node1

0 hdd 0.58600 osd.0 up 1.00000 1.00000

2 hdd 0.16399 osd.2 up 1.00000 1.00000

4 hdd 0.87900 osd.4 up 1.00000 1.00000

-5 1.62900 host node2

1 hdd 0.58600 osd.1 up 1.00000 1.00000

3 hdd 0.16399 osd.3 up 1.00000 1.00000

5 hdd 0.87900 osd.5 up 1.00000 1.00000

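What remains is the error that every command keeps printing. It comes from the client admin socket, not from authentication: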

2022-05-07 08:44:36.093 7fcf9ed4c700 -1 asok(0x7fcf98000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.56195.140529585106616.asok': (2) No such file or directory
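This message is a warning, and the command itself still runs: the client tries to create its admin socket under /var/run/ceph/guests/ (presumably the admin socket path set in the [client] section of ceph.conf, as is common in OpenStack setups), and that directory does not exist. Because /var/run is a tmpfs on systemd-based distributions, a directory created by hand disappears at reboot. One way to have it recreated at every boot is a systemd-tmpfiles entry; the file name and the 0777 root-owned mode (mirroring the intent of the script below) are assumptions.

```bash
# Hypothetical helper: write a tmpfiles.d entry so systemd recreates the
# client admin-socket directory at every boot.
write_guest_tmpfiles() {
  local conf=${1:-/etc/tmpfiles.d/ceph-guests.conf}
  cat > "$conf" <<'EOF'
# Type Path                 Mode User Group Age
d      /run/ceph/guests     0777 root root  -
EOF
}

# Apply immediately without rebooting:
#   write_guest_tmpfiles && systemd-tmpfiles --create /etc/tmpfiles.d/ceph-guests.conf
```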

Write a script that restarts Ceph on node1:

cd /opt/sys_sh/

vi restart_ceph.sh

#!/bin/bash

mondirfile=/var/lib/ceph/mon/ceph-node1/store.db

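# Only this monitor's data directory; a glob like /var/lib/ceph/mon/* would also wipe other monitors stored there, such as node1_bak

mondir=/var/lib/ceph/mon/ceph-node1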

runguest=/var/run/ceph/guests/

logkvm=/var/log/qemu/

crushmap=/etc/ceph/newcrushmap

host=node1

host2=controller

# Switch to the hostname used in the CRUSH map (node1) while the daemons start

echo "Setting hostname to $host"

hostnamectl set-hostname $host

cd /etc/ceph

echo "Checking ceph-mon and rebuilding/restarting it if needed"

if [ "$(netstat -nltp|grep ceph-mon|grep 6789|wc -l)" -eq "0" ]; then

sleep 1

if [ -e "$mondirfile" ]; then

sleep 1

else

sleep 1

rm -rf $mondir

ceph-mon --cluster ceph -i $host --mkfs --monmap /etc/ceph/monmap --keyring /etc/ceph/monkeyring -c /etc/ceph/ceph.conf

chown -R ceph:ceph $mondir

fi

systemctl reset-failed ceph-mon@node1.service

systemctl start ceph-mon@node1.service

else

sleep 1

fi

if [ "$(netstat -nltp|grep ceph-mon|grep 6781|wc -l)" -eq "0" ]; then

sleep 1

ceph-mon -i node1_bak --public-addr 10.0.0.2:6781

else

sleep 1

fi

echo "Restarting ceph-mgr, ceph-osd and related services"

# Start the mgr only if no ceph-mgr process is running

if ! pgrep -x ceph-mgr > /dev/null; then

sleep 1

systemctl reset-failed ceph-mgr@node1.service

systemctl start ceph-mgr@node1.service

else

sleep 1

fi

if [ "$(ps -e|grep ceph-osd|wc -l)" -eq "$(lsblk |grep osd|wc -l)" ]; then

sleep 1

else

sleep 1

systemctl reset-failed ceph-osd@0.service

systemctl start ceph-osd@0.service

systemctl reset-failed ceph-osd@2.service

systemctl start ceph-osd@2.service

systemctl reset-failed ceph-osd@4.service

systemctl start ceph-osd@4.service

fi

if [ -d "$runguest" -a -d "$logkvm" ]; then

sleep 1

else

sleep 1

mkdir -p $runguest $logkvm

# Make the directories world-writable so qemu/libvirt clients can create sockets and logs there

chmod -R 777 $runguest $logkvm

fi

echo "Re-applying the CRUSH map"

ceph osd setcrushmap -i $crushmap

echo "Setting hostname back to $host2"

hostnamectl set-hostname $host2
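The repeated reset-failed/start pairs in the script can be folded into a loop. A sketch with a hypothetical helper name; it issues the same systemctl commands as the script, in the same order.

```bash
# Hypothetical helper: clear the failed state of ceph-<type>@<id> units and start them.
start_ceph_units() {
  local type=$1 id
  shift
  for id in "$@"; do
    systemctl reset-failed "ceph-$type@$id.service"
    systemctl start "ceph-$type@$id.service"
  done
}

# Usage on node1: start_ceph_units osd 0 2 4
```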

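Run ceph -s again. The node1_bak monitor is back in quorum, all 640 PGs are active+clean, and the admin-socket error is no longer printed: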

[root@node1 sys_sh(keystone)]# ceph -s

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

5 pool(s) have no replicas configured

application not enabled on 3 pool(s)

services:

mon: 3 daemons, quorum node1,node2,node1_bak (age 70s)

mgr: node1(active, since 15h), standbys: node2

osd: 6 osds: 6 up (since 15h), 6 in (since 23h)

data:

pools: 5 pools, 640 pgs

objects: 734 objects, 2.2 GiB

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 640 active+clean

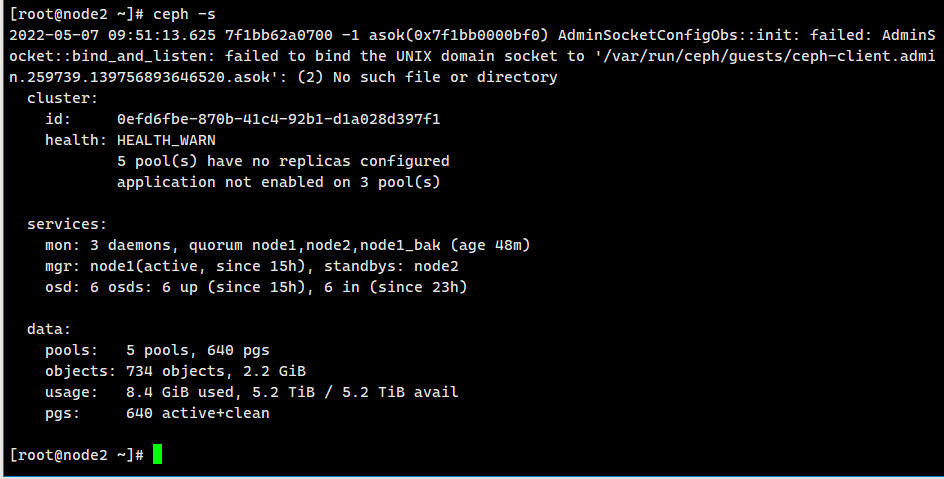
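Instead of reading the numbers by eye, the data section of ceph -s can be checked by comparing the total from the pools line with the active+clean count. A sketch that parses the plain-text layout shown in this post; the function name is hypothetical, and for scripting, ceph pg stat or ceph -s -f json would be sturdier sources.

```bash
# Hypothetical helper: succeed only if every PG reported by `ceph -s` is active+clean.
all_pgs_clean() {
  awk '$1 == "pools:"        { total = $4 }          # "pools: 5 pools, 640 pgs"
       $NF == "active+clean" { clean += $(NF-1) }    # "pgs: 640 active+clean" or "512 active+clean"
       END { exit !(total > 0 && clean == total) }'
}

# Usage: ceph -s | all_pgs_clean && echo "all PGs active+clean"
```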

On node 2, run ceph -s:

2022-05-07 09:51:13.625 7f1bb62a0700 -1 asok(0x7f1bb0000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.259739.139756893646520.asok': (2) No such file or directory

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

5 pool(s) have no replicas configured

application not enabled on 3 pool(s)

services:

mon: 3 daemons, quorum node1,node2,node1_bak (age 48m)

mgr: node1(active, since 15h), standbys: node2

osd: 6 osds: 6 up (since 15h), 6 in (since 23h)

data:

pools: 5 pools, 640 pgs

objects: 734 objects, 2.2 GiB

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 640 active+clean

It prints the same admin-socket error:

2022-05-07 09:51:13.625 7f1bb62a0700 -1 asok(0x7f1bb0000bf0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.admin.259739.139756893646520.asok': (2) No such file or directory

Write the equivalent restart script for node2:

cd /opt/sys_sh/

vi restart_ceph.sh

#!/bin/bash

# node2's own monitor store and data directory

mondirfile=/var/lib/ceph/mon/ceph-node2/store.db

mondir=/var/lib/ceph/mon/ceph-node2

runguest=/var/run/ceph/guests/

logkvm=/var/log/qemu/

crushmap=/etc/ceph/newcrushmap

host=node2

host2=computer

echo "Setting hostname to $host"

hostnamectl set-hostname $host

cd /etc/ceph

echo "Checking ceph-mon and rebuilding/restarting it if needed"

if [ "$(netstat -nltp|grep ceph-mon|grep 6789|wc -l)" -eq "0" ]; then

sleep 1

if [ -e "$mondirfile" ]; then

sleep 1

else

sleep 1

rm -rf $mondir

ceph-mon --cluster ceph -i $host --mkfs --monmap /etc/ceph/monmap --keyring /etc/ceph/monkeyring -c /etc/ceph/ceph.conf

chown -R ceph:ceph $mondir

fi

systemctl reset-failed ceph-mon@node2.service

systemctl start ceph-mon@node2.service

else

sleep 1

fi

echo "Restarting ceph-mgr, ceph-osd and related services"

# Start the mgr only if no ceph-mgr process is running

if ! pgrep -x ceph-mgr > /dev/null; then

sleep 1

systemctl reset-failed ceph-mgr@node2.service

systemctl start ceph-mgr@node2.service

else

sleep 1

fi

if [ "$(ps -e|grep ceph-osd|wc -l)" -eq "$(lsblk |grep osd|wc -l)" ]; then

sleep 1

else

sleep 1

systemctl reset-failed ceph-osd@1.service

systemctl start ceph-osd@1.service

systemctl reset-failed ceph-osd@3.service

systemctl start ceph-osd@3.service

systemctl reset-failed ceph-osd@5.service

systemctl start ceph-osd@5.service

fi

if [ -d "$runguest" -a -d "$logkvm" ]; then

sleep 1

else

sleep 1

mkdir -p $runguest $logkvm

# Make the directories world-writable so qemu/libvirt clients can create sockets and logs there

chmod -R 777 $runguest $logkvm

fi

echo "Setting hostname back to $host2"

hostnamectl set-hostname $host2
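The two scripts differ mainly in a few per-node values: the temporary Ceph hostname, the hostname to restore, and the local OSD IDs (node1 additionally starts the node1_bak monitor on port 6781 and re-applies newcrushmap). A sketch of a hypothetical lookup that keeps those values in one place, so a single script could serve both nodes; the values are taken from the two scripts above.

```bash
# Hypothetical lookup: per-node values that differ between the two scripts.
# Prints "<Ceph hostname> <hostname to restore> <local OSD ids...>".
node_profile() {
  case $1 in
    node1) echo "node1 controller 0 2 4" ;;
    node2) echo "node2 computer 1 3 5" ;;
    *) echo "unknown node: $1" >&2; return 1 ;;
  esac
}

# Usage:
#   read -r host host2 osd_ids <<< "$(node_profile node2)"
#   for id in $osd_ids; do systemctl start "ceph-osd@$id.service"; done
```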

Run ceph -s on node2: the error is gone here too.

[root@node2 sys_sh]# ceph -s

cluster:

id: 0efd6fbe-870b-41c4-92b1-d1a028d397f1

health: HEALTH_WARN

5 pool(s) have no replicas configured

application not enabled on 3 pool(s)

services:

mon: 3 daemons, quorum node1,node2,node1_bak (age 59m)

mgr: node1(active, since 16h), standbys: node2

osd: 6 osds: 6 up (since 16h), 6 in (since 24h)

data:

pools: 5 pools, 640 pgs

objects: 734 objects, 2.2 GiB

usage: 8.4 GiB used, 5.2 TiB / 5.2 TiB avail

pgs: 640 active+clean

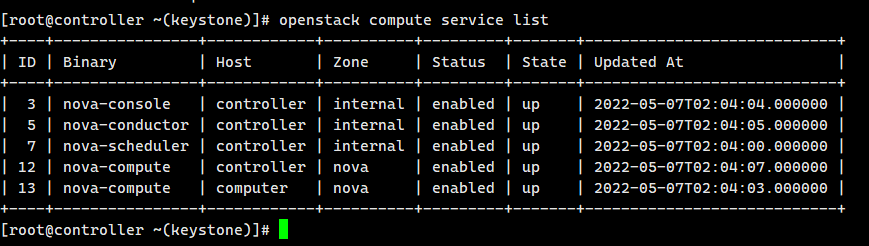

Back on the controller node, check the compute services; both compute nodes are connected and up:

openstack compute service list

[root@controller ~(keystone)]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------------+----------+---------+-------+----------------------------+

| 3 | nova-console | controller | internal | enabled | up | 2022-05-07T02:04:04.000000 |

| 5 | nova-conductor | controller | internal | enabled | up | 2022-05-07T02:04:05.000000 |

| 7 | nova-scheduler | controller | internal | enabled | up | 2022-05-07T02:04:00.000000 |

| 12 | nova-compute | controller | nova | enabled | up | 2022-05-07T02:04:07.000000 |

| 13 | nova-compute | computer | nova | enabled | up | 2022-05-07T02:04:03.000000 |

+----+----------------+------------+----------+---------+-------+----------------------------+
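To script this check, the OpenStack client can print only the needed columns (-f value with -c Binary -c Host -c State are standard output options of the openstack CLI), and any service whose state is not up can then be reported. A sketch with a hypothetical function name.

```bash
# Hypothetical helper: print "<binary>@<host>" for every compute service that is not up.
# Input: `openstack compute service list -f value -c Binary -c Host -c State`
down_compute_services() {
  awk 'NF == 3 && $3 != "up" { print $1 "@" $2 }'
}

# Usage:
#   openstack compute service list -f value -c Binary -c Host -c State | down_compute_services
```

Empty output means every listed service reports up, as in the table above.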
