Crawling all of a site's links with Python

Using oschina as an example:

Create the project

$ scrapy startproject oschina

$ cd oschina

Configuration: edit settings.py and add the following (mainly the user agent and the pipelines):

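USER_AGENT = 'Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0'
LOG_LEVEL = 'ERROR'        # only print errors
RETRY_ENABLED = False      # do not retry failed requests
DOWNLOAD_TIMEOUT = 10      # seconds
ITEM_PIPELINES = {
    # must name the pipeline class actually defined in pipelines.py
    'oschina.pipelines.OschinaPipeline': 300,
}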

Edit items.py as follows:

# -*- coding: utf-8 -*-
import scrapy


class OschinaItem(scrapy.Item):
    # Field names must match the keys used by the spider and the pipeline
    link = scrapy.Field()
    link_text = scrapy.Field()

Edit pipelines.py as follows:

# -*- coding: utf-8 -*-
import json

from scrapy.exceptions import DropItem


class OschinaPipeline(object):

    def __init__(self):
        # JSON Lines output; utf-8 so ensure_ascii=False can write Chinese text
        self.file = open('result.jl', 'w', encoding='utf-8')
        self.seen = set()  # set used for duplicate detection

    def process_item(self, item, spider):
        if item['link'] in self.seen:
            raise DropItem('Duplicate link %s' % item['link'])
        self.seen.add(item['link'])
        line = json.dumps(dict(item), ensure_ascii=False) + '\n'  # one item per line
        self.file.write(line)
        return item

    def close_spider(self, spider):
        self.file.close()
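The dedupe-and-write logic of OschinaPipeline does not depend on Scrapy itself. The sketch below reproduces it with only the standard library so it can be tried in isolation; it assumes items are plain dicts with a `link` key, uses an in-memory `io.StringIO` in place of result.jl, and the class name `JsonLinesDedupWriter` is hypothetical.

```python
import io
import json


class JsonLinesDedupWriter:
    """Hypothetical stand-in for OschinaPipeline: drops repeated links, writes JSON Lines."""

    def __init__(self, fp):
        self.fp = fp
        self.seen = set()  # links already written

    def process_item(self, item):
        if item['link'] in self.seen:
            return None  # the real pipeline raises DropItem here
        self.seen.add(item['link'])
        # one JSON object per line; ensure_ascii=False keeps Chinese text readable
        self.fp.write(json.dumps(item, ensure_ascii=False) + '\n')
        return item


buf = io.StringIO()
writer = JsonLinesDedupWriter(buf)
for it in [
    {'link': 'http://www.oschina.net/news', 'link_text': '资讯'},
    {'link': 'http://www.oschina.net/blog', 'link_text': '博客'},
    {'link': 'http://www.oschina.net/news', 'link_text': '资讯'},  # duplicate, dropped
]:
    writer.process_item(it)

# Reading JSON Lines back: parse each non-empty line on its own
records = [json.loads(line) for line in buf.getvalue().splitlines() if line]
print(len(records))  # 2
```

Because every line is a complete JSON document, the file can be read line by line without loading it all at once, which only works if each record ends with a newline.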

Generate a spider from the template, then modify it:

$ scrapy genspider scrapy_oschina oschina.net # scrapy genspider <spider name> <domain to crawl>

Edit spiders/scrapy_oschina.py:

# -*- coding: utf-8 -*-
import scrapy
from scrapy.http import TextResponse

from oschina.items import OschinaItem


class ScrapyOschinaSpider(scrapy.Spider):
    name = "scrapy_oschina"
    allowed_domains = ["oschina.net"]
    start_urls = (
        'http://www.oschina.net/',
    )

    def parse(self, response):
        if not isinstance(response, TextResponse):
            return  # skip non-HTML responses (images, archives, ...) that have no selectors
        links_in_a_page = response.xpath('//a[@href]')  # all links on the page
        for link_sel in links_in_a_page:
            link = link_sel.xpath('@href').get()  # each URL
            # skip empty hrefs, in-page anchors and pseudo-links
            if not link or link.startswith(('#', 'javascript:', 'mailto:')):
                continue
            link = response.urljoin(link)  # resolve relative URLs against the page URL
            # Issue a new request with self.parse as the callback (recursion);
            # Scrapy's duplicate filter and allowed_domains keep it finite
            yield scrapy.Request(link, callback=self.parse)
            item = OschinaItem()
            item['link'] = link
            link_text = link_sel.xpath('text()').get()  # link text; None if absent
            item['link_text'] = link_text.strip() if link_text else None
            # print(item['link'], item['link_text'])  # uncomment to show results on screen
            yield item
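The spider's loop rests on three things: resolving relative hrefs against the page URL (plain string concatenation gets this wrong), Scrapy's built-in duplicate-request filter, and the `allowed_domains` check. The standard-library sketch below imitates that loop over a small in-memory site so it runs without network access. `LinkParser`, `crawl` and the `pages` dict are hypothetical names, and `html.parser` is swapped in for Scrapy's XPath selectors (it collects all text inside `<a>`, not only direct text nodes).

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects [href, text] pairs for every <a href="..."> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current = None  # the link whose text is being collected

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            if href:
                self._current = [href, '']
                self.links.append(self._current)

    def handle_endtag(self, tag):
        if tag == 'a':
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self._current[1] += data


def crawl(pages, start_url, allowed_domain):
    """Breadth-first crawl of `pages` (URL -> HTML string); returns {link: link text}."""
    requested = {start_url}  # plays the role of Scrapy's duplicate-request filter
    queue = deque([start_url])
    found = {}
    while queue:
        url = queue.popleft()
        html = pages.get(url)
        if html is None:
            continue  # a page we cannot "download"
        parser = LinkParser()
        parser.feed(html)
        parser.close()
        for href, text in parser.links:
            if href.startswith(('#', 'javascript:', 'mailto:')):
                continue
            link = urljoin(url, href)  # resolve relative hrefs against the page URL
            found.setdefault(link, text.strip() or None)  # keep the first text seen
            host = urlparse(link).hostname or ''
            on_site = host == allowed_domain or host.endswith('.' + allowed_domain)
            if on_site and link not in requested:  # like allowed_domains + dupefilter
                requested.add(link)
                queue.append(link)
    return found


base = 'http://www.oschina.net/news/1'
print(base + '/blog')          # http://www.oschina.net/news/1/blog (naive concatenation)
print(urljoin(base, '/blog'))  # http://www.oschina.net/blog

pages = {
    'http://www.oschina.net/': '<a href="/news">资讯</a> <a href="blog/">博客</a> '
                               '<a href="javascript:void(0)">x</a>',
    'http://www.oschina.net/news': '<a href="http://www.oschina.net/">首页</a> '
                                   '<a href="https://example.com/">外链</a>',
    'http://www.oschina.net/blog/': '<a href="/news">资讯</a>',
}
found = crawl(pages, 'http://www.oschina.net/', 'oschina.net')
for link, text in sorted(found.items()):
    print(link, text)
```

The off-site link (example.com) is recorded as an item but never requested, which mirrors how the spider above still yields items for external links while Scrapy's offsite filtering drops the corresponding requests.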

Run:

scrapy crawl scrapy_oschina

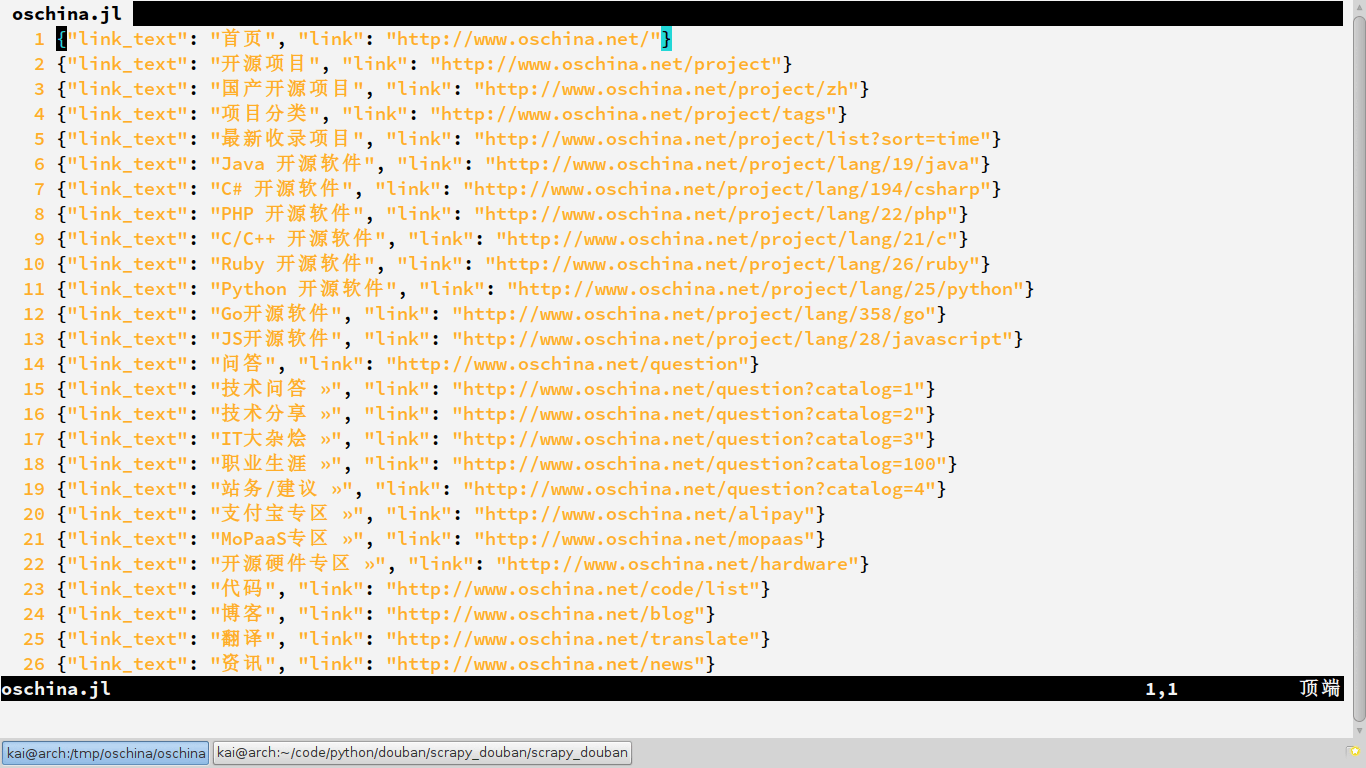

The results are saved to result.jl. This pipeline is only meant to show how to write an item pipeline; if you want to save all scraped items to a single JSON file, use Feed exports instead.

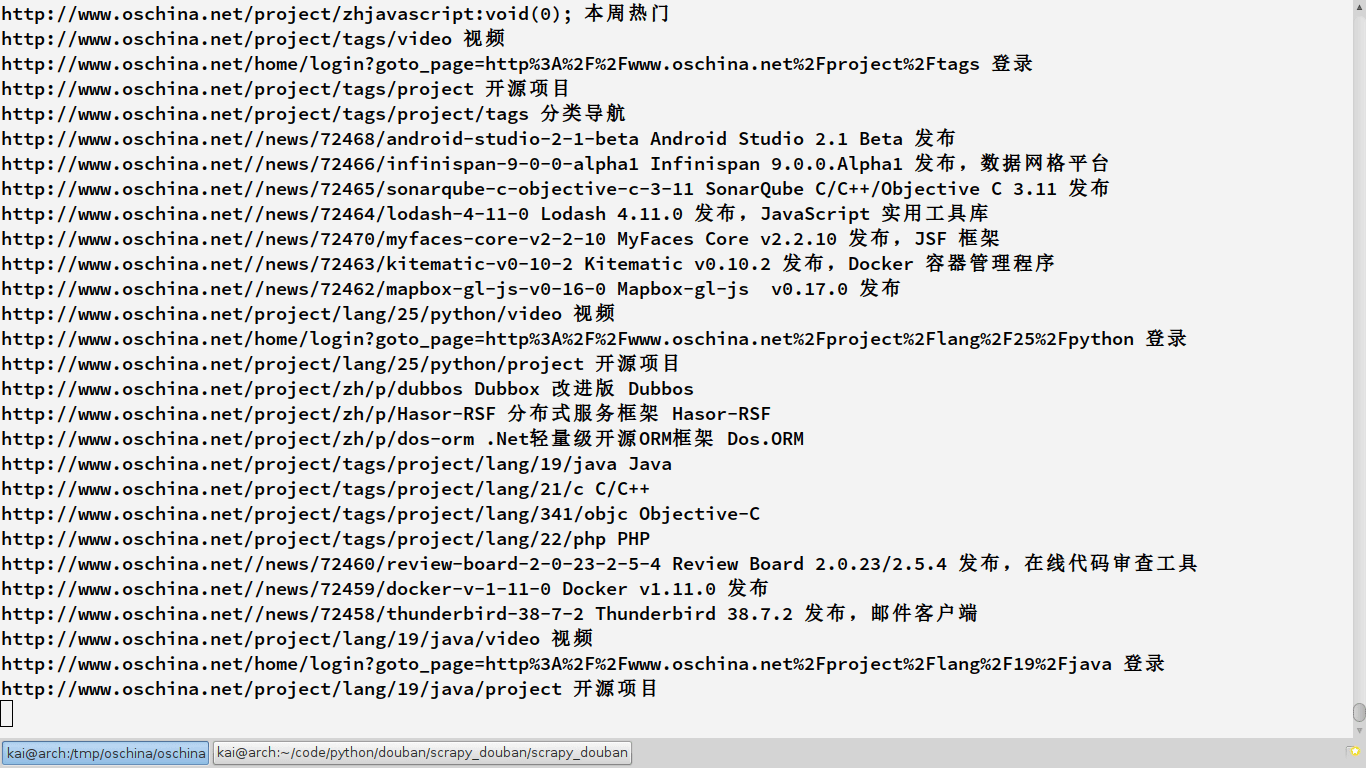

截图如下:
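For the Feed exports route, Scrapy 2.1 and later accept a `FEEDS` dict in settings.py (older versions used FEED_URI and FEED_FORMAT). The fragment below is a minimal sketch assuming such a version; `items.json` is only an example file name. For a one-off run, `scrapy crawl scrapy_oschina -o items.json` has a similar effect from the command line.

```python
# settings.py: let Scrapy's feed exports write all items to one file
FEEDS = {
    'items.json': {
        'format': 'json',    # other built-in formats include 'jsonlines', 'csv', 'xml'
        'encoding': 'utf8',  # write Chinese text as-is instead of \uXXXX escapes
    },
}
```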

Saving data to MongoDB

Add the following to pipelines.py:

import pymongo


class MongoPipeline(object):

    def __init__(self, mongo_host, mongo_port, mongo_db):
        self.mongo_host = mongo_host
        self.mongo_port = mongo_port
        self.mongo_db = mongo_db

    @classmethod
    def from_crawler(cls, crawler):
        # Read connection settings from settings.py, falling back to a local server
        return cls(
            mongo_host=crawler.settings.get('MONGO_HOST', '127.0.0.1'),
            mongo_port=crawler.settings.getint('MONGO_PORT', 27017),
            mongo_db=crawler.settings.get('MONGO_DB', 'doubandb'),
        )

    def open_spider(self, spider):
        self.client = pymongo.MongoClient(self.mongo_host, self.mongo_port)
        self.db = self.client[self.mongo_db]

    def close_spider(self, spider):
        self.client.close()

    def process_item(self, item, spider):
        # One collection per item class, e.g. "OschinaItem"
        collection_name = item.__class__.__name__
        # insert() was removed in PyMongo 4; insert_one() works in PyMongo 3 and 4
        self.db[collection_name].insert_one(dict(item))
        return item
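MongoPipeline names the collection after the item class, so every OschinaItem lands in a collection called OschinaItem. The sketch below checks that routing rule without a running MongoDB server; `FakeCollection`, `FakeDatabase` and the dict-based `OschinaItem` are hypothetical stand-ins, and only the method name `insert_one` mirrors real pymongo.

```python
from collections import defaultdict


class FakeCollection:
    """Hypothetical in-memory stand-in for a pymongo collection."""

    def __init__(self):
        self.docs = []

    def insert_one(self, doc):
        self.docs.append(doc)


class FakeDatabase(defaultdict):
    """Hypothetical stand-in for a pymongo database: db[name] creates collections on demand."""

    def __init__(self):
        super().__init__(FakeCollection)


class OschinaItem(dict):
    """Stand-in for the scrapy.Item subclass; only its class name matters here."""


def process_item(db, item):
    # Same routing rule as MongoPipeline.process_item
    collection_name = item.__class__.__name__
    db[collection_name].insert_one(dict(item))
    return item


db = FakeDatabase()
process_item(db, OschinaItem(link='http://www.oschina.net/', link_text='首页'))
print(list(db))  # ['OschinaItem']
```

If the project later defines more item classes, each one gets its own collection automatically, which is why the pipeline needs no collection-name setting.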

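In settings.py, set MONGO_HOST (default 127.0.0.1), MONGO_PORT (default 27017) and MONGO_DB as needed (the collection is named after the item class, so no collection setting is read), and add this entry to the ITEM_PIPELINES dict: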

'oschina.pipelines.MongoPipeline': 400,

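The number sets the order: items pass through the pipelines from the lowest value to the highest, so a larger number means the pipeline runs later (values are customarily kept in the 0-1000 range).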
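As an illustration, here is a settings.py sketch with both pipelines enabled, assuming a local MongoDB; the database name `oschina` is only an example. The `sorted()` call merely imitates the ordering rule described above and is not how Scrapy is implemented internally.

```python
# settings.py (sketch): local MongoDB defaults plus both pipelines
MONGO_HOST = '127.0.0.1'
MONGO_PORT = 27017
MONGO_DB = 'oschina'  # example database name

ITEM_PIPELINES = {
    'oschina.pipelines.MongoPipeline': 400,
    'oschina.pipelines.OschinaPipeline': 300,
}

# Items visit the pipelines in ascending order of these numbers
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)  # ['oschina.pipelines.OschinaPipeline', 'oschina.pipelines.MongoPipeline']
```

With these values OschinaPipeline runs first, so any duplicate link it rejects with DropItem never reaches MongoPipeline.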

Using XmlItemExporter

Add to pipelines.py:

from scrapy import signals
from scrapy.exporters import XmlItemExporter


class XmlExportPipeline(object):

    def __init__(self):
        self.files = {}

    @classmethod
    def from_crawler(cls, crawler):
        pipeline = cls()
        # Open and close the output file together with the spider
        crawler.signals.connect(pipeline.spider_opened, signals.spider_opened)
        crawler.signals.connect(pipeline.spider_closed, signals.spider_closed)
        return pipeline

    def spider_opened(self, spider):
        file = open('%s_urls.xml' % spider.name, 'w+b')  # exporters write bytes
        self.files[spider] = file
        self.exporter = XmlItemExporter(file)
        self.exporter.start_exporting()

    def spider_closed(self, spider):
        self.exporter.finish_exporting()
        file = self.files.pop(spider)
        file.close()

    def process_item(self, item, spider):
        self.exporter.export_item(item)
        return item
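With its default arguments, XmlItemExporter wraps the output in an `<items>` root and writes each item as an `<item>` element whose children are the item fields. The standard-library sketch below parses a small hand-written document of that shape, much as one might read scrapy_oschina_urls.xml after a crawl. The sample content is made up, and the real file's whitespace and field order may differ.

```python
import xml.etree.ElementTree as ET

# Hand-written sample using the exporter's default element names (made-up data)
SAMPLE = b"""<?xml version="1.0" encoding="utf-8"?>
<items>
<item><link>http://www.oschina.net/news</link><link_text>news</link_text></item>
<item><link>http://www.oschina.net/blog</link><link_text>blog</link_text></item>
</items>"""

root = ET.fromstring(SAMPLE)
rows = [(item.findtext('link'), item.findtext('link_text')) for item in root.findall('item')]
print(rows)
```

For the real output file, `ET.parse('scrapy_oschina_urls.xml').getroot()` gives the same root element to iterate over.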

Add this entry to ITEM_PIPELINES in settings.py:

'oschina.pipelines.XmlExportPipeline':500,
